Wednesday, January 31, 2024

Dashboards: Dead or Alive? The evolution from data graveyards into data gold mines

Monday, January 29, 2024

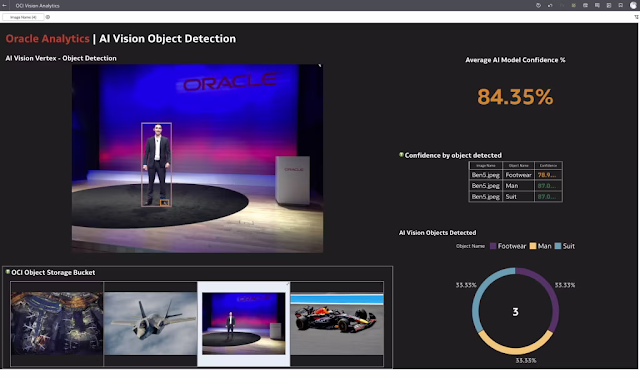

New AI capabilities with Oracle Analytics

Wednesday, January 24, 2024

Introducing Manufacturing Analytics in Oracle Fusion SCM Analytics

Timely insights into work order performance

- How much time does it take to produce my products?

- How does actual time to produce compare against planned time?

- What is the production yield for my plant?

Detect anomalies in product quality to take fast corrective action

See production impact across the entire plan-to-produce process

Create a system of insights from Oracle Cloud Application data and external data sources

Monday, January 22, 2024

Oracle Fusion HCM Analytics analyzes data to reveal Inferred Skills

- What's the difference between a software engineer and a front-end engineer?

- Is it a good idea to hire a sales engineer as a developer?

- Can a business analyst be promoted to an associate product manager?

- What's the path to success for an up-and-coming marketing manager?

- Does a team have the required skills for the project?

- Skills data remains outdated: Many intranets and HCM systems offer a way for employees to highlight their skills. However, very few employees are proactive and understand the value of sharing their talents and skills or seeking opportunities to utilize them.

- Job titles are getting blurred: With changing industry trends, jobs are evolving fast, and employees with the same titles in the same industry and region might have expanded their skills beyond their initial responsibilities. Titles are changing too - a title may have different meanings in different industries and geographies, and creative titles are often used for the same job. This makes it tough to rely on titles as a clear indication of responsibilities.

- A surplus of applications but a shortage of jobs: The number of resumes submitted for a job requisition often far exceeds the capacity of the recruiting team to review them. Additionally, the quality and fit of each resume varies, and finding the right candidate is challenging. As a result, recruiters now need to dedicate additional time to review applications to ensure hiring equity.

Introducing Inferred Skills, a new addition to Oracle Fusion HCM Analytics driven by machine learning

Hearst pilots Inferred Skills

“Being able to intelligently supplement data we have about employee skills could help us make more strategic decisions in employee development programs, ensure we have the right people for the work that needs to get done, and ultimately help with key HR outcomes like boosting employee engagement and retention.” Christin Friley, Director of HCM Analytics, Hearst

- More comprehensive employee data

- The ability to conduct more robust analytics

- An enhanced ability to support employee development and retention

- Better insights for internal mobility

Friday, January 19, 2024

Generative AI Chatbot using LLaMA-2, Qdrant, RAG, LangChain & Streamlit

- Enhanced knowledge and information retrieval: RAG enables a chatbot to pull in relevant information from a large body of documents or a database. This feature is particularly useful for chatbots that need to provide accurate, up-to-date, or detailed information that isn’t contained within the model’s pretrained knowledge base.

- Improved answer quality: By retrieving relevant documents or snippets of text as context, RAG can help a chatbot generate more accurate, detailed, and contextually appropriate responses. This capability is especially important for complex queries where the answer might not be straightforward or requires synthesis of information from multiple sources.

- Balancing generative and retrieval capabilities: Traditional chatbots are either generative (creating responses from scratch) or retrieval-based (finding the best match from a set of predefined responses). RAG allows for a hybrid approach, where the generative model can create more nuanced and varied responses based on the information retrieved, leading to more natural and informative conversations.

- Handling long-tail queries: In situations where a chatbot encounters rare or unusual queries (known as long-tail queries), RAG can be particularly useful. It can retrieve relevant information even for these less common questions, allowing the generative model to craft appropriate responses.

- Continuous learning and adaptation: Because RAG-based systems can pull in the latest information from external sources, they can remain up-to-date and adapt to new information or trends without requiring a full retraining of the model. This ability is crucial for maintaining the relevance and accuracy of a chatbot over time.

- Customization and specialization: For chatbots designed for specific domains, such as medical, legal, or technical support, RAG can be tailored to retrieve information from specialized databases or documents, making the chatbot more effective in its specific context.

The need for vector databases and embeddings

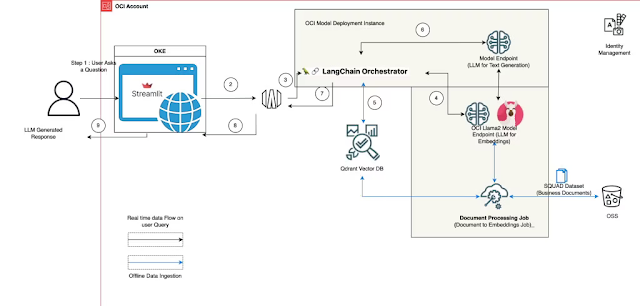

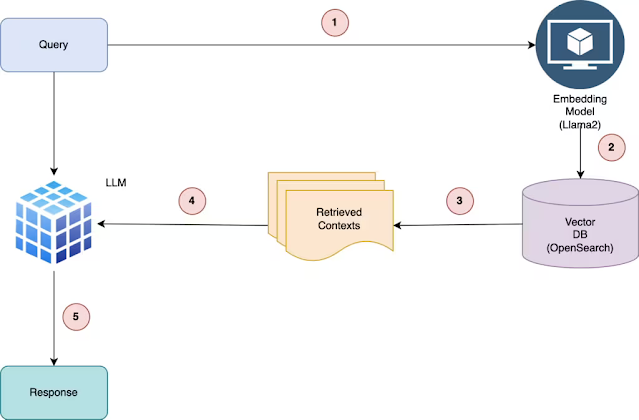

High-level solution overview

- The user provides a question through the Streamlit web application.

- The Streamlit application calls the predict API of the model deployment.

- The model deployment invokes LangChain to convert the user's question into an embedding.

- The function invokes a Qdrant Service API to send the request to the vector database to find the top k similar documents.

- The function creates a prompt with the user query and the similar documents as context and asks the large language model (LLM) to generate a response.

- The function returns the response to the API gateway, which sends it to the Streamlit server.

- The user can view the response on the Streamlit application.
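As a rough illustration of the prompt-building step in the overview above, the following sketch shows one way to combine the user query with the retrieved documents before calling the LLM. The function name `build_prompt` and the instruction wording are assumptions made for this example; they are not taken from the original deployment.

```python
from typing import Sequence


def build_prompt(question: str, documents: Sequence[str]) -> str:
    """Combine the user question and retrieved documents into one prompt.

    Hypothetical helper: the prompt layout is an assumption, not the
    format used by the original solution.
    """
    # Number each retrieved document so the model can tell them apart.
    context = "\n\n".join(
        f"[{i}] {doc.strip()}" for i, doc in enumerate(documents, start=1)
    )
    return (
        "Answer the question using only the context below. "
        "If the context is not sufficient, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question.strip()}\n"
        "Answer:"
    )


if __name__ == "__main__":
    docs = ["Qdrant is a vector database.", "Streamlit serves the web UI."]
    print(build_prompt("What is Qdrant?", docs))
```

Keeping the prompt assembly in a small function of its own makes it easy to adjust the instructions or the number of context documents without touching the retrieval code.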

Getting started

- Setting up the Qdrant database instance

- Building Qdrant with Langchain

- Setting up RAG

- Deploying a Streamlit server

- Pass the query to the embedding model to semantically represent it as an embedded query vector.

- Pass the embedded query vector to our vector database.

- Retrieve the top-k relevant contexts, measured by k-nearest neighbors (KNN) between the query embedding and all the embedded chunks in our knowledge base.

- Pass the query text and retrieved context text to our LLM.

- The LLM generates a response using the provided content.
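The retrieval steps above can be sketched in a few lines of plain Python. This is a toy illustration only: the bag-of-words `embed` function stands in for a real embedding model, the in-memory list stands in for the Qdrant collection, and all function names are hypothetical. In the actual solution, the embedding model and Qdrant perform these steps.

```python
import math
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a word-count vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query: str, chunks: List[str], k: int = 2) -> List[Tuple[float, str]]:
    """Return the k chunks most similar to the query, best first."""
    query_vec = embed(query)
    scored = [(cosine(query_vec, embed(chunk)), chunk) for chunk in chunks]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)[:k]


if __name__ == "__main__":
    knowledge_base = [
        "qdrant stores vectors",
        "streamlit builds web apps",
        "llama is a language model",
    ]
    for score, chunk in top_k("what stores vectors", knowledge_base, k=2):
        print(f"{score:.3f}  {chunk}")
```

A production vector database avoids scoring every chunk on each query by using an approximate nearest-neighbor index, but the ranking idea is the same: embed the query, compare it with the stored embeddings, and keep the closest k.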

Hardware requirements

Prerequisites

- Configure a custom subnet with a security list to allow ingress into any port from the IPs originating within the CIDR block of the subnet to ensure that the hosts on the subnet can connect to each other during distributed training.

- Create an Object Storage bucket to store the documents that are provided at the time of ingestion into the vector database.

- Set the policies to allow OCI Data Science resources to access OCI Object Storage buckets, networking, and others.

- Obtain an access token from HuggingFace to download the Llama2 model. To fine-tune the model, you must first access the pretrained model, which you can obtain from Meta or HuggingFace. In this example, we use the HuggingFace access token to download the pretrained model from HuggingFace by setting the HUGGING_FACE_HUB_TOKEN environment variable.

- Set up a log group and logs in the OCI Logging service to monitor the progress of the training.

- Go to OCI Logging and select Log Groups.

- Select an existing log group or create one.

- In the log group, create one predict log and one access log.

- Select Create custom log.

- Specify a name (predict|access) and select the log group you want to use.

- Under "Create agent configuration," select Add configuration later.

- Select Create agent configuration.

- Notebook session: Used to initiate the distributed training and to access the fine-tuned model

- Install the latest version of Oracle Accelerated Data Science (ADS) with the command: pip install "oracle-ads[opctl]" -U
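Because the prerequisites above rely on the HUGGING_FACE_HUB_TOKEN environment variable, it can help to check for it before starting a long download. The following is a minimal sketch of such a check; the helper name `get_hf_token` and the error message are assumptions for illustration and are not part of the original walkthrough.

```python
import os
from typing import Mapping, Optional


def get_hf_token(env: Optional[Mapping[str, str]] = None) -> str:
    """Return the HuggingFace token from the environment, or fail early.

    Hypothetical helper: `env` defaults to os.environ and is injectable
    so the check can be exercised without touching the real environment.
    """
    source = os.environ if env is None else env
    token = source.get("HUGGING_FACE_HUB_TOKEN", "").strip()
    if not token:
        raise RuntimeError(
            "HUGGING_FACE_HUB_TOKEN is not set; export it before "
            "downloading the pretrained model."
        )
    return token


if __name__ == "__main__":
    try:
        get_hf_token()
        print("HuggingFace token found.")
    except RuntimeError as err:
        print(err)
```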

Deploying the Llama2 model

Setting up the Qdrant database

Initialize Qdrant with Langchain

Setting up RAG

Hosting Streamlit application

Wednesday, January 17, 2024

Mitratech avoids SLA breaches with OCI Application Performance Monitoring

SLAs, service availability, and Synthetic Monitoring OCI APM techniques

Monitoring SLA challenges

Mitratech’s journey featuring OCI APM

- 8 APM domains configured to monitor applications

- 10+ applications configured with OCI APM’s Synthetic Monitoring

- 350+ Java applications configured with OCI APM

- Integrations with PagerDuty and Grafana

- Trace Explorer is used to view traces and spans and identify performance issues and bottlenecks in the monitored application, from browser to database

- Diagnostics features like CPU consumption, allocated memory, and GC impact per thread, as well as a collection of thread stack snapshots including state and lock information

Tuesday, January 16, 2024

Is the Oracle 1Z0-084 Certification Worth It? Exploring the Benefits

Oracle certifications have long been recognized as valuable assets in the realm of IT and database management. Among the array of certifications Oracle offers, the 1Z0-084 exam stands out, focusing on Oracle Database 19c - Performance Management and Tuning.

Before delving into the benefits, let's uncover the essence of the 1Z0-084 exam. This certification concentrates on honing skills related to Oracle Database 19c, emphasizing performance management and tuning - an essential aspect in today's dynamic technological landscape.

The Allure of Oracle 1Z0-084 Certification

Elevating Your Career Trajectory

In an era where businesses hinge on robust database systems, professionals with the 1Z0-084 certification command attention. It is a testament to your proficiency in improving database performance, positioning you as a valuable asset for employers searching for top-tier talent.

Whether you are eyeing a promotion within your current organization or seeking to make a mark in a new venture, the 1Z0-084 certification can be the catalyst that propels your career to new heights.

Unparalleled Expertise in Performance Management

Obtaining the 1Z0-084 certification goes beyond showcasing theoretical knowledge - it demonstrates your mastery of the intricate art of performance management and tuning within Oracle Database 19c. This level of expertise can be a game-changer, opening doors to challenging and rewarding projects that demand a nuanced understanding of database optimization.

The certification validates your hands-on skills, assuring employers that you comprehend the principles and can effectively apply them in real-world scenarios.

Industry Recognition and Credibility with Oracle 1Z0-084 Certification

Oracle certifications carry weight globally, and the 1Z0-084 is no exception. Holding this certification is akin to having a stamp of approval from one of the industry leaders. It adds a layer of credibility to your professional profile, making you a sought-after expert in database optimization.

Imagine the benefit of having a certification that is recognized and respected worldwide. It increases your credibility and reflects positively on the organizations you associate with, further solidifying your position as a reliable and knowledgeable professional.

Navigating the Oracle 1Z0-084 Certification Journey

Resources Galore

The path to 1Z0-084 success is paved with ample resources provided by Oracle. From comprehensive study materials to practice exams and detailed documentation, Oracle ensures that aspirants can confidently tackle the certification journey.

One of the key benefits here is the structured approach to learning. The resources provided are curated to cover all aspects of the exam syllabus, ensuring that you have a holistic understanding of the concepts and skills needed to ace the certification.

Community Support

Joining the Oracle community is not just about preparing for an exam; it's about becoming part of a network of professionals who share a common goal. The community provides a platform to connect with fellow certification seekers and experienced professionals, creating a collaborative space where knowledge and experiences are freely shared.

Engaging with the community can significantly enhance your preparation. You gain insights, tips, and the assurance that you are not alone in your journey. The community's collective wisdom becomes a valuable resource in your quest for 1Z0-084 excellence.

Making the Decision: Is Oracle 1Z0-084 Certification Truly Worth It?

The Return on Investment

Investing time and effort into obtaining the 1Z0-084 certification is not just a commitment; it's an investment in your professional future. In terms of career opportunities and enhanced expertise, the return far outweighs the initial commitment.

Consider this certification as a strategic move in your career chessboard. It's not just about immediate advancement; it's about setting yourself up for long-term success. Organizations value professionals who continually invest in their skill sets, and the 1Z0-084 certification is a testament to your commitment to excellence.

Adapting to Industry Trends

In the fast-paced and ever-evolving landscape of IT, staying ahead of the curve is not a luxury; it's a necessity. The 1Z0-084 certification ensures you keep pace with industry trends and lead in performance management and tuning.

Technology waits for no one, and the skills that are relevant today will need updating tomorrow. The 1Z0-084 certification equips you with the latest knowledge and practices, making you a proactive player in the tech arena. It's a commitment to staying relevant and being a driving force in the face of technological advancements.

Conclusion

In conclusion, the Oracle 1Z0-084 certification is more than a mere credential; it is a gateway to opportunities and professional growth. As you embark on this certification journey, remember that the benefits extend beyond the immediate. It's not just about passing an exam; it's about honing skills that will shape your career trajectory.

Whether you are an aspiring database professional eager to make your mark or a seasoned expert looking to stay ahead, the Oracle 1Z0-084 certification is undoubtedly worthwhile. It goes beyond the checkboxes on a resume; it's a testament to your dedication to excellence in database management.

In a world of fierce competition and ever-shifting technological landscapes, having the Oracle 1Z0-084 certification on your resume is not just an asset - it's a strategic advantage. It signals to the industry that you are not just adapting to change; you are driving it.

So, is the Oracle 1Z0-084 Certification worth it? The resounding answer is yes. It's an investment, a validation, and a ticket to a future where your performance management and tuning expertise sets you apart in the vast sea of IT professionals.

Monday, January 15, 2024

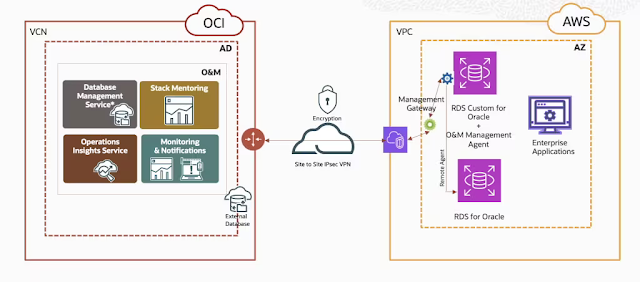

OCI Observability and Management for Multi-Cloud Database: Amazon RDS

- IPsec connectivity between OCI and AWS Cloud

- Provisioning of AWS Native RDS and RDS Custom Databases on Amazon

- Installing Management Gateway and Agent on RDS instances

- Discover the RDS database as an External Database

- Enable Database Management, Stack Monitoring and Operations Insights

- Discover Database System on Database Management

IPsec Connectivity between OCI and AWS

Provision RDS and RDS Custom

- Amazon RDS Database for Oracle.

- Amazon RDS Custom for Oracle.

- Database on AWS EC2 instance.

Install Management Gateway and Management Agent

- Download and install the Management Gateway on the EC2 instance that is in the public subnet.

- Install the Management Agent on the RDS EC2 instance.

- Check the status of the Management Gateway and Management Agent on the OCI console under Observability and Management.

- Install the required O&M service plugins on the Management Agent.