Friday, October 28, 2022

Announcing Oracle Transaction Manager for Microservices Free

Friday, October 21, 2022

New Autonomous Data Warehouse Enhancements to Streamline Data Preparation and Sharing for Analytics

Business Analysts

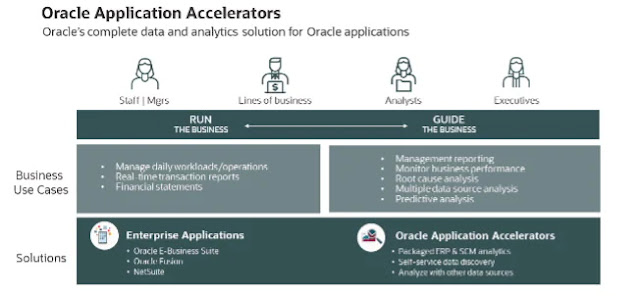

Oracle Applications Customers

Data Lake Users

All customers with new collaboration capabilities

Wednesday, October 19, 2022

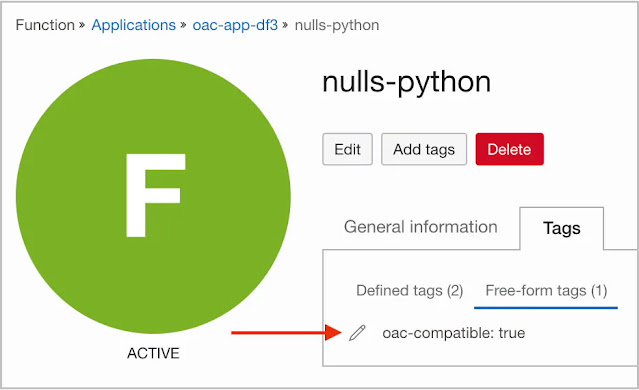

Make Rich Data Even Richer – Use Functions for Custom Data Enrichment in Oracle Analytics Cloud

1. Prerequisites.

2. Creating the function.

3. Registering the function.

4. Invoking the function.

5. Troubleshooting.

Monday, October 17, 2022

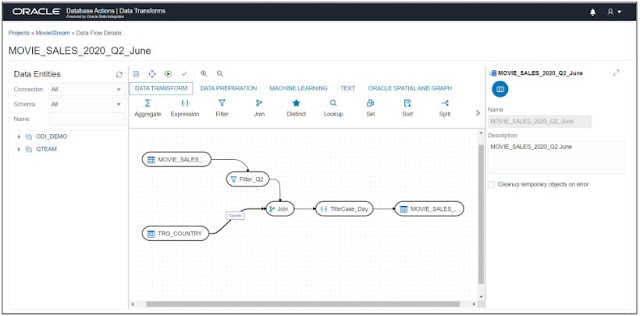

Oracle Analytics Data Flow Cheat Sheet: Making Sense of Large Amounts of Data

1. Data Ingestion

2. Data Preparation

3. Machine Learning

4. Database Analytics includes both Oracle Database Analytics and Graph functions:

Saturday, October 15, 2022

Oracle 1Z0-1077-22 Certification Benefits: Be an Oracle Certified Professional

If you dread taking your Oracle 1Z0-1077-22 exam or your Oracle Order Management Cloud Order to Cash 2022 Implementation Professional certification, you are not alone.

The Oracle Order Management Cloud Order to Cash 2022 Implementation Professional 1Z0-1077-22 exam validates your knowledge of Order Management Cloud projects and your ability to use order entry functionality. Passing this exam opens the path to becoming an Oracle Order Management Cloud Order to Cash Implementation Professional.

Industry-recognized 1Z0-1077-22 certification from Oracle helps you gain a competitive edge over other candidates. Moreover, earning a professional certification improves your employability and demonstrates your capability. You need to study and prepare well for this 1Z0-1077-22 exam, and this guide is undoubtedly your way to crack the 1Z0-1077-22 exam and pass it with flying colors.

What Are the Benefits of Acquiring an Oracle 1Z0-1077-22 Certification?

Oracle is one of the world’s largest enterprise software companies, and it offers certification courses for lucrative careers in the IT domain. The Oracle 1Z0-1077-22 certification is a valid, in-demand credential that is recognized worldwide. With more and more candidates applying for jobs in the IT industry, a certification from Oracle Corporation improves your chances of being recognized and selected by esteemed companies.

In an intensely competitive job market, certification supports career growth: it enhances your productivity, improves your credibility, and serves as a benchmark of proficiency, competence, and experience. An Oracle 1Z0-1077-22 Certification is undoubtedly worth acquiring and would add great value to a professional’s career.

Some of the reasons why you might want to consider pursuing one of these certifications are:

1. Better Employability

Given its widespread acceptance, the Oracle 1Z0-1077-22 Certification would undoubtedly make you more employable. Depending on the certification you obtain, you will become eligible for a variety of job openings. Moreover, the certificate would validate your skills, showing employers that you could be an excellent addition to their team.

2. Development of Skills

An Oracle 1Z0-1077-22 Certification course would give you an in-depth understanding of various concepts regarding the technology stacks. It would not only help you develop a strong foundation in this field, but you would also be able to grow your skills to a great extent. Ultimately, it would make you a much more skilled professional with significant expertise.

3. Growth Opportunities with Oracle 1Z0-1077-22 Certification

Better skills automatically result in higher growth opportunities for professionals. You would be able to progress through your career quickly, earning promotions for high-skill roles. Valuable certifications such as these also increase the chances of securing promotions by setting you apart from your colleagues.

4. Recognition with Oracle 1Z0-1077-22 Certification

Oracle offers a digital badge to candidates successfully obtaining the certifications. You may put these badges on your social media profiles, including those you might use for professional purposes. The badges display the skills you have acquired, thus helping you stand out from the crowd. Ultimately, an Oracle 1Z0-1077-22 Certification would help you gain recognition among the community of IT professionals.

5. Better Remuneration

Developing additional skills and validating them through such certifications make you more valuable to a company. Thus, your employer would be willing to pay you more to keep you as a part of the organization. Pursuing an Oracle 1Z0-1077-22 Certification course can help you earn a better salary and more benefits.

6. A Validation of Soft Skills

The very purpose of a certification is to validate your skills and knowledge on the specified topic. However, in addition to the hard skills, it also validates various soft skills you would need to join leading companies. For instance, when a professional with an Oracle Order Management Cloud Order to Cash Implementation Professional certification applies for a job, the employer would know that the candidate is hard-working and willing to learn.

Conclusion

An Oracle 1Z0-1077-22 Certification Exam evaluates and challenges your technological abilities, thereby enhancing them and improving your prospects for a bright career, job stability, and a high salary. Oracle products, software, and services are highly scalable and are used in large-scale and medium-scale organizations.

Most Oracle products are critical for a business and thus need to be managed and handled only by professionals with the right expertise in the field. Compared with other certifications, Oracle Database-related jobs are plentiful, and people receive a good salary once appointed. Apart from these, there are other technological streams in which Oracle certification proves beneficial.

Friday, October 14, 2022

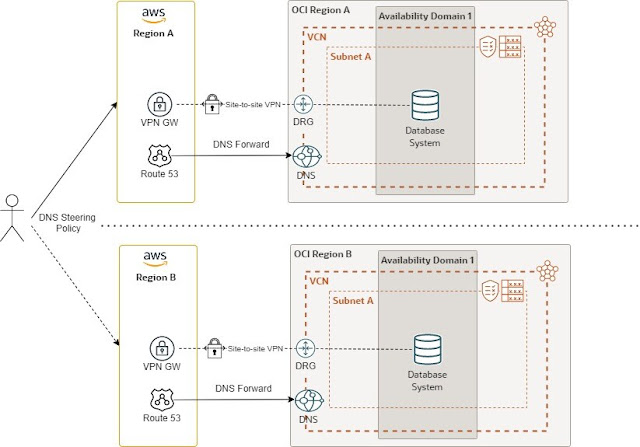

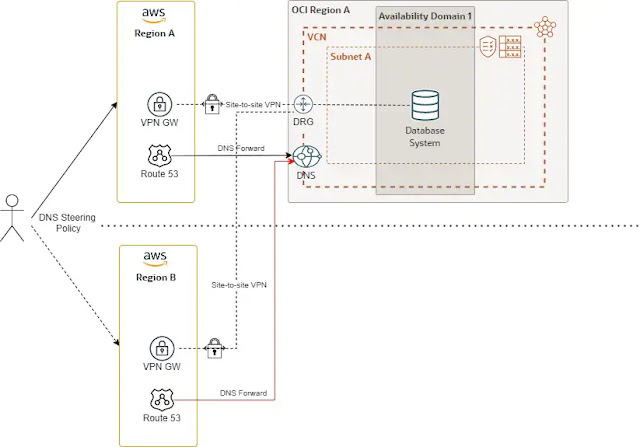

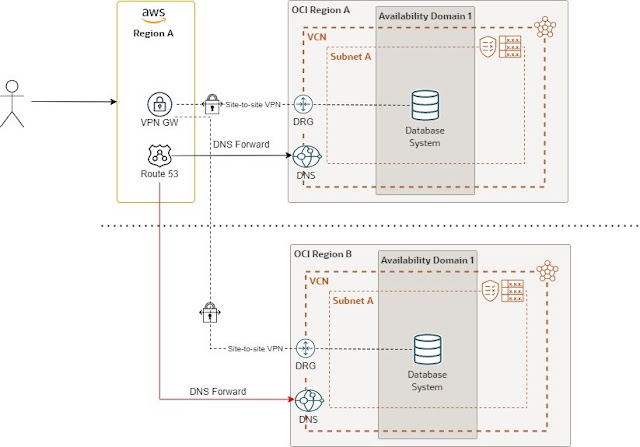

DNS in multicloud disaster recovery architectures

Multicloud DNS architecture

Example multicloud disaster recovery scenario

Wednesday, October 12, 2022

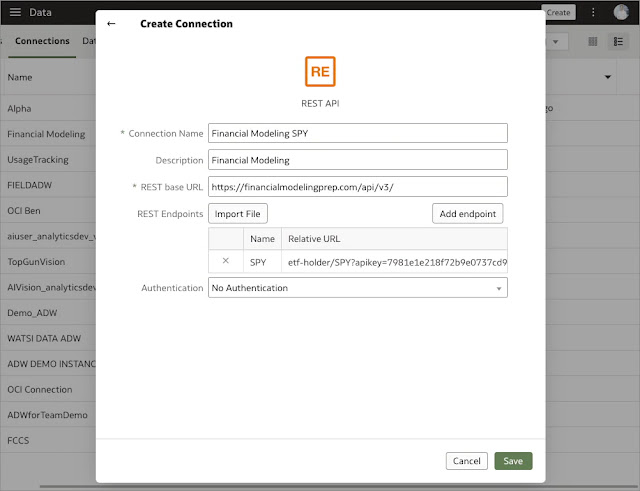

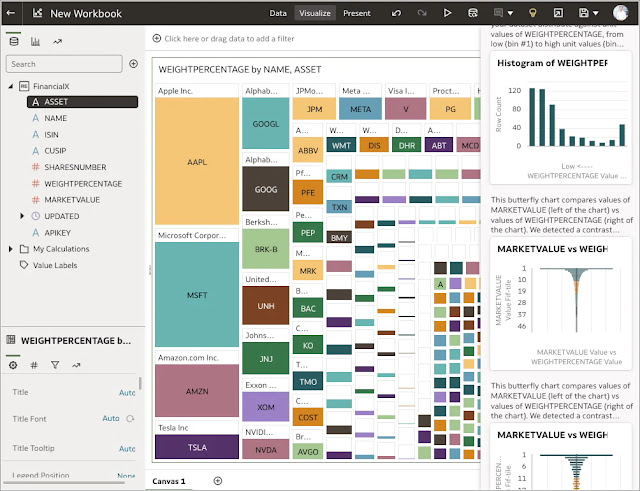

Connect Oracle Analytics to Data Sources with REST APIs

1. What is a REST API?

2. Create a REST API connection

3. Create a dataset

4. Create your workbook

Monday, October 10, 2022

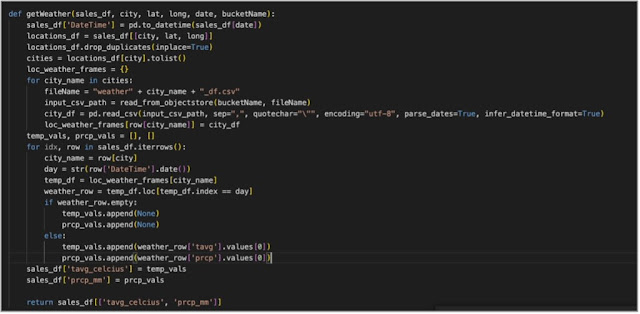

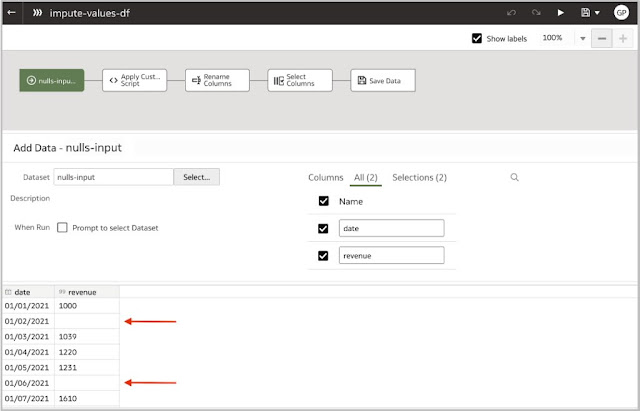

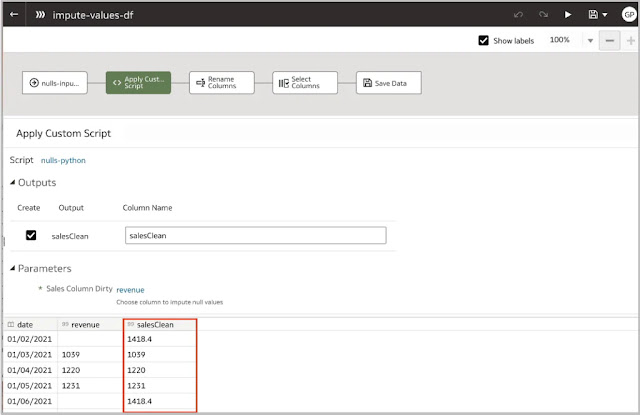

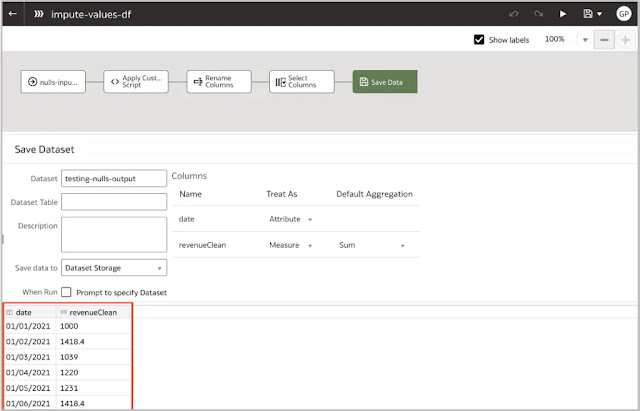

How to leverage custom scripts in your Oracle Analytics Cloud data flow

Custom Script Overview

Custom Script Use Case

Friday, October 7, 2022

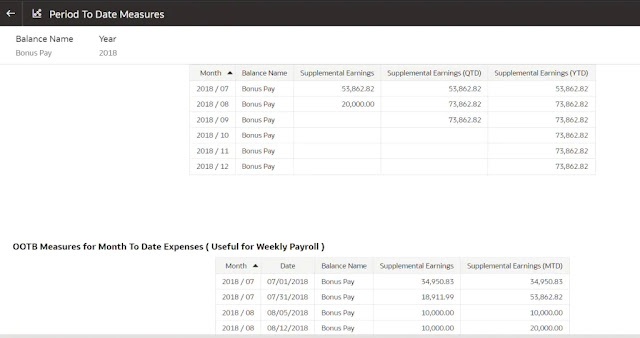

Oracle Fusion Analytics Warehouse makes Payroll Analytics available in Beta

| Property | Value |
| --- | --- |
| Duty Role Name | OA4F_HCM_PAYROLL_ANALYSIS_DUTY |
| Duty Role Code Name | Payroll Analysis Duty |
| OOTB Mapping to Groups | Payroll Administrator, Payroll Manager |
| Data Role | FAW HCM View All Data Role |