This article provides an overview of how machine learning (ML) applications, a new capability of

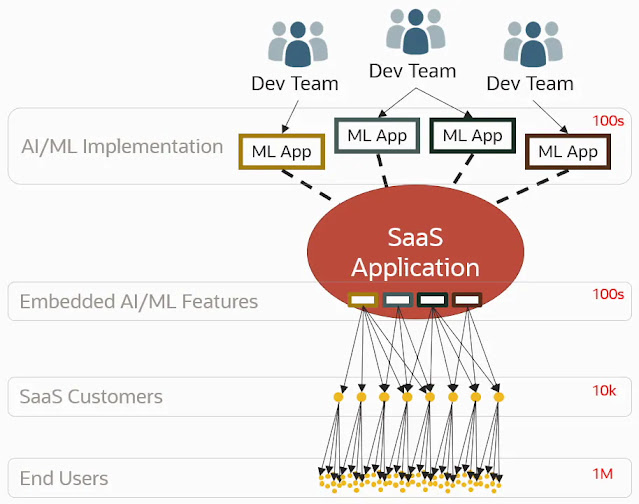

Oracle Cloud Infrastructure (OCI) Data Science, help software-as-a-service (SaaS) organizations industrialize artificial intelligence (AI) and machine learning and embed AI and ML functionality into their product experiences. It discusses our experience and the challenges we had to overcome when we faced the problem of delivering hundreds of AI and ML features to tens of thousands of customers. It describes key capabilities of ML applications that shorten the time-to-market for AI and ML features by standardizing implementation, packaging, and delivery. The article also provides an outlook into other areas beyond SaaS that can benefit from using ML applications.

It has been widely recognized that building an AI and ML solution isn’t the same as building a general software solution. Today, businesses understand that moving from a data science proof-of-concept to production deployment is a challenging discipline.

Many known success stories of AI and ML solution productizations exist, not only among high-tech IT companies but across many industries like healthcare, retail, energy, agriculture, and more. Thanks to AI and ML frameworks, toolkits, and especially services provided by major hyper-scale cloud vendors like Oracle, it is becoming easier to develop, deploy and operate AI and ML solutions. The rate of successful projects is also positively influenced by the fact that companies are adopting practices like MLOps that streamline the ML lifecycle.

However, with SaaS applications, the percentage of successful projects is lower. For example, let’s imagine you want to enrich your SaaS application with a new AI and ML feature. It’s on a completely different level! You need to develop and run thousands of pipelines on thousands of varied customer datasets, training and deploying thousands of models. To avoid this management nightmare, you must automate these tasks as much as possible.

The success of these projects depends on the efforts and knowledge of large, professional teams of experienced software engineers, data engineers, and data scientists. This investment can be expensive for an organization, and not every attempt gets a happy ending. AI and ML projects tend to go over budget in general. According to a McKinsey & Co. survey, the cost of 51% of AI projects was higher than expected when AI high performers were excluded. Another serious issue can be delays. Teams building AI and ML features for SaaS on their own can experience setbacks that extend the project by a year or more.

Some problems and challenges associated with the delivery and operations of AI and ML features for SaaS applications are too complex for every team or organization to address repeatedly. A better strategy relies on ML applications to solve them for you, enabling your development and data science teams to better focus on business problems.

Our experience: Building bespoke solutions for SaaS

At Oracle, we have been delivering market-leading SaaS applications for decades. For years, we have also taken the ML applications approach, working with SaaS teams to help them add new AI and ML capabilities to their SaaS applications. This work enabled us to gain a deep understanding of both the needs of SaaS products and the challenges related to delivering AI and ML features within SaaS applications.

To stay competitive, SaaS organizations like Oracle Fusion Applications or Oracle NetSuite need the ability to introduce new AI and ML use cases as intelligent features for their customers. They need to rapidly develop, deploy, and operate AI and ML features. The development lifecycle needs to be shortened from months to weeks. This goal poses a challenge because of the large number of SaaS customers and the size of SaaS organizations. To give a sense of the scale, with thousands of customers who each have hundreds of AI and ML features, Oracle runs millions of ML pipelines and models in production!

We also must cover the entire lifecycle of AI and ML features. To accomplish this goal, implementing business requirements and debugging, testing, and deploying solutions to production must be simple for data scientists and engineers. Then these organizations must be able to monitor and troubleshoot the production fleet of solution instances provisioned for thousands of customers and used by millions of users.

Taking into consideration the scale of AI and ML development and operations, SaaS organizations need to standardize the implementation and development of AI and ML features to efficiently manage and evolve them.

ML application origins: Adaptive intelligent applications

The roots of ML Applications go back to our work with Adaptive Intelligent Applications (AIA). Under the umbrella of the AIA organization, we have been building several generations of a framework that helps SaaS teams build AI and ML features for SaaS applications. We focused primarily on Fusion applications like enterprise resource planning (ERP), human capital management (HCM), and customer experience (CX). However, other non-Fusion applications and teams were involved even in the early days.

To make a long story short, we helped AIA to move most of their applications to production. If you are a Fusion user, you have likely already interacted with AIA functionality. To find out more about AI Apps for finance, human resources, sales, service, and procurement, visit AI Apps Embedded in Oracle Cloud Applications.

To illustrate, we briefly describe three examples of successfully productized AIA features.

ERP intelligent account combination defaulting

This AIA feature assists the payables function by using AI and ML to create default code combination segments when processing invoices that don’t match purchase orders (POs). Predicting and defaulting code combination segments reduces manual keystroke effort, reduces human errors, saves invoice processing time, and reduces costs.

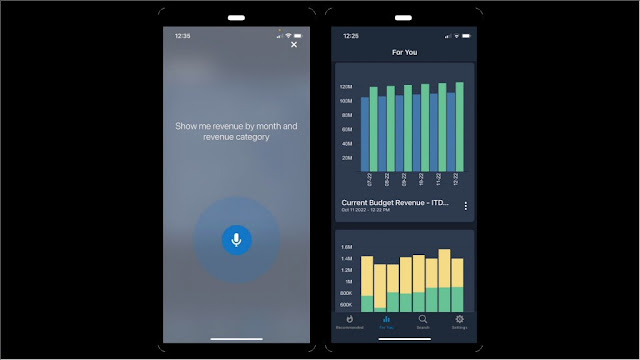

AI-UX suggested actions

News feed suggestions make Oracle customers more productive by recommending important tasks to them in a timely fashion. Fusion apps have a wide range of functionality and knowing what you have access to across those apps at a particular time and how to navigate to important tasks can be challenging.

News Feed Suggestions track the navigation behavior of a user and users like them to make recommendations for the tasks they are most likely to perform at that time. For example, if a group of users historically submits timecards each Friday, they see a suggestion for that task on that day. If tasks related to closing at the end of each month exist, the users with roles associated with those tasks see suggestions for those tasks. With news feed suggestions, Oracle helps your users get to the tasks that matter faster.

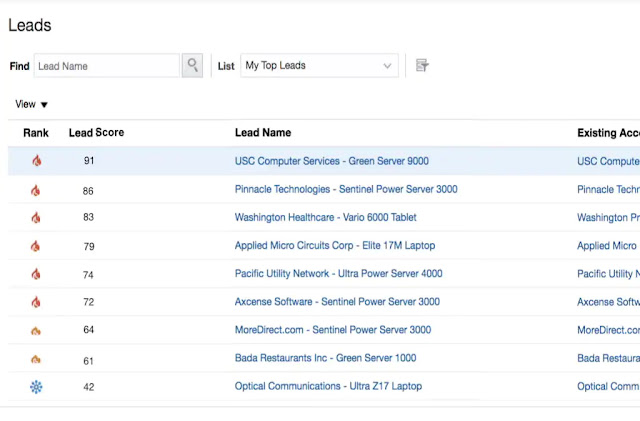

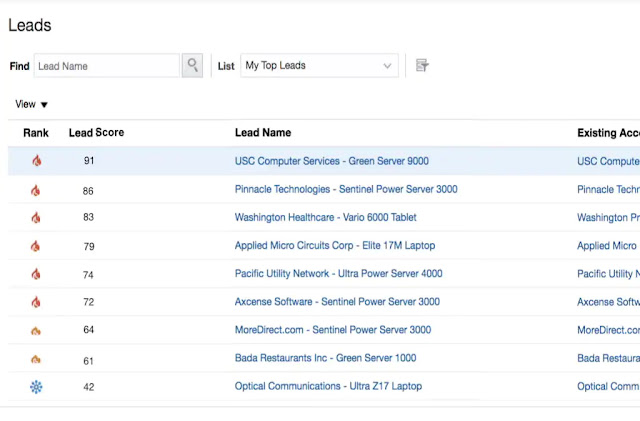

CX sales lead conversion probability

Within CX sales, AI and ML models analyze data on profiles, sales, and the interactions you have with company prospects and customers to create a score to indicate the propensity of each lead to progress to a sale close. This functionality enables sales teams to have more effective lead management with better prioritization, which leads to more incremental sales, more efficient processes through a reduced need to wrangle data, and improved marketing effectiveness and return on investment driven by tighter targeting and personalized content.

ML applications: Basics

ML applications can help SaaS teams address most of the challenges they face when trying to add AI and ML features to their SaaS applications. Let’s look at a few basic properties of ML applications to understand how they work and what benefits they bring.

Provider and consumer roles

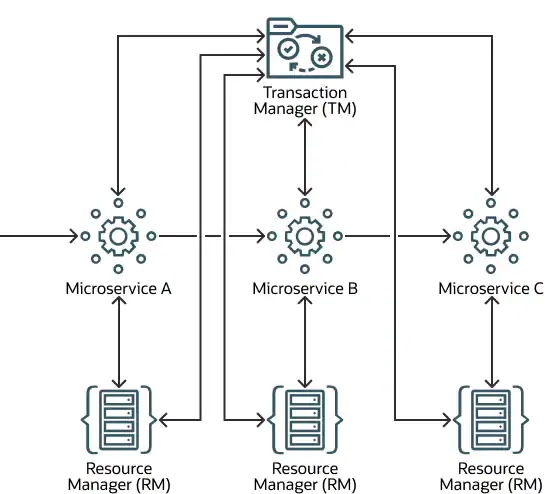

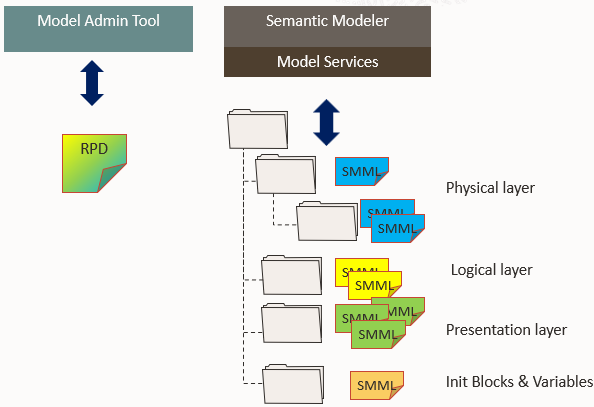

ML applications distinguish between users providing or authoring ML applications (Providers) and the users consuming or using ML applications (Consumers). These roles are even reflected in the Oracle Cloud Console. ML applications provide two UI areas, as shown in the following image.

ML application resource

An ML application resource, or just ML app, is a self-contained representation of an AI and ML use case. It has well-defined boundaries defined by contracts and components that define its implementation.

A typical ML app consists of the following components:

◉ Data pipelines responsible for the preparation of training data

◉ ML pipelines that train and deploy models

◉ Triggers and schedules that define workflows

◉ Storage for the data used by other components

◉ Model deployments that serve predictions

◉ AI services that can be used instead of or in addition to custom pipelines and models

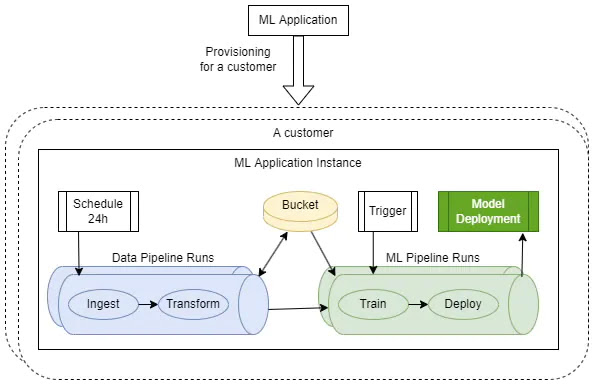

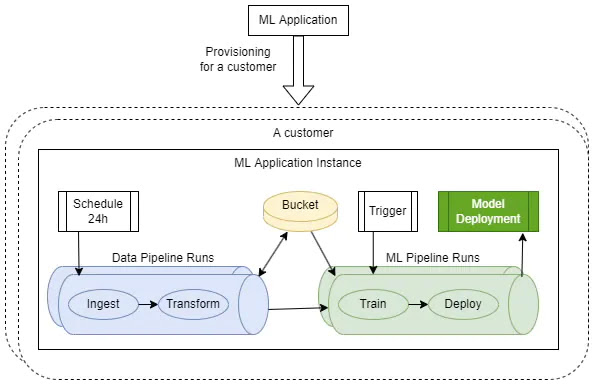

You can think of an ML app as a blueprint that defines how an AI and ML use case is implemented for a customer. This blueprint is used to instantiate a solution for each SaaS customer.

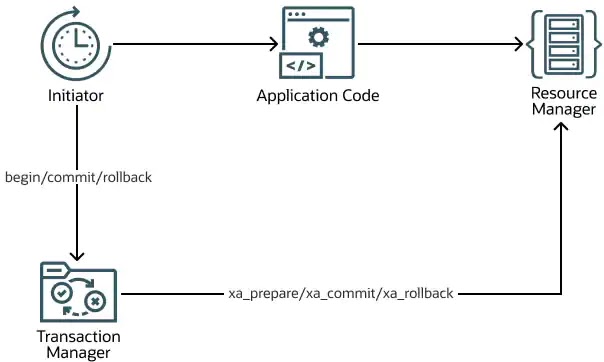

ML application instance resource

An ML application instance resource, or ML app instance, represents an ML app prepared or instantiated for a specific customer. An ML app instance is created for a customer during provisioning, according to the blueprint defined by the ML app for the end-to-end solution. The solution typically trains customer-specific models with customer data sets. SaaS applications then use the prediction services provided by the ML app instances that were created for them.
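The blueprint-and-instance relationship described above can be pictured with a small sketch. It is only an illustration under stated assumptions: the class names `MLApp` and `MLAppInstance`, the `provision` function, the component names, and the customer names are all hypothetical and are not part of any OCI SDK or API.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class MLApp:
    """Hypothetical blueprint: lists the components every instance receives."""
    name: str
    components: tuple


@dataclass
class MLAppInstance:
    """Hypothetical per-customer instantiation of an MLApp blueprint."""
    app: MLApp
    customer: str
    resources: dict = field(default_factory=dict)


def provision(app: MLApp, customer: str) -> MLAppInstance:
    """Create one instance whose resources follow the blueprint."""
    instance = MLAppInstance(app=app, customer=customer)
    for component in app.components:
        # Each customer gets its own isolated copy of every component.
        instance.resources[component] = f"{app.name}/{customer}/{component}"
    return instance


lead_scoring = MLApp(
    name="lead-scoring",
    components=("data_pipeline", "ml_pipeline", "trigger", "bucket", "model_deployment"),
)
instances = [provision(lead_scoring, customer) for customer in ("acme", "globex")]
for inst in instances:
    print(inst.customer, "->", inst.resources["model_deployment"])
```

The point of the sketch is that the blueprint is defined once, while every customer ends up with a separate set of resources derived from it.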

As-a-service delivery across tenancies

A key characteristic and benefit provided by ML applications is the standardization of implementation, packaging, and the delivery of AI and ML use cases, which allows ML application providers to offer prediction services that they implement as-a-service. Consumers can see only the surface of the provided ML apps. They interact with ML apps through defined contracts. They can’t see the implementations.

On the other hand, providers are responsible for the management and operations of the implementations. They ensure that prediction services implemented in the ML apps are up and running. They monitor them, react to outages, and roll out fixes and new updates.

Unlike other OCI services that operate in a single OCI tenancy, ML applications support interactions between consumers and providers across OCI tenancies. Providers and consumers can work independently in their own separate OCI tenancies, which allows for loose coupling between them. This separation is a huge advantage because teams of engineers and data scientists developing ML apps don’t need to ask for access to the tenancy of the SaaS application. This configuration simplifies and improves security for the overall solution. Compute and storage resources used by ML apps also don’t interfere with resource consumption and service limits in the SaaS tenancy.

You might ask how providers can support consumers without access to the SaaS tenancy. ML applications guarantee observability to providers. For every ML app instance created, an ML app instance view resource is created for providers in their tenancies. Instance views mirror ML app instances from consumer tenancies and link all related resources, such as buckets or model deployments. This setup benefits operations and troubleshooting. Providers can easily navigate to the resources used by a specific customer and review monitoring metrics and logs.

Democratization of AI: ML applications and AI services

Although ML applications drastically simplify the development of AI and ML features for SaaS applications, you still need data scientists to build ML pipelines and models for your ML apps.

We found a way to further accelerate the development of AI and ML features. In many cases, you don’t need to develop new pipelines and models and can use a generic AI and ML solution. For example, when you need sentiment analysis, anomaly detection, or forecasting, you can use AI Services. AI Services provide the best-in-class implementation of common AI and ML problems. You can use AI Services to implement the machine learning part of your ML apps.

By adopting AI Services in conjunction with ML applications, you don’t need a large data science department. Instead, your citizen data scientists can enrich your SaaS applications with cutting-edge AI features. Under the hood, ML applications and AI services apply transfer learning and tailor AI and ML models to the specific data sets used by your customers.

Best of all, this shortcut doesn’t close the door to future evolution. You can update your implementation later and provide your own ML pipelines and models if you choose. Thanks to the versioning capabilities, you can roll out even such a substantial change without affecting clients using your initial implementation.

ML applications: Advanced features

Some problems and challenges associated with the delivery and operations of AI and ML features for SaaS applications are too complex for every team or organization to address repeatedly. A better strategy relies on ML applications to solve them for you, enabling your development and data science teams to focus on business problems. The following sections give you insight into these problems and their solutions.

Versioning: Evolving the fleet

Versioning is one of the most important benefits of ML applications for providers. Typically, teams developing AI and ML use cases don’t want to solve this type of problem on their own. They’re aware of or soon realize how complex it can get, and they start looking for tools or services that solve these problems.

When you decide on a custom solution, think through how to support versioning and consider the following questions:

◉ When and how is a new version provisioned for new customers?

◉ How can you roll out a new version to all the customers who have been already using an older version?

◉ How can you update implementations for existing customers when a pipeline is changed? What if the change isn’t backwards compatible?

◉ What if the interface of your prediction service needs to be changed?

◉ What if the change of your prediction service is backwards incompatible?

◉ How do you deal with migrating customers from old to new versions?

◉ How do you migrate without downtime?

◉ What if your SaaS application has been updated only for some customers and your prediction services are receiving different versions of incompatible requests?

You can imagine that engineers must answer hundreds of tough questions.

On the other hand, consider usability and user experience. You don’t want your data scientists to spend days and weeks following a complex change management process, filling in forms, and asking for approvals. Imagine that a data scientist fixes a typo in an ML pipeline. It must be easy to implement the change, test it, and roll it out to production without needless delay.

ML applications allow providers to release changes to production within minutes, independently of the SaaS application release process. At the same time, the strong versioning capabilities of ML applications give providers confidence that validated changes are delivered to customers without them noticing. Providers only need to update the implementation of their ML apps. ML applications ensure that the whole fleet of existing instances used by customers is updated without outages.

Finally, versioning guarantees reproducibility and traceability. Providers always know which version, down to the code revision and line of code, is used by a particular customer in an environment and which changes were applied to the customer’s implementation. Without this feature, troubleshooting problems can be challenging, and you would struggle to answer questions from auditors. For every change ever implemented, you must track who introduced the change and when.
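To make the idea of a traceable fleet update concrete, here is a minimal sketch of a rollout loop that upgrades instances and records who changed what and when. It is an assumption-laden illustration, not how ML applications implement versioning: the `Instance` class, the `roll_out` function, the `validate` callback, and the customer names are all hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Instance:
    """Hypothetical record of one customer's deployed version."""
    customer: str
    version: str
    history: list = field(default_factory=list)  # audit trail of applied changes


def roll_out(fleet, new_version, author, validate):
    """Upgrade every instance that passes validation and log the change."""
    upgraded, skipped = [], []
    for inst in fleet:
        if inst.version == new_version:
            continue  # already up to date, nothing to record
        if not validate(inst, new_version):
            skipped.append(inst.customer)
            continue
        # Record who introduced the change and when, for traceability.
        inst.history.append({
            "from": inst.version,
            "to": new_version,
            "author": author,
            "at": datetime.now(timezone.utc).isoformat(),
        })
        inst.version = new_version
        upgraded.append(inst.customer)
    return upgraded, skipped


fleet = [Instance("acme", "1.0.0"), Instance("globex", "1.0.0"), Instance("initech", "1.1.0")]
held_back = {"globex"}  # assumption: this customer is not yet ready for 1.1.0
upgraded, skipped = roll_out(
    fleet, "1.1.0", author="alice",
    validate=lambda inst, version: inst.customer not in held_back,
)
print("upgraded:", upgraded, "skipped:", skipped)
```

Even in this toy form, the history list is enough to answer which version a customer runs, which change put it there, and who introduced that change.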

Fleet monitoring and management

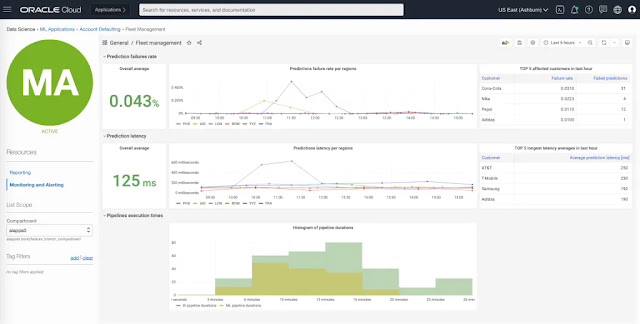

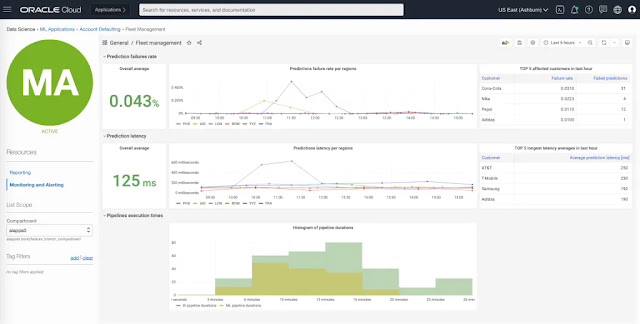

ML Applications are a powerful tool that allows for the deployment of AI and ML functionality at a mass scale. This raises the question of how to monitor and manage a fleet of millions of AI and ML models.

When you have numbers of customers in the low hundreds and a few ML apps, the basic features of ML applications with OCI Monitoring and Logging services can be sufficient for you to monitor and troubleshoot customers’ ML app instances. However, when the adoption grows and you operate dozens of ML apps for thousands of customers, you need more sophisticated tools. The situation usually gets even more complicated because of the necessity to provide the applications in multiple regions and environments.

Different roles in your organization can benefit from an aggregated view that collects information across teams, regions, and environments. Your managers can use fleet monitoring to answer the following questions:

◉ How many customers are affected by a failure or defect?

◉ Are sudden failures of prediction services limited to a region?

◉ How many instances of an ML app are provisioned within a region?

◉ How many ML apps are used by a major customer?
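The questions above boil down to aggregations over per-instance records. The following sketch shows one way to compute them, assuming a hypothetical in-memory list of records with `app`, `customer`, `region`, and `healthy` fields. The record layout, function names, and sample data are invented for illustration and do not reflect the fleet monitoring API.

```python
from collections import Counter

# Hypothetical fleet records: one row per ML app instance.
fleet = [
    {"app": "lead-scoring", "customer": "acme", "region": "us-ashburn-1", "healthy": True},
    {"app": "lead-scoring", "customer": "globex", "region": "eu-frankfurt-1", "healthy": False},
    {"app": "news-feed", "customer": "acme", "region": "us-ashburn-1", "healthy": True},
    {"app": "news-feed", "customer": "globex", "region": "eu-frankfurt-1", "healthy": False},
    {"app": "lead-scoring", "customer": "initech", "region": "eu-frankfurt-1", "healthy": True},
]


def affected_customers(records):
    """Customers with at least one unhealthy instance."""
    return {r["customer"] for r in records if not r["healthy"]}


def failures_by_region(records):
    """Count unhealthy instances per region to see whether failures are regional."""
    return Counter(r["region"] for r in records if not r["healthy"])


def instances_per_region(records, app):
    """Count provisioned instances of one ML app per region."""
    return Counter(r["region"] for r in records if r["app"] == app)


def apps_used_by(records, customer):
    """ML apps that have an instance provisioned for a given customer."""
    return {r["app"] for r in records if r["customer"] == customer}


print(affected_customers(fleet))
print(failures_by_region(fleet))
print(instances_per_region(fleet, "lead-scoring"))
print(apps_used_by(fleet, "acme"))
```

At real fleet sizes, these aggregations would run over metrics collected across teams, regions, and environments instead of an in-memory list.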

Your product managers can analyze trends around customer adoption and usage. They also control the exposure of new features to customers by defining customer segments, such as early adopters. Without aggregated monitoring metrics, they’re flying blind.

Data scientists can get great assistance from fleet monitoring. Typically, data scientists face a difficult problem. They need to generalize their models across diverse data sets that customers have. They need to ensure that the performance metrics of models meet business requirements for most customers, while managing the tradeoffs between accuracy and operational performance and efficiency. Using fleet monitoring, they can evaluate model performance metrics and take corrective actions.

Reusable components and patterns

ML applications reflect the observation that machine learning implementations tend to follow a relatively small number of patterns. We provide a library of common patterns that you can use as a foundation for the implementation of new ML applications. Providers can pick a pattern and build a new ML application on top of the pattern by adding more business logic instead of building the complete end-to-end implementation from scratch. The pattern ensures that the implementation follows best practices, is efficient and scalable, addresses all corner cases, and covers nonfunctional aspects like security and monitoring.

Patterns define extensibility points that allow providers to add customization into the overall end-to-end implementation of the ML application. For example, a pattern can introduce a transformation extensibility point that runs your Spark SQL in the transformation step within a data pipeline that’s part of the pattern’s implementation.
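A rough way to picture an extensibility point is a fixed pipeline skeleton that accepts a provider-supplied transformation step. The sketch below is a simplification under stated assumptions: a plain Python function stands in for the Spark SQL transformation, and `run_pattern`, `ingest_invoices`, `drop_incomplete`, and `train_mean_model` are hypothetical names rather than parts of the pattern library.

```python
from typing import Callable, Iterable

Row = dict
Transform = Callable[[Iterable[Row]], list]


def run_pattern(ingest: Callable[[], Iterable[Row]],
                transform: Transform,
                train: Callable[[list], dict]) -> dict:
    """Fixed skeleton: ingest -> transform (extensibility point) -> train."""
    raw = list(ingest())
    prepared = transform(raw)  # provider-supplied business logic runs here
    if not prepared:
        raise ValueError("transformation produced no training rows")
    return train(prepared)


def ingest_invoices():
    """Stand-in for the ingestion step owned by the pattern."""
    return [
        {"amount": 120.0, "matched": True},
        {"amount": 80.0, "matched": False},
        {"amount": None, "matched": False},
    ]


def drop_incomplete(rows):
    """Provider customization: keep only rows that have an amount."""
    return [r for r in rows if r["amount"] is not None]


def train_mean_model(rows):
    """Toy 'training' step that returns the mean invoice amount."""
    return {"mean_amount": sum(r["amount"] for r in rows) / len(rows), "rows": len(rows)}


model = run_pattern(ingest_invoices, drop_incomplete, train_mean_model)
print(model)
```

The provider writes only `drop_incomplete`. The ingestion, the empty-data guard, and the training call stay in the shared skeleton, which is where a pattern would place its best practices and corner-case handling.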

To see the benefits of this approach, consider a pattern that ingests data from Fusion and serves predictions with a REST endpoint. The pattern implements a data pipeline using OCI Data Integration that incrementally ingests Fusion data and transforms it using OCI Data Flow. Next, an ML pipeline reads the transformed training data, trains a new model, and finally deploys the newly trained model as a model deployment. Then, active monitoring triggers when something unusual happens, such as a data ingestion failure or an out-of-memory error in training. Providers are notified and can fix the problem or take proactive action to avoid failures.

You can imagine how inefficient and error-prone it would be to let every team implement their own ML apps from scratch instead of applying the pattern. Better to let engineers and data scientists focus on their business problems instead of challenging them with questions like, “What if the data connection is interrupted while a data increment is downloaded? Is the ingestion going to resume without data loss? What if the training pipeline fails?”

Another essential feature of ML Applications is that Providers can customize the provided patterns and even build new greenfield patterns that they can reuse across their organization.

Vision of ML applications

ML Applications have already been a game changer for Oracle SaaS organizations for years. Now, as part of OCI Data Science, we’re expanding the core functionality and addressing new business problems so all our customers can take advantage.

Helping OCI customers: Independent software vendors building SaaS applications

The obvious extension to the internal usage of ML applications by Oracle teams is to open this capability to you: OCI customers building your own SaaS applications. You can add AI and ML features to your SaaS applications with the same efficiency as the Oracle SaaS organizations.

These days, it can take several years before teams mature their AI and ML development and manage to integrate AI and ML features into their SaaS applications. Most of those teams understand the challenges and expect to get the required functionality as a service instead of implementing everything from scratch on their own. A typical example of functionality that no one wants to build is versioning. Neither engineers nor data scientists want to think through all the corner cases and define how to introduce backward compatible or even incompatible changes into various parts of their AI and ML implementation. They don’t want to define update strategies for models and services used by SaaS customers.

Similarly, ML applications can help you when you need to roll out AI and ML features for multiple lines of business or geographic locations. For example, financial institutions might want to instantiate ML features with different configurations for AMER, EMEA, and APAC regions. Healthcare providers might need to provide an independent instance of an ML app to each hospital.

SaaS application customization: Bring your own implementation (BYOI)

Large companies using SaaS applications with ML application-based features, such as customers of Fusion ERP, might need to customize the out-of-the-box provided AI and ML capabilities and utilize extra data they have outside of the SaaS application or use their private intellectual property. ML applications are designed to enable those companies to build custom ML applications and replace out-of-the-box available functionality provided by Oracle.

Third-party partners who provide specialized functionality for business verticals can even provide such customizations, as described in the next section.

Beyond SaaS: ML application marketplace

One of the key benefits of ML Applications is the ability to offer prediction services as-a-service to the SaaS applications consuming them. SaaS applications using the prediction services don’t need to worry about their implementations and operations. They simply use the provided functionality. On the other hand, the implementers of prediction services are responsible for the operations of their ML app deployments.

We plan to allow anyone who builds any applications on OCI to benefit from this capability. Anyone can build ML apps on OCI, becoming a provider of ML applications. Provided ML apps can be registered in a marketplace allowing any OCI customer to discover them and use them.

Anyone who needs a specific AI and ML functionality can consume ML applications registered in the marketplace. They can use prediction services designed for a certain business problem without needing to invest in the development of their own machine learning pipelines and models. The marketplace can also assist customers and suggest ML apps that a customer can use with data sets they own.

Source: oracle.com