Search mechanism

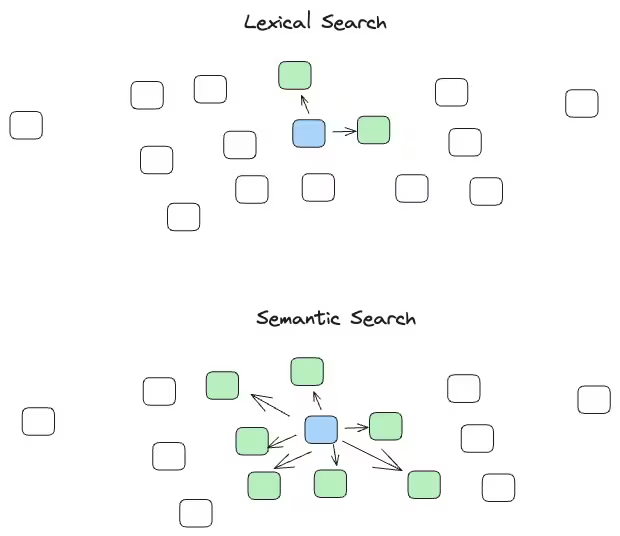

Lexical search and semantic search are two major approaches used in information retrieval and search engines to enhance the accuracy and relevance of search results. Lexical search relies on matching the literal or surface-level representation of words and phrases in the query with the content in the search index. It primarily involves looking for exact matches of keywords without considering the broader meaning or context of the words. Lexical search can be precise but might lead to missed results if the exact terms aren’t present in the document. If a user searches for “apple pie recipes,” a lexical search engine returns results that specifically contain the words “apple,” “pie,” and “recipes.”

Semantic search focuses on understanding the meaning of the query and the context of the information rather than just matching keywords. It uses natural language processing (NLP), machine learning (ML), and other advanced techniques to comprehend the intent behind a user’s query. Semantic search considers synonyms, related concepts, and the relationship between words to provide more contextually relevant results. If a user searches for “healthy recipes,” a semantic search engine might also include recipes that use terms like “nutritious meals” or “wholesome cooking.”

Semantic search aims to understand the meaning behind user queries and provide contextually relevant results, while lexical search relies on literal matching of keywords. Both approaches have their advantages and can be used in combination to improve the overall search experience by considering both the explicit terms and the underlying meaning of the user’s intent.
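To make the lexical side concrete, here is a minimal sketch of literal keyword matching in Python. The `tokenize` and `lexical_match` helpers and the sample documents are illustrative assumptions, not part of any search engine's API; a real engine adds an inverted index, ranking, and text analysis on top of this idea.

```python
import re

def tokenize(text: str) -> set:
    """Lowercase the text and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def lexical_match(query: str, document: str) -> bool:
    """Return True only if every query token literally appears in the document."""
    return tokenize(query) <= tokenize(document)

docs = [
    "Classic apple pie recipes for beginners",
    "Nutritious meals you can cook in 20 minutes",
]

# All three query words are present, so the literal match succeeds.
print(lexical_match("apple pie recipes", docs[0]))  # True
# No shared tokens: a purely lexical engine misses this related document.
print(lexical_match("healthy recipes", docs[1]))    # False
```

The second call shows the gap that semantic search is meant to close: the document is relevant to the query, but no keyword overlaps.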

Strategies to improve search results

Improving search results involves optimizing the search algorithm and user experience to provide more accurate, relevant, and personalized information. You can enhance search results in the following ways:

- Implementing semantic search algorithms that understand the meaning behind words and user intent

- Utilizing NLP to analyze and interpret the context of queries

- Incorporating entity recognition to identify and prioritize key concepts and entities in search queries

- Personalization
  - Customizing search results based on user preferences, search history, and behavior
  - Using ML algorithms to adapt to individual user patterns and provide more relevant recommendations

- Context awareness
  - Considering the user’s context, such as location, device, and previous interactions, to refine search results
  - Incorporating temporal aspects to prioritize recent and time-sensitive information

- Multimodal search
  - Supporting various types of content, including text, images, videos, and audio, and enabling users to search across different modalities
  - Integrating image recognition and speech-to-text capabilities for more comprehensive search functionality

- Feedback mechanisms
  - Implementing user feedback loops to learn from user interactions and continuously improve the relevance of search results
  - Allowing users to provide explicit feedback on the quality of search results

- Combining lexical and semantic approaches
  - Integrating both lexical and semantic search methods to apply the precision of exact matches and the contextual understanding of meaning
  - Balancing the importance of keywords with the broader context of user queries

- Rich snippets and structured data
  - Utilizing structured data to enhance the display of search results with rich snippets, making it easier for users to find relevant information quickly
  - Ensuring that content is marked up using schema.org or similar markup languages to provide search engines with structured information

- Mobile optimization: Optimizing search results for mobile devices by considering mobile-friendly design, loading speed, and user interface elements

- Accessibility: Ensuring that search results are accessible to users with disabilities by following web accessibility guidelines

- Regular updates and maintenance
  - Keeping search algorithms up-to-date with the latest advancements in technology and user expectations
  - Regularly reviewing and refining the search index to remove outdated or irrelevant content

By incorporating these strategies, search engines can enhance the overall search experience and provide users with more accurate and valuable information.

In this blog post, we focus on combining the strengths of lexical (text) search and vector search, an approach that has gained momentum in search systems as a way to improve relevancy and accuracy. By integrating text-based lexical search with vector-based semantic search, we can improve both the latency and the accuracy of our search results.

This post shows how to create a hybrid setup that combines text and vector search to return more comprehensive and accurate results. We use OpenSearch as the search engine and Hugging Face’s SentenceTransformers for generating embeddings. The dataset we chose for this task is the XMarket dataset, described in greater depth in Cross-Market Product Recommendation, where we embed the title field into a vector representation during the indexing process. The data used in this script is public and consists of electronics products.

Dataset preparation

To begin, we index our documents using SentenceTransformers. This library provides pretrained models that produce embeddings for sentences or paragraphs, which serve as distinctive fingerprints for text segments. In the indexing phase, we transform the title field into a vector representation and store it in OpenSearch. You can do this by loading the model and encoding any textual field.

We create an index named “products” by passing the following mapping:

Payload 1

{
  "products": {
    "mappings": {
      "properties": {
        "asin": {
          "type": "keyword"
        },
        "description_vector": {
          "type": "knn_vector",
          "dimension": 384
        },
        "item_image": {
          "type": "keyword"
        },
        "text_field": {
          "type": "text",
          "fields": {
            "keyword_field": {
              "type": "keyword"
            }
          },
          "analyzer": "standard"
        }
      }
    }
  }
}

Let’s define the following parameters:

- asin: The document unique ID taken from the product metadata

- description_vector: Where we store our encoded product title field

- item_image: An image URL of the product

- text_field: The title of the product

We’re using the standard OpenSearch analyzer, which splits the text of a field into individual tokens (roughly one per word) and lowercases them. OpenSearch then scores matches on these tokens with the Okapi BM25 algorithm.
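As a rough illustration of what BM25 does with those tokens, the following sketch scores a few tokenized titles against a query. It is a simplified, self-contained version of the formula that assumes the commonly used defaults k1 = 1.2 and b = 0.75 and a Lucene-style IDF term. The function name `bm25_scores` and the sample titles are hypothetical, and the engine's internal implementation differs in detail.

```python
import math
from collections import Counter

def bm25_scores(query, docs, k1=1.2, b=0.75):
    """Score each whitespace-tokenized document against the query with BM25."""
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    avg_len = sum(len(tokens) for tokens in tokenized) / n_docs
    scores = []
    for tokens in tokenized:
        term_freq = Counter(tokens)
        score = 0.0
        for term in query.lower().split():
            # Document frequency: how many documents contain the term.
            doc_freq = sum(1 for t in tokenized if term in t)
            if doc_freq == 0:
                continue
            idf = math.log(1 + (n_docs - doc_freq + 0.5) / (doc_freq + 0.5))
            # Length normalization penalizes long documents.
            denom = term_freq[term] + k1 * (1 - b + b * len(tokens) / avg_len)
            score += idf * term_freq[term] * (k1 + 1) / denom
        scores.append(score)
    return scores

titles = ["dslr camera with lens kit", "camera bag", "wireless headphones"]
scores = bm25_scores("dslr camera", titles)
for title, score in zip(titles, scores):
    print(f"{score:.3f}  {title}")
```

The title containing both query terms scores highest, the one with a single common term scores lower, and the title with no matching term scores zero, which is why a purely lexical ranking cannot surface documents that share no words with the query.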

We then use the model to encode the title field and create documents, which are loaded into OpenSearch.

Code snippet 1

### Necessary imports ###
import json
import datetime
import numpy as np
from opensearchpy import helpers
from sentence_transformers import SentenceTransformer
from opensearchpy import OpenSearch, RequestsHttpConnection

### Necessary server and index definition ###
SERVER_URL = "http://localhost:9200"
INDEX_NAME = "products"

### Necessary model and file definition ###
METADATA_PATH = "metadata_in_Electronics.json"
model = SentenceTransformer('sentence-transformers/all-MiniLM-L6-v2')


### Normalize Data ###
def normalize_data(data):
    # Scale the embedding to unit L2 norm
    return data / np.linalg.norm(data, ord=2)


### Load Dataset ###
def load_file(file_path):
    json_objects = []
    # The with statement closes the file, so no explicit close() is needed
    with open(file_path, "r") as json_file:
        for line in json_file:
            data = json.loads(line)
            if type(data) is list:
                for element in data:
                    if "imgUrl" in element and type(element["imgUrl"]) is not list:
                        # imgUrl is a JSON-encoded object; keep its first key
                        imgUrl = list(json.loads(element["imgUrl"]).keys())[0]
                        element["imgUrl"] = imgUrl
                        json_objects.append(element)
            elif "imgUrl" in data and type(data["imgUrl"]) is not list:
                imgUrl = list(json.loads(data["imgUrl"]).keys())[0]
                data["imgUrl"] = imgUrl
                json_objects.append(data)
    print("Done")
    return json_objects


### Get Open Search Host Details ###
def get_client(server_url: str) -> OpenSearch:
    # Use the server_url argument rather than the module-level constant
    os_client_instance = OpenSearch(server_url, use_ssl=False, verify_certs=False,
                                    connection_class=RequestsHttpConnection)
    print("OS connected")
    print(datetime.datetime.now())
    return os_client_instance


### Create Index ###
def create_index(index_name: str, os_client: OpenSearch, metadata: list):
    mapping = {
        "mappings": {
            "properties": {
                "asin": {
                    "type": "keyword"
                },
                "description_vector": {
                    "type": "knn_vector",
                    "dimension": get_vector_dimension(metadata),
                },
                "item_image": {
                    "type": "keyword",
                },
                "text_field": {
                    "type": "text",
                    "analyzer": "standard",
                    "fields": {
                        "keyword_field": {
                            "type": "keyword"
                        }
                    }
                }
            }
        },
        "settings": {
            "index": {
                "number_of_shards": "1",
                "knn": "false",
                "number_of_replicas": "0"
            }
        }
    }
    os_client.indices.create(index=index_name, body=mapping)


### Create Vector Dimension ###
def get_vector_dimension(metadata: list):
    # Encode one title to discover the embedding size of the model
    title = metadata[0]["title"]
    embeddings = model.encode(title)
    return len(embeddings)


### Bulk Upload Data to Index ###
def store_index(index_name: str, data: np.array, metadata: list, os_client: OpenSearch):
    documents = []
    for index_num, vector in enumerate(data):
        metadata_line = metadata[index_num]
        text_field = metadata_line["title"]
        embedding = model.encode(text_field)
        norm_text_vector_np = normalize_data(embedding)
        document = {
            "_index": index_name,
            "_id": index_num,
            "asin": metadata_line["asin"],
            "description_vector": norm_text_vector_np.tolist(),
            "item_image": metadata_line["imgUrl"],
            "text_field": text_field
        }
        documents.append(document)
        # Flush every 1,000 documents and again at the last document
        # (the last index is len(data) - 1, not len(data))
        if index_num % 1000 == 0 or index_num == len(data) - 1:
            helpers.bulk(os_client, documents, request_timeout=1800)
            documents = []
            print(f"bulk {index_num} indexed successfully")
            os_client.indices.refresh(index=index_name)
    os_client.indices.refresh(index=index_name)
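The `normalize_data` helper above scales each embedding to unit L2 norm. One practical consequence, sketched below with plain Python lists instead of NumPy arrays, is that the dot product of two normalized vectors equals their cosine similarity. The helper names and the toy three-dimensional vectors are illustrative assumptions; the actual embeddings in this post have 384 dimensions.

```python
import math

def l2_normalize(vec):
    """Scale a vector to unit length; reject the zero vector."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vec]

def dot(a, b):
    """Plain dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def cosine_similarity(a, b):
    """Cosine similarity computed from the raw, unnormalized vectors."""
    norm_a = math.sqrt(dot(a, a))
    norm_b = math.sqrt(dot(b, b))
    return dot(a, b) / (norm_a * norm_b)

a = [3.0, 4.0, 0.0]
b = [4.0, 3.0, 0.0]

unit_a = l2_normalize(a)
unit_b = l2_normalize(b)

# Both values agree: normalizing first turns cosine similarity into a dot product.
print(cosine_similarity(a, b))
print(dot(unit_a, unit_b))
```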

Hybrid search implementation

The strategy involves developing a user interface that accepts input, utilizes the SentenceTransformers model to generate embeddings, and runs a hybrid search. The user is also prompted to specify a boost level, indicating the degree of importance assigned to either text or vector search. This process enables users to prioritize one search type over the other based on their preferences. For example, if a user wants the semantic meaning of their query to carry more weight than the simple textual appearance in the description, they can assign a higher boost to vector search than text search.

Lexical search

We start a text search on the index using OpenSearch’s search method. This method accepts a query string and provides a list of documents that align with the query. The processing of text search in OpenSearch involves sending the following request body:

Payload 2

{
  "size": 20,
  "query": {
    "match": {
      "text_field": "DSLR Camera"
    }
  },
  "_source": ["asin", "text_field", "item_image"]
}
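The request body above can be wrapped in a small helper so that the query text and the result size become parameters. `build_match_query` is a hypothetical name used only for this sketch; the body it returns mirrors Payload 2 field for field.

```python
import json

def build_match_query(query_text, size=20):
    """Build a lexical (BM25) match query against the title field."""
    return {
        "size": size,
        "query": {"match": {"text_field": query_text}},
        # Return only the fields needed to display a result.
        "_source": ["asin", "text_field", "item_image"],
    }

body = build_match_query("DSLR Camera")
print(json.dumps(body, indent=2))
```

With an opensearch-py client, a body like this is passed to the search call in the same way as in the complete code, that is, `os_client.search(body=body, index="products")`.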

Because the ranking score algorithms for text and vector searches differ, we must bring the scores to a common scale before combining results, which means normalizing the score of each document returned by the text search. The maximum BM25 score is the highest score assigned to any document in the collection for a specific query, representing the most relevant match. Its value depends on BM25 formula parameters such as the average document length, term frequency, and inverse document frequency. We therefore take the maximum score that OpenSearch returns for each query and divide the score of every result by it, so the top hit scores exactly 1 and all other scores fall between 0 and 1. The normalization is illustrated in the following functions:

Code snippet 2

def normalize_bm25_formula(score, max_score):
    return score / max_score


def normalize_bm25(bm_results):
    hits = (bm_results["hits"]["hits"])
    max_score = bm_results["hits"]["max_score"]
    # Divide every hit's score by the best score for this query
    for hit in hits:
        hit["_score"] = normalize_bm25_formula(hit["_score"], max_score)
    bm_results["hits"]["max_score"] = hits[0]["_score"]
    bm_results["hits"]["hits"] = hits
    return bm_results
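A quick way to check the normalization is to run it on a hand-built response. The sketch below uses a mock dictionary shaped like a search response (the scores are made up) and adds a guard for the empty-result case, where there is no top hit and `max_score` is typically null. `normalize_scores` is a hypothetical variant of the function above, not part of the original code.

```python
def normalize_scores(results):
    """Divide each hit's score by the maximum score; leave empty results untouched."""
    hits = results["hits"]["hits"]
    max_score = results["hits"]["max_score"]
    if not hits or not max_score:
        # Nothing to normalize (no hits, or a missing/zero max_score).
        return results
    for hit in hits:
        hit["_score"] = hit["_score"] / max_score
    results["hits"]["max_score"] = 1.0
    return results

mock_response = {
    "hits": {
        "max_score": 8.0,
        "hits": [
            {"_id": "1", "_score": 8.0},
            {"_id": "2", "_score": 2.0},
        ],
    }
}

normalized = normalize_scores(mock_response)
print([hit["_score"] for hit in normalized["hits"]["hits"]])  # [1.0, 0.25]

empty_response = {"hits": {"max_score": None, "hits": []}}
print(normalize_scores(empty_response)["hits"]["hits"])  # []
```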

Semantic search

Next, we run a vector search. We encode the query text into an embedding with the same SentenceTransformers model and pass it to OpenSearch, which returns the documents whose stored vectors are most similar to it.

The OpenSearch search query for this process is structured like the following example:

Payload 3

cpu_request_body = {
    "size": 20,
    "query": {
        "script_score": {
            "query": {
                "match_all": {}
            },
            "script": {
                "source": "knn_score",
                "lang": "knn",
                "params": {
                    "field": "description_vector",
                    "query_value": get_vector_sentence_transformers(query).tolist(),
                    "space_type": "cosinesimil"
                }
            }
        }
    },
    "_source": ["asin", "text_field", "item_image"],
}
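The same body can be produced by a helper that takes the query embedding as a plain list, which makes it easy to inspect without a running model. `build_knn_script_query` and `to_cosine` are hypothetical names for this sketch. The `to_cosine` helper rests on an assumption: as far as the k-NN plugin documentation describes it, the `cosinesimil` scoring script returns 1 plus the cosine similarity, which would also explain the "reduce the scores by 1" note in the complete code. Verify this against the OpenSearch version you run.

```python
def build_knn_script_query(query_vector, size=20):
    """Build an exact k-NN query that scores every document with the knn_score script."""
    return {
        "size": size,
        "query": {
            "script_score": {
                "query": {"match_all": {}},
                "script": {
                    "source": "knn_score",
                    "lang": "knn",
                    "params": {
                        "field": "description_vector",
                        "query_value": list(query_vector),
                        "space_type": "cosinesimil",
                    },
                },
            }
        },
        "_source": ["asin", "text_field", "item_image"],
    }

def to_cosine(script_score):
    """Recover the cosine similarity, ASSUMING score = 1 + cosine similarity."""
    return script_score - 1.0

# A toy 3-dimensional vector; the index in this post expects 384 dimensions.
body = build_knn_script_query([0.1, 0.2, 0.3])
print(body["query"]["script_score"]["script"]["params"]["space_type"])
print(to_cosine(1.5))
```

Because the vector scores and the normalized BM25 scores may then live on different ranges, it can be worth bringing both to the same scale before applying boosts.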

Hybridize the outcomes and implement the specific boost

Now, we combine the two search results by interpolating the results so that every document that occurred in both searches appears higher in the hybrid results list. This way, we can take advantage of the strengths of both text and vector search to get the most comprehensive results.

The following function interpolates the results of keyword search and vector search. It returns a dictionary keyed by product ID (asin). A document found by both searches maps to a two-element list holding its vector search score followed by its normalized text search score. A document that appears in only one of the search results maps to a single-element list holding the lowest score found among the common results.

Code snippet 3

def interpolate_results(vector_hits, bm25_hits):
    # gather all product ids
    bm25_ids_list = []
    vector_ids_list = []
    for hit in bm25_hits:
        bm25_ids_list.append(hit["_source"]["asin"])
    for hit in vector_hits:
        vector_ids_list.append(hit["_source"]["asin"])
    # find common product ids
    common_results = set(bm25_ids_list) & set(vector_ids_list)
    results_dictionary = dict((key, []) for key in common_results)
    for common_result in common_results:
        for index, vector_hit in enumerate(vector_hits):
            if vector_hit["_source"]["asin"] == common_result:
                results_dictionary[common_result].append(vector_hit["_score"])
        for index, BM_hit in enumerate(bm25_hits):
            if BM_hit["_source"]["asin"] == common_result:
                results_dictionary[common_result].append(BM_hit["_score"])
    min_value = get_min_score(common_results, results_dictionary)
    # assign minimum value scores for all unique results
    for vector_hit in vector_hits:
        if vector_hit["_source"]["asin"] not in common_results:
            new_scored_element_id = vector_hit["_source"]["asin"]
            results_dictionary[new_scored_element_id] = [min_value]
    for BM_hit in bm25_hits:
        if BM_hit["_source"]["asin"] not in common_results:
            new_scored_element_id = BM_hit["_source"]["asin"]
            results_dictionary[new_scored_element_id] = [min_value]
    return results_dictionary
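The function above relies on `get_min_score`, which appears in the complete code. To see the merging logic on a small input, here is a compact, self-contained sketch that follows the same rules using dictionary lookups. `merge_scores`, `make_hit`, and the toy scores are assumptions made for illustration; the 0.01 fallback matches the value used in the complete code when the two result lists share no documents.

```python
def merge_scores(vector_hits, bm25_hits, fallback_min=0.01):
    """Merge two hit lists into {asin: [vector_score, bm25_score]} or {asin: [min_score]}."""
    vector_scores = {h["_source"]["asin"]: h["_score"] for h in vector_hits}
    bm25_scores = {h["_source"]["asin"]: h["_score"] for h in bm25_hits}

    # Documents returned by both searches keep both scores, vector score first.
    common = vector_scores.keys() & bm25_scores.keys()
    merged = {asin: [vector_scores[asin], bm25_scores[asin]] for asin in common}

    # Lowest score among the common documents, or a small constant if there are none.
    min_value = min(min(pair) for pair in merged.values()) if merged else fallback_min

    # Documents returned by only one search receive that minimum as their single score.
    for asin in (vector_scores.keys() | bm25_scores.keys()) - common:
        merged[asin] = [min_value]
    return merged

def make_hit(asin, score):
    """Build a minimal hit in the shape the search response uses."""
    return {"_source": {"asin": asin}, "_score": score}

vector_hits = [make_hit("A", 1.8), make_hit("B", 1.6)]
bm25_hits = [make_hit("A", 1.0), make_hit("C", 0.7)]

merged = merge_scores(vector_hits, bm25_hits)
print(merged["A"], merged["B"], merged["C"])  # [1.8, 1.0] [1.0] [1.0]
```

Note that a document found by only one search loses its own score under this rule, which is what pushes documents found by both searches toward the top.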

The result is a dictionary in which the document ID is the key and a list of scores is the value. For documents found by both searches, the first element is the vector search score and the second is the normalized text search score; documents found by only one search carry a single score. To conclude, we apply the boosts: iterating through the results, we multiply the first element by the vector boost level and the second element by the text boost level, and add the two. When only one score is present, that score is used for both terms. Finally, we sort the documents by the combined score in descending order.

Code snippet 4

def apply_boost(combined_results, vector_boost_level, bm25_boost_level):
    for element in combined_results:
        if len(combined_results[element]) == 1:
            combined_results[element] = combined_results[element][0] * vector_boost_level + \
                                        combined_results[element][0] * bm25_boost_level
        else:
            combined_results[element] = combined_results[element][0] * vector_boost_level + \
                                        combined_results[element][1] * bm25_boost_level
    # sort the results based on the new scores
    sorted_results = [k for k, v in sorted(combined_results.items(), key=lambda item: item[1], reverse=True)]
    return sorted_results
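A small worked example helps show how the boost levels change the ranking. The sketch below applies the same weighted sum to made-up scores; `boost_and_rank` is a hypothetical variant that, unlike `apply_boost`, leaves its input untouched and also returns the combined scores so they can be inspected.

```python
def boost_and_rank(merged, vector_boost, bm25_boost):
    """Combine per-document scores with a weighted sum and rank by the result."""
    final = {}
    for doc_id, scores in merged.items():
        vector_score = scores[0]
        # A single-score document uses that score for both terms.
        bm25_score = scores[1] if len(scores) > 1 else scores[0]
        final[doc_id] = vector_score * vector_boost + bm25_score * bm25_boost
    ranked = sorted(final, key=final.get, reverse=True)
    return ranked, final

# Made-up scores: [vector_score, bm25_score] or [min_score].
merged = {"A": [0.9, 1.0], "B": [0.4], "C": [0.8, 0.2]}

balanced, balanced_scores = boost_and_rank(merged, 0.5, 0.5)
print(balanced)      # ['A', 'C', 'B']

text_heavy, _ = boost_and_rank(merged, 0.2, 0.8)
print(text_heavy)    # ['A', 'B', 'C']
```

With equal boosts, document C outranks B thanks to its strong vector score; shifting the weight toward text search drops C below B because its BM25 score is weak.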

Complete code snippet

import numpy as np
from sentence_transformers import SentenceTransformer
from opensearchpy import OpenSearch, RequestsHttpConnection

SERVER_URL = "http://localhost:9200"
INDEX_NAME = "products"
model = SentenceTransformer('sentence-transformers/all-MiniLM-L6-v2')

def normalize_bm25_formula(score, max_score):
    return score / max_score


def normalize_bm25(bm_results):
    hits = (bm_results["hits"]["hits"])
    max_score = bm_results["hits"]["max_score"]
    # Divide every hit's score by the best score for this query
    for hit in hits:
        hit["_score"] = normalize_bm25_formula(hit["_score"], max_score)
    bm_results["hits"]["max_score"] = hits[0]["_score"]
    bm_results["hits"]["hits"] = hits
    return bm_results

def run_queries(os_client):
    while True:
        query = input("Enter your search query (or 'exit' to quit): ")
        # Check for exit before asking for the boost levels
        if query == "exit":
            break
        vector_boost_level = float(input("Enter how much vector search boost you want to apply: "))
        bm25_boost_level = float(input("Enter how much keyword search boost you want to apply: "))
        # Encode the query once and reuse the embedding in both request bodies
        query_vector = get_vector_sentence_transformers(query).tolist()
        # Alternative request body, not used below; the gsi_knn query type
        # is only available if a plugin that provides it is installed
        apu_request_body = {
            "size": 20,
            "query": {
                "gsi_knn": {
                    "field": "description_vector",
                    "vector": query_vector,
                }
            },
            "_source": ["asin", "text_field", "item_image"],
        }
        # CPU request body (reduce the scores by 1 when using cpu)
        cpu_request_body = {
            "size": 20,
            "query": {
                "script_score": {
                    "query": {
                        "match_all": {}
                    },
                    "script": {
                        "source": "knn_score",
                        "lang": "knn",
                        "params": {
                            "field": "description_vector",
                            "query_value": query_vector,
                            "space_type": "cosinesimil"
                        }
                    }
                }
            },
            "_source": ["asin", "text_field", "item_image"],
        }
        bm25_query = {
            "size": 20,
            "query": {
                "match": {
                    "text_field": query
                }
            },
            "_source": ["asin", "text_field", "item_image"],
        }
        vector_search_results = os_client.search(body=cpu_request_body, index=INDEX_NAME)
        print("vector_search_results")
        print(vector_search_results)
        bm25_results = os_client.search(body=bm25_query, index=INDEX_NAME)
        bm25_results = normalize_bm25(bm25_results)
        combined_results = interpolate_results(vector_search_results["hits"]["hits"],
                                               bm25_results["hits"]["hits"])
        sorted_elements = apply_boost(combined_results, vector_boost_level, bm25_boost_level)
        result_data_dictionary = extract_results_data(vector_search_results["hits"]["hits"],
                                                      bm25_results["hits"]["hits"])
        construct_response(result_data_dictionary, sorted_elements)

def extract_results_data(vector_data, bm25_data):
    # Map each product id to the data needed to display it
    result_data_dictionary = {}
    for vector_hit in vector_data:
        product_id = vector_hit["_source"]["asin"]
        img_url = vector_hit["_source"]["item_image"]
        text_description = vector_hit["_source"]["text_field"]
        result_data_dictionary[product_id] = [img_url, text_description]
    for bm25_hit in bm25_data:
        product_id = bm25_hit["_source"]["asin"]
        img_url = bm25_hit["_source"]["item_image"]
        text_description = bm25_hit["_source"]["text_field"]
        result_data_dictionary[product_id] = [img_url, text_description]
    return result_data_dictionary


def construct_response(result_data_dictionary, sorted_elements):
    for index, sorted_element in enumerate(sorted_elements):
        print(index + 1, result_data_dictionary[sorted_element])


def get_vector_sentence_transformers(text_input):
    return model.encode(text_input)


def normalize_data(data):
    return data / np.linalg.norm(data, ord=2)


def get_client(server_url: str) -> OpenSearch:
    # Use the server_url argument rather than the module-level constant
    os_instance = OpenSearch(server_url, use_ssl=False, verify_certs=False,
                             connection_class=RequestsHttpConnection)
    # print("OS connected")
    return os_instance


def get_min_score(common_elements, elements_dictionary):
    if len(common_elements):
        return min([min(v) for v in elements_dictionary.values()])
    else:
        # No common results - assign arbitrary minimum score value
        return 0.01

def interpolate_results(vector_hits, bm25_hits):
    # gather all product ids
    bm25_ids_list = []
    vector_ids_list = []
    for hit in bm25_hits:
        bm25_ids_list.append(hit["_source"]["asin"])
    for hit in vector_hits:
        vector_ids_list.append(hit["_source"]["asin"])
    # find common product ids
    common_results = set(bm25_ids_list) & set(vector_ids_list)
    results_dictionary = dict((key, []) for key in common_results)
    for common_result in common_results:
        for index, vector_hit in enumerate(vector_hits):
            if vector_hit["_source"]["asin"] == common_result:
                results_dictionary[common_result].append(vector_hit["_score"])
        for index, BM_hit in enumerate(bm25_hits):
            if BM_hit["_source"]["asin"] == common_result:
                results_dictionary[common_result].append(BM_hit["_score"])
    min_value = get_min_score(common_results, results_dictionary)
    # assign minimum value scores for all unique results
    for vector_hit in vector_hits:
        if vector_hit["_source"]["asin"] not in common_results:
            new_scored_element_id = vector_hit["_source"]["asin"]
            results_dictionary[new_scored_element_id] = [min_value]
    for BM_hit in bm25_hits:
        if BM_hit["_source"]["asin"] not in common_results:
            new_scored_element_id = BM_hit["_source"]["asin"]
            results_dictionary[new_scored_element_id] = [min_value]
    return results_dictionary


def apply_boost(combined_results, vector_boost_level, bm25_boost_level):
    for element in combined_results:
        if len(combined_results[element]) == 1:
            combined_results[element] = combined_results[element][0] * vector_boost_level + \
                                        combined_results[element][0] * bm25_boost_level
        else:
            combined_results[element] = combined_results[element][0] * vector_boost_level + \
                                        combined_results[element][1] * bm25_boost_level
    # sort the results based on the new scores
    sorted_results = [k for k, v in sorted(combined_results.items(), key=lambda item: item[1], reverse=True)]
    return sorted_results

os_client = get_client(SERVER_URL)

run_queries(os_client)

Results outcome

We searched for “headphones” with a 0.5 boost for vector search and a 0.5 boost for text search. The pure lexical search returned the following top four results:

- B&O Play 1108426 Ear Set 3i Headphones with Mic (Black)

- Compact Ball Head W/Rc2

- Benro S4 Video Head (Black)

- GoPro Head Strap and Quick Clip

Apart from the first one, the results aren’t very accurate where user experience is concerned. The pure semantic search returned the following results:

- Wireless Headphone, Rowkin Mini Sports Bluetooth Earbud Headset with Built-in Mic and Portable Charging Case

- JVC Riptidz HA-S200-B On-the-ear Headphone

- Parasom Headphones (Black)

- Betron HD in-ear, Noise Isolating, Heavy Deep Base Headphone for iPhone, iPod, iPad, MP3 Players, Samsung Galaxy, Nokia, HTC

The results are quite accurate for user experience. All the results are headphones, not accessories.

For hybrid search with 0.5 boost for vector search and a 0.5 boost for text search, we got the following top four results:

- Wireless Headphone, Rowkin Mini Sports Bluetooth Earbud Headset with Built-in Mic and Portable Charging Case

- JVC Riptidz HA-S200-B On-the-ear Headphone

- Parasom Headphones (Black)

- Betron HD in-ear, Noise Isolating, Heavy Deep Base Headphone for iPhone, iPod, iPad, MP3 Players, Samsung Galaxy, Nokia, HTC

The results are accurate where user experience is concerned: all of them are headphones rather than accessories. More importantly, at least the top four results are exactly the same as those of the pure semantic search, even though the boost was the same for the text-based and semantic-based algorithms.

Source: oracle.com
