◉ VARCHAR2 : 4000 bytes

◉ NVARCHAR2 : 4000 bytes

◉ RAW : 2000 bytes

With the introduction of Extended Data Types, Oracle 12c optionally increases these maximum sizes.

◉ VARCHAR2 : 32767 bytes

◉ NVARCHAR2 : 32767 bytes

◉ RAW : 32767 bytes

Remember, these figures are in bytes, not characters. The total number of characters that can be stored will depend on the character sets being used.

◉ 12cR2 Update

Prior to the on-prem release, people were suggesting extended data types would be the default in Oracle 12.2. The Database Cloud Service on the Oracle Public Cloud has this feature turned on by default, which added some weight to this suggestion. The on-prem release of Oracle 12.2 does not have extended data types enabled by default.

Extended data types are necessary if you want to use the column-level collation feature available in 12.2 onward.

◉ Enabling Extended Data Types in a non-CDB

The extended data types functionality is controlled using the MAX_STRING_SIZE initialization parameter. The default value is STANDARD, which restricts the maximum sizes to the traditional lengths. Setting the parameter value to EXTENDED allows for the new maximum lengths.

The process of switching to the extended data types is a one-way operation. Once you switch to extended data types you can't switch back without some form of database recovery. In addition to changing the parameter, you must run the "utl32k.sql" script to invalidate and recompile any objects that may be affected by the maximum length changes. An example of the commands required to enable extended data types in a single instance non-CDB database are shown below.

A commenter (Hristiyan) also suggested running PURGE DBA_RECYCLEBIN before proceeding. I've never done this and I've not had any issues as a result of it, but it's probably a good idea.

CONN / AS SYSDBA

-- Precaution to prevent possible failures. Suggested by Hristiyan.

PURGE DBA_RECYCLEBIN

SHUTDOWN IMMEDIATE;

STARTUP UPGRADE;

ALTER SYSTEM SET max_string_size=extended;

@?/rdbms/admin/utl32k.sql

SHUTDOWN IMMEDIATE;

STARTUP;

The MAX_STRING_SIZE documentation includes the procedure for other types of database, including:

◉ Pluggable Databases (PDBs)

◉ RAC Databases

◉ Oracle Data Guard Logical Standby Database

The "utl32k.sql" script produces output that looks something like this.

Session altered.

DOC>################################################

DOC>################################################

DOC> The following statement will cause an "ORA-01722: invalid number"

DOC> error if the database has not been opened for UPGRADE.

DOC>

DOC> Perform a "SHUTDOWN ABORT" and

DOC> restart using UPGRADE.

DOC>###############################################

DOC>###############################################

DOC>#

no rows selected

DOC>################################################

DOC>################################################

DOC> The following statement will cause an "ORA-01722: invalid number"

DOC> error if the database does not have compatible >= 12.0.0

DOC>

DOC> Set compatible >= 12.0.0 and retry.

DOC>#################################################

DOC>#################################################

DOC>#

PL/SQL procedure successfully completed.

Session altered.

0 rows updated.

Commit complete.

System altered.

PL/SQL procedure successfully completed.

Commit complete.

System altered.

Session altered.

PL/SQL procedure successfully completed.

No errors.

Session altered.

PL/SQL procedure successfully completed.

Commit complete.

Package altered.

TIMESTAMP

--------------------------------------------------------------------------------

COMP_TIMESTAMP UTLRP_BGN 2013-07-10 10:11:26

DOC> The following PL/SQL block invokes UTL_RECOMP to recompile invalid

DOC> objects in the database. Recompilation time is proportional to the

DOC> number of invalid objects in the database, so this command may take

DOC> a long time to execute on a database with a large number of invalid

DOC> objects.

DOC>

DOC> Use the following queries to track recompilation progress:

DOC>

DOC> 1. Query returning the number of invalid objects remaining. This

DOC> number should decrease with time.

DOC> SELECT COUNT(*) FROM obj$ WHERE status IN (4, 5, 6);

DOC>

DOC> 2. Query returning the number of objects compiled so far. This number

DOC> should increase with time.

DOC> SELECT COUNT(*) FROM UTL_RECOMP_COMPILED;

DOC>

DOC> This script automatically chooses serial or parallel recompilation

DOC> based on the number of CPUs available (parameter cpu_count) multiplied

DOC> by the number of threads per CPU (parameter parallel_threads_per_cpu).

DOC> On RAC, this number is added across all RAC nodes.

DOC>

DOC> UTL_RECOMP uses DBMS_SCHEDULER to create jobs for parallel

DOC> recompilation. Jobs are created without instance affinity so that they

DOC> can migrate across RAC nodes. Use the following queries to verify

DOC> whether UTL_RECOMP jobs are being created and run correctly:

DOC>

DOC> 1. Query showing jobs created by UTL_RECOMP

DOC> SELECT job_name FROM dba_scheduler_jobs

DOC> WHERE job_name like 'UTL_RECOMP_SLAVE_%';

DOC>

DOC> 2. Query showing UTL_RECOMP jobs that are running

DOC> SELECT job_name FROM dba_scheduler_running_jobs

DOC> WHERE job_name like 'UTL_RECOMP_SLAVE_%';

DOC>#

PL/SQL procedure successfully completed.

TIMESTAMP

--------------------------------------------------------------------------------

COMP_TIMESTAMP UTLRP_END 2013-07-10 10:11:33

DOC> The following query reports the number of objects that have compiled

DOC> with errors.

DOC>

DOC> If the number is higher than expected, please examine the error

DOC> messages reported with each object (using SHOW ERRORS) to see if they

DOC> point to system misconfiguration or resource constraints that must be

DOC> fixed before attempting to recompile these objects.

DOC>#

OBJECTS WITH ERRORS

-------------------

0

DOC> The following query reports the number of errors caught during

DOC> recompilation. If this number is non-zero, please query the error

DOC> messages in the table UTL_RECOMP_ERRORS to see if any of these errors

DOC> are due to misconfiguration or resource constraints that must be

DOC> fixed before objects can compile successfully.

DOC>#

ERRORS DURING RECOMPILATION

---------------------------

0

Function created.

PL/SQL procedure successfully completed.

Function dropped.

...Database user "SYS", database schema "APEX_040200", user# "98" 11:26:30

...Compiled 0 out of 2998 objects considered, 0 failed compilation 11:26:31

...263 packages

...255 package bodies

...453 tables

...11 functions

...16 procedures

...3 sequences

...458 triggers

...1322 indexes

...207 views

...0 libraries

...6 types

...0 type bodies

...0 operators

...0 index types

...Begin key object existence check 11:26:31

...Completed key object existence check 11:26:31

...Setting DBMS Registry 11:26:31

...Setting DBMS Registry Complete 11:26:31

...Exiting validate 11:26:31

PL/SQL procedure successfully completed.

SQL>

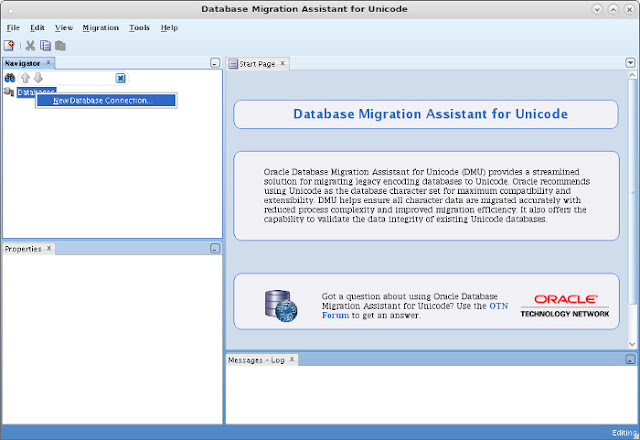

◉ Enabling Extended Data Types in a PDB

The process of enabling extended data types is similar for pluggable databases, but you have to remember to perform the change on the root container and all pluggable databases.

Prepare the root container and all pluggable databases so we can run the "utl32k.sql" script in them.

CONN / AS SYSDBA

ALTER SYSTEM SET max_string_size=extended SCOPE=SPFILE;

SHUTDOWN IMMEDIATE;

STARTUP UPGRADE;

ALTER PLUGGABLE DATABASE ALL OPEN UPGRADE;

EXIT;

A commenter (Hristiyan) also suggested running PURGE DBA_RECYCLEBIN before proceeding. I've never done this and I've not had any issues as a result of it, but it's probably a good idea. Remember to run it in all containers.

Run the "utl32k.sql" script using "catcon.pl", so the change is made to the root container and all the pluggable databases.

$ cd $ORACLE_HOME/rdbms/admin/

$ $ORACLE_HOME/perl/bin/perl catcon.pl -d $ORACLE_HOME/rdbms/admin -l /tmp -b utl32k_output utl32k.sql

Check the output in the log files to make sure the scripts ran correctly.

$ ls /tmp/utl32k_output*

/tmp/utl32k_output0.log /tmp/utl32k_output3.log

/tmp/utl32k_output1.log /tmp/utl32k_output_catcon_4581.lst

/tmp/utl32k_output2.log /tmp/utl32k_output_catcon_4740.lst

$

Restart the database to complete the process.

CONN / AS SYSDBA

SHUTDOWN IMMEDIATE;

STARTUP;

◉ Using Extended Data Types

As stated previously, the maximum sizes are quoted in bytes, so database columns defined using character semantics have differing maximum sizes dependent on the character set used. Remember, NVARCHAR2 is always defined using character semantics.

With extended data types enabled we can see the change to the standard behaviour.

DROP TABLE t1 PURGE;

CREATE TABLE t1 (

id NUMBER,

varchar2_data VARCHAR2(32767),

nvarchar2_data NVARCHAR2(16383),

raw_data RAW(32767)

);

Table created.

SQL>

The following code inserts some maximum size data into the table and queries the lengths of the data.

INSERT INTO t1

SELECT 1,

RPAD('X', 32767, 'X') AS varchar2_data,

RPAD('X', 16383, 'X') AS nvarchar2_data,

UTL_RAW.cast_to_raw(RPAD('X', 32767, 'X')) as raw_data

FROM dual;

SELECT id,

LENGTH(varchar2_data),

LENGTH(nvarchar2_data),

LENGTH(raw_data)

FROM t1;

ID LENGTH(VARCHAR2_DATA) LENGTH(NVARCHAR2_DATA) LENGTH(RAW_DATA)

---------- --------------------- ---------------------- ----------------

1 32767 16383 32767

1 row selected.

SQL>

◉ Implicit LOBs

If we look at the contents of our schema, we can see that our extended data types are really just a veneer hiding LOB processing. Each of the large columns has an associated LOB segment and LOB index.

COLUMN object_name FORMAT A40

SELECT object_type, object_name

FROM user_objects

ORDER BY object_type, object_name;

OBJECT_TYPE OBJECT_NAME

-------------------- ----------------------------------------

INDEX SYS_IL0000092349C00002$$

INDEX SYS_IL0000092349C00003$$

INDEX SYS_IL0000092349C00004$$

LOB SYS_LOB0000092349C00002$$

LOB SYS_LOB0000092349C00003$$

LOB SYS_LOB0000092349C00004$$

TABLE T1

7 rows selected.

SQL>

These implicit LOBs come with a number of restrictions beyond those seen with conventional LOBs.

◉ VARCHAR2 and NVARCHAR2 columns with a declared size greater than 4000 bytes are considered extended data types.

◉ RAW columns with a declared size greater than 2000 bytes are considered extended data types.

◉ All extended data types (see the previous two definitions) are stored out-of-line in LOB segments.

◉ You have no manual control over these LOB segments. Their management is totally internal.

◉ The LOB segments will always be stored in the same tablespace as the associated table.

◉ LOBs stored in Automatic Segment Space Management (ASSM) tablespaces are stored as SecureFiles. Those stored in non-ASSM tablespaces are stored as BasicFiles.

◉ All normal LOB restrictions apply.

◉ Thoughts

I think it is worth considering this feature if you are involved in a migration project and don't want to re-factor code to switch to conventional LOB processing, but I don't feel it should be used in a conventional Oracle project.

If you do decide to use this feature in an existing project, you need to do some serious testing before you commit yourself.

◉ Bugs

Petar Spasov mentioned the following bug in the comments related to one of data pump AQ tables (KUPC$DATAPUMP_QUETAB*), which prevented him for using extended data types until the bug was patched.

UTL32K.SQL FAILING WITH ORA-24005 ON AQ QUEUE TABLE (Doc ID 2092248.1)

As mentioned previously, such a large scale change needs to be tested in your system. Don't just assume everything will be fine.

Jwaleet mentioned getting a "ORA-01441: cannot decrease column length because some value is too big" error when trying to enable extended data types. The resolution was to drop a materialized view to allow the database to function. Presumably they rebuilt it after the database was working again.