Oracle launches GoldenGate data integration service on Oracle Cloud Infrastructure

Oracle is reinventing its GoldenGate real-time replication capability as a managed cloud service on Oracle Cloud Infrastructure (OCI), designed to provide event-based integration of operational and analytic databases regardless of where they run.

Oracle has taken another step in rounding out its cloud managed services portfolio by adding its GoldenGate data integration service to the mix. The OCI GoldenGate service is a serverless offering that runs on the Oracle Cloud, providing an event-based approach to integrating operational and analytic databases between on-premises data centers and any public, private, or hybrid clouds.

GoldenGate has typically been employed with change-data-capture feeds to keep different instances of databases in sync across data centers or geographic locations, often for disaster recovery with remote hot sites. While Oracle still supports that use case, the managed cloud service broadens the focus to integrating data across on-premises systems and one (or more) public clouds under a common umbrella.

Since its acquisition by Oracle back in 2009, GoldenGate has largely existed outside the limelight; although offered as a standalone product, it was also a component of Oracle’s broader Data Integration suite and Maximum Availability Architecture (MAA). Part of the reason for the lack of recognition is that, unlike many Oracle acquisitions, GoldenGate kept the brand name of the formerly independent company, and unlike Oracle products such as the Zero Data Loss Recovery Appliance, its branding is not exactly evocative of its function.

Even in the background, GoldenGate built a fairly substantial installed base, counting over 80% of the Fortune Global 100 among its customers. It was designed to provide a real-time alternative to the batch-oriented data extraction and movement tools of the time. And although Oracle has owned GoldenGate for over a decade, the tool never became Oracle database-specific. In recent years, Oracle has expanded GoldenGate by adding support for big data streaming integration and analytics.

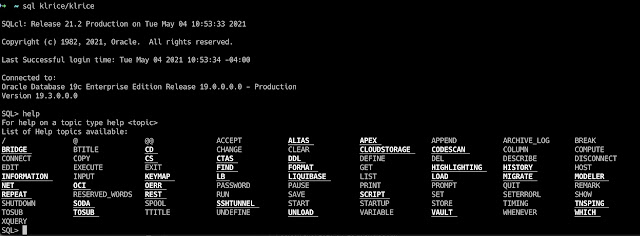

When Oracle acquired GoldenGate, the product differentiated itself by not requiring the staging servers that were the norm at the time; instead, as a change-data-capture (CDC) tool, it introspected change logs from source databases. That approach was ahead of its time in the early 2000s; fast forward to the present, and in the cloud, intercepting database change logs at the source has become the norm for data replication. The pathway to the new managed cloud service offering was opened several years ago when Oracle reengineered GoldenGate with a microservices architecture.
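
To make the log-based idea concrete, here is a minimal Python sketch of change-data-capture: a replicator reads an ordered change log from the source and applies only the entries it has not yet seen to a target copy. This is an illustration of the general technique, not GoldenGate's implementation or API; the `ChangeRecord` type, the `apply_changes` function, and the in-memory dictionary target are all hypothetical names chosen for this example.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Optional


@dataclass(frozen=True)
class ChangeRecord:
    """One entry in a (hypothetical) source database change log."""
    seq: int                     # monotonically increasing log position
    op: str                      # "insert", "update", or "delete"
    key: str
    value: Optional[str] = None  # unused for deletes


def apply_changes(log: Iterable[ChangeRecord],
                  target: Dict[str, str],
                  last_applied: int) -> int:
    """Apply log entries newer than last_applied to target.

    Returns the new last-applied position so the next run can resume
    from there without re-reading the source tables.
    """
    for rec in log:
        if rec.seq <= last_applied:
            continue  # already replicated on an earlier pass
        if rec.op in ("insert", "update"):
            target[rec.key] = rec.value
        elif rec.op == "delete":
            target.pop(rec.key, None)
        else:
            raise ValueError(f"unknown operation: {rec.op}")
        last_applied = rec.seq
    return last_applied


# Example: replicate three changes, then resume after a fourth arrives.
log = [
    ChangeRecord(1, "insert", "cust:1", "Alice"),
    ChangeRecord(2, "insert", "cust:2", "Bob"),
    ChangeRecord(3, "update", "cust:1", "Alicia"),
]
replica: Dict[str, str] = {}
position = apply_changes(log, replica, last_applied=0)

log.append(ChangeRecord(4, "delete", "cust:2"))
position = apply_changes(log, replica, last_applied=position)
```

Because the replicator tracks only a log position, no staging copy of the source data is needed, which is the property the paragraph above describes.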

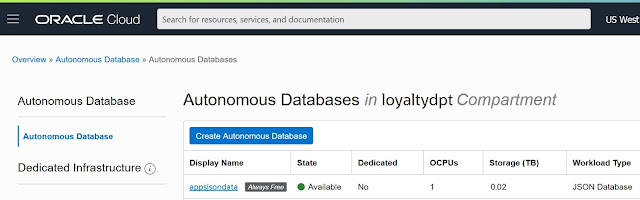

Today, Oracle GoldenGate competes with replication tools from niche providers like Striim (whose cofounders came from Oracle), Qlik Data Integration (formerly Attunity), and HVR; while most of these tools are available in cloud provider marketplaces, they are not delivered as managed PaaS services. Rival cloud services, such as AWS Glue, a serverless ETL offering, provide some similar capabilities within the AWS cloud but otherwise are not directly comparable with OCI GoldenGate.

Oracle’s service, which runs only in OCI, takes advantage of the same autoscaling technologies used by the Oracle Autonomous Database. It can manage connections to remote databases sitting in other clouds. Nonetheless, as Oracle has always kept GoldenGate as a product that plays well with non-Oracle databases, we believe that Oracle should offer this service in other public clouds.

As with the Autonomous Database, Oracle is pricing the OCI GoldenGate service aggressively, in this case at $0.67/hour per vCPU, which it claims is cheaper than rival cloud database migration services. With elastic cloud pricing and a service that is much easier to use, Oracle is seeking to expand beyond its enterprise comfort zone to midsized businesses that would not normally use such tools. The OCI GoldenGate service is generally available now in all of Oracle’s cloud regions globally.
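
For a rough sense of what the quoted rate implies, the short Python sketch below multiplies it out. Only the $0.67 per vCPU-hour figure comes from the article; the function name, the 2-vCPU example, and the 730-hour month are assumptions for illustration, and actual billing granularity or discounts may differ.

```python
RATE_PER_VCPU_HOUR = 0.67  # USD, the rate quoted above


def estimate_cost(vcpus: int, hours: float,
                  rate: float = RATE_PER_VCPU_HOUR) -> float:
    """Estimate cost in USD, assuming simple linear per-vCPU-hour billing."""
    if vcpus < 0 or hours < 0:
        raise ValueError("vcpus and hours must be non-negative")
    return round(vcpus * hours * rate, 2)


# Assumed scenario: 2 vCPUs running continuously for a 730-hour month.
monthly_estimate = estimate_cost(vcpus=2, hours=730)
```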

Source: oracle.com