Friday, June 30, 2023

Oracle Announces Oracle Database for Arm Architectures in the Cloud & On-Premises

Wednesday, June 28, 2023

US Treasury Department Affirms What Leading Exadata Cloud@Customer Financial Services Customers Already Know!

1. Insufficient transparency to support due diligence and monitoring by financial institutions.

2. Gaps in human capital and tools to securely deploy cloud services.

3. Exposure to potential operational incidents, including those originating at a Cloud Service Provider (CSP).

4. Potential impact of market concentration in cloud service offerings on the financial sector’s resilience.

5. Dynamics in contract negotiations given market concentration.

6. International landscape and regulatory fragmentation.

Monday, June 26, 2023

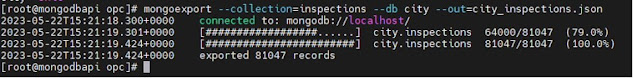

Develop MongoDB Applications with Oracle Autonomous Database on Dedicated Exadata Infrastructure

Friday, June 23, 2023

Benefits of Passing 1Z0-1091-22 Exam and Becoming an Oracle Certified Professional

In today's rapidly evolving technological landscape, staying ahead in your career requires continuous learning and upskilling. For professionals working with Oracle Fusion Cloud, the 1Z0-1091-22 exam is a crucial milestone. The exam tests your knowledge of implementing Oracle Utilities Meter Solution Cloud Service and leads to the Oracle Utilities Meter Solution Cloud Service 2022 Implementation Professional certification. This article shares essential tips to help you excel in the 1Z0-1091-22 exam and outlines the career paths the certification can open.

Understanding the Oracle Utilities Meter Solution Cloud Service 2022 Implementation Professional Certification

Oracle Utilities Meter Solution Cloud Service is a comprehensive solution that enables utility companies to manage and analyze the vast amounts of meter data they collect. It provides a centralized platform for data storage, validation, estimation, editing, and reporting. The 1Z0-1091-22 exam evaluates your proficiency in configuring and implementing the service to address the real-world challenges utility organizations face.

Preparing for the 1Z0-1091-22 Exam

To maximize your chances of success in the 1Z0-1091-22 exam, it is crucial to establish a well-structured study plan. Here are some essential tips to help you prepare effectively:

- Set Clear Goals: Define specific goals and objectives for your exam preparation journey. This will help you stay focused and motivated throughout the process.

- Gather Study Materials: Collect comprehensive study materials, including official Oracle documentation, textbooks, online courses, and practice exams. Referencing multiple resources will provide a well-rounded understanding of the subject matter.

- Practice Hands-on: Oracle Fusion Cloud is a practical platform, and hands-on experience is invaluable. Set up a practice environment and actively engage with Oracle Utilities MDM functionalities.

- Join Study Groups: Collaborate with fellow professionals preparing for the 1Z0-1091-22 exam. Participating in study groups allows for knowledge sharing, discussion of complex topics, and exposure to different perspectives.

- Manage Time Effectively: Create a study schedule that suits your routine and commitments. Allocate dedicated time slots for studying and stick to the plan consistently.

Effective Study Strategies

While studying for the 1Z0-1091-22 exam, employ these effective strategies to enhance your learning experience:

- Chunking: Break down complex topics into smaller, manageable chunks. This approach aids comprehension and retention of information.

- Active Recall: Engage in active recall techniques such as flashcards, summarizing concepts in your own words, or teaching the material to someone else. This strengthens memory and understanding.

- Interleaving: Instead of studying a single topic extensively, interleave your study sessions with different subjects. This technique promotes better long-term retention and improves overall understanding.

- Spaced Repetition: Regularly review previously covered topics at spaced intervals. Spaced repetition helps reinforce knowledge over time and prevents forgetting.

- Utilize Mnemonics: Mnemonic devices like acronyms or visual associations can assist in memorizing complex information, formulas, or critical concepts.

Recommended Learning Resources

Here are some highly recommended learning resources for comprehensive 1Z0-1091-22 exam preparation:

- Official Oracle Documentation: The official documentation provides in-depth information about Oracle Utilities Meter Solution Cloud Service and its related functionality.

- Oracle University Training: Oracle University offers instructor-led training courses specifically designed for Oracle Fusion Cloud certifications. Enroll in their "Oracle Utilities Meter Solution Cloud Service 2022 Implementation Professional" course for comprehensive exam preparation.

- Online 1Z0-1091-22 Practice Exams: Several online platforms provide 1Z0-1091-22 practice exams that simulate the actual exam environment. Practice exams help you assess your readiness and identify areas that require further attention.

1Z0-1091-22 Exam Day Tips

On the day of the 1Z0-1091-22 exam, follow these tips to optimize your performance:

- Get Sufficient Rest: Ensure you have a good night's sleep before the exam day to be mentally alert and focused.

- Arrive Early: Plan to arrive at the exam center well in advance to avoid any last-minute rush or anxiety.

- Read Instructions Carefully: Take your time to read the exam instructions thoroughly before starting the test. Understand the format and any specific requirements.

- Manage Time: Budget your time wisely throughout the exam. Pace yourself to complete all the questions within the allocated timeframe.

- Review Your Answers: Review your answers before submitting the exam if time permits. Check for any errors or omissions.

Exploring Career Prospects with the 1Z0-1091-22 Certification

The 1Z0-1091-22 certification holds significant value in today's technology-driven world. By obtaining this certification, professionals can unlock a wide range of career opportunities and enhance their prospects in the industry.

- Database Administrator: With the 1Z0-1091-22 certification, you can pursue a career as a database administrator. This role involves managing and organizing data within an organization's databases. Database administrators are critical in ensuring data security, optimizing database performance, and troubleshooting issues. This certification equips you with the necessary skills to excel in this field and opens doors to opportunities with organizations that rely on efficient data management.

- Database Developer: You can explore the database developer role as a certified professional. Database developers are responsible for designing, implementing, and maintaining databases. They work closely with software developers to ensure that applications are appropriately integrated with databases and meet the organization's requirements. The 1Z0-1091-22 certification provides you with the knowledge and expertise to excel in this field, allowing you to contribute to developing robust database solutions.

- Data Analyst: In the age of big data, the demand for skilled data analysts is soaring. With the 1Z0-1091-22 certification, you can pursue a career as a data analyst, where you will analyze and interpret data to uncover valuable insights and support decision-making processes. This certification equips you with the necessary skills to handle large datasets, perform data mining and analysis, and present findings effectively, making you a valuable asset to organizations across various industries.

- Database Consultant: Certified professionals can explore opportunities as database consultants, providing expert advice and guidance on database design, implementation, and optimization. As a consultant, you will work closely with clients to understand their unique requirements, analyze existing systems, and recommend solutions to improve performance, scalability, and security. The 1Z0-1091-22 certification enhances your credibility and positions you as a trusted advisor in database management.

- IT Project Manager: The 1Z0-1091-22 certification can also pave the way for a career in IT project management. As a project manager, you will oversee the planning, execution, and successful delivery of IT projects, including database-related initiatives. This certification equips you with a strong foundation in database technologies, enabling you to communicate effectively with technical teams, manage project timelines, and ensure successful project outcomes.

Conclusion

The 1Z0-1091-22 certification opens up a plethora of career prospects in the field of database management. Whether you aspire to become a database administrator, developer, data analyst, consultant, or project manager, this certification validates your expertise and enhances your professional credibility. Embrace the opportunities with this certification and embark on a rewarding career path in the ever-evolving realm of data management and technology.

Wednesday, June 21, 2023

Introduction to JavaScript in Oracle Database 23c Free - Developer Release

Developing Applications for Oracle Database: Client & Server

- Ensure that the compatible initialization parameter is set to 23.0.0 or higher

- The new initialization parameter multilingual_engine is set to enable

- The following system privileges have been granted to your user:

  - create mle

  - create procedure

  - any other privileges you need, such as those for creating tables and indexes; the db_developer_role might prove useful

- The execute on javascript object privilege has been granted to the user

- Your platform is Linux x86-64
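The checklist above can be sketched as a small validation routine. This is only an illustration in Python, not Oracle tooling: the `Environment` class, its field names, and `unmet_prerequisites` are hypothetical, and the values would have to be collected from your own database first (the sketch does not connect to anything).

```python
from dataclasses import dataclass, field


@dataclass
class Environment:
    """Hypothetical snapshot of the settings named in the checklist."""
    compatible: str                 # value of the compatible parameter, e.g. "23.0.0"
    multilingual_engine: str        # value of multilingual_engine, e.g. "enable"
    system_privileges: set = field(default_factory=set)
    object_privileges: set = field(default_factory=set)
    platform: str = "Linux x86-64"


def version_tuple(version: str) -> tuple:
    """Turn '23.0.0' into (23, 0, 0) so versions compare numerically."""
    parts = [int(part) for part in version.split(".")]
    parts += [0] * (3 - len(parts))  # pad short versions such as '23.0'
    return tuple(parts)


def unmet_prerequisites(env: Environment) -> list:
    """Return a message for every checklist item that is not satisfied."""
    problems = []
    if version_tuple(env.compatible) < (23, 0, 0):
        problems.append("compatible must be 23.0.0 or higher")
    if env.multilingual_engine.lower() != "enable":
        problems.append("multilingual_engine must be set to enable")
    granted = {priv.lower() for priv in env.system_privileges}
    for needed in ("create mle", "create procedure"):
        if needed not in granted:
            problems.append(f"missing system privilege: {needed}")
    if "execute on javascript" not in {p.lower() for p in env.object_privileges}:
        problems.append("missing object privilege: execute on javascript")
    if env.platform != "Linux x86-64":
        problems.append("platform must be Linux x86-64")
    return problems
```

An empty list means every item on the checklist is met; otherwise each entry names one item to fix before trying JavaScript in the database.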

Monday, June 19, 2023

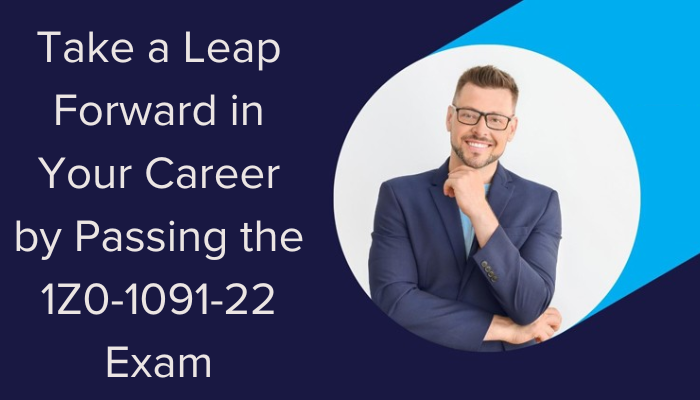

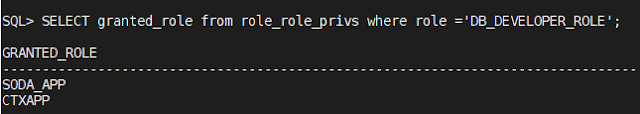

Oracle Database 23c: New feature - DB_DEVELOPER_ROLE

Friday, June 16, 2023

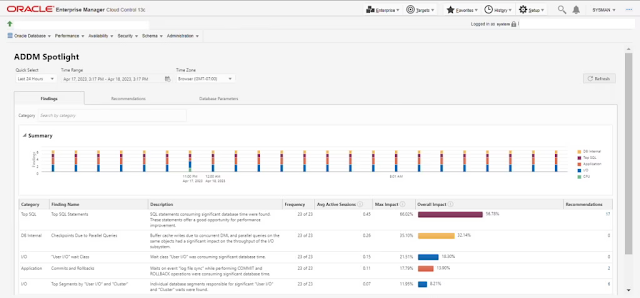

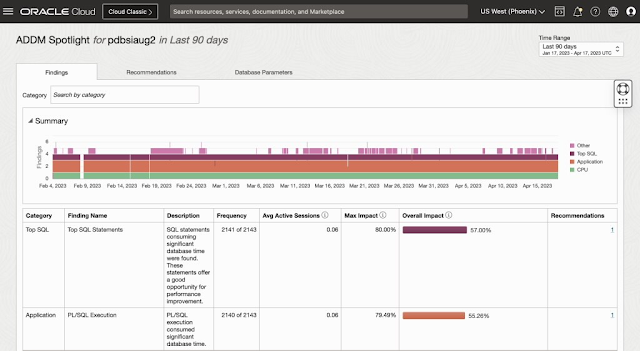

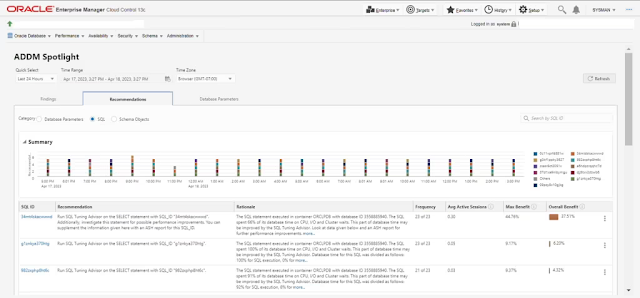

ADDM Spotlight provides strategic advice to optimize Oracle Database performance

ADDM Spotlight provides performance analysis for a variety of personas

Rich visualization with in-context drill-downs provides deeper insight into performance

ADDM Spotlight is available in EM and Operations Insights

| | OPSI ADDM Spotlight | EM ADDM Spotlight |
| --- | --- | --- |
| Retention period | 25 months | ADDM data is retained for 30 days in the database |
| Scope | Fleet-wide or compartment | Single target database |
| Supported versions | PDBs: 19c or higher*; Non-PDBs: 18c or higher | 19c or higher |
| Deployment type | Cloud and on-premises, ADB coming soon | Cloud and on-premises |

Wednesday, June 14, 2023

Second Quarterly Update on Oracle Graph (2023)

Graph Visualization App

PGQL Updates

- WALK: the default path mode, where no filtering of paths happens.

- TRAIL: where path bindings with repeated edges are not returned.

- ACYCLIC: where path bindings with repeated vertices are not returned.

- SIMPLE: where path bindings with repeated vertices are not returned unless the repeated vertex is the first and the last in the path.

- FETCH [FIRST/NEXT] 10 [ROW/ROWS] ONLY as standard form of LIMIT 10

- v [IS] SOURCE/DESTINATION [OF] e as standard form of is_source_of(e, v) / is_destination_of(e, v)

- e IS LABELED transaction as standard form of has_label(e, 'TRANSACTION')

- MATCH SHORTEST 10 (n) -[e]->* (m) as standard form of MATCH TOP 10 SHORTEST (n) -[e]->* (m)

- MATCH (n) -[e]->{1,4} (m) as alternative for MATCH ALL (n) -[e]->{1,4} (m) (the ALL keyword became optional)

- VERTEX_ID(v) / EDGE_ID(e) as alternative for ID(v) / ID(e)

- VERTEX_EQUAL(v1, v2) / EDGE_EQUAL(e1, e2) as alternative for v1 = v2 / e1 = e2
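To make the four path modes listed above (WALK, TRAIL, ACYCLIC, SIMPLE) concrete, here is a minimal Python sketch that reports which modes would keep a given path binding. It is not PGQL or Oracle Graph code: the function `allowed_path_modes` and the representation of a path as two lists of identifiers are assumptions made purely for illustration.

```python
def allowed_path_modes(vertices, edges):
    """Return the set of path modes that would keep this path binding.

    vertices: vertex identifiers in visit order, e.g. ["A", "B", "C"]
    edges:    edge identifiers in traversal order, one fewer than vertices
    """
    # WALK applies no filtering, so every path qualifies.
    modes = {"WALK"}

    # TRAIL rejects paths that repeat an edge.
    if len(set(edges)) == len(edges):
        modes.add("TRAIL")

    # ACYCLIC rejects paths that repeat a vertex.
    if len(set(vertices)) == len(vertices):
        modes.add("ACYCLIC")

    # SIMPLE rejects repeated vertices, except when the only repeat is
    # the first vertex reappearing as the last one (a closed cycle).
    closed = len(vertices) > 1 and vertices[0] == vertices[-1]
    inner = vertices[:-1] if closed else vertices
    if len(set(inner)) == len(inner):
        modes.add("SIMPLE")

    return modes
```

For example, the cycle A, B, C, A over three distinct edges is kept by WALK, TRAIL, and SIMPLE but filtered out by ACYCLIC, because the only repeated vertex is both the first and the last in the path.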