When size matters

This blog looks at the effect that Linux page size can have on database performance and hence how you can optimize your database Kubernetes nodes.

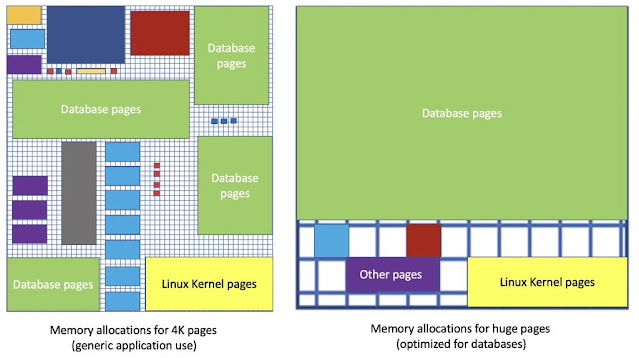

Most popular databases benefit from Linux huge pages, but most Kubernetes clusters use 4K Linux pages, which are not optimal for those databases.

Kubernetes was originally designed to orchestrate, at scale, the life cycle of containers for lightweight, stateless applications like Nginx, Java and Node.js. For this use case, Linux 4K pages are the correct choice. More recently Kubernetes has been enhanced to support large, stateful, persistent databases by adding features like StatefulSets, Persistent Volumes and huge pages.

The following picture shows just how much effect Linux huge pages can have on database performance.

The above picture shows that for the same database, with the same data and the same workload, the throughput can be up to 8x better when Linux 2MB pages are used rather than 4K pages. The graph also shows that as the level of concurrency increases, the benefit of huge pages also increases.

The rest of this blog covers some background concepts and looks at the factors which affect Linux page sizes for database workloads.

Linux Page Sizes

All modern multi-user operating systems use virtual memory to enable different processes to use memory without worrying about the low level details. Linux x86_64 systems use paging for their virtual memory management.

Linux x86_64 supports the following page sizes:

◉ 4K

◉ 2MB

◉ 1GB

The page size is the smallest unit of contiguous data that can be used for virtual memory management.

The size of a page is a trade off. A 4K page minimizes memory wastage for small memory allocations. For large memory allocations, using 2MB or 1GB pages requires fewer pages in total and can be significantly faster, as there is a cost associated with translating virtual memory addresses to physical memory addresses.
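The trade off can be made concrete with a little arithmetic. The following is a minimal sketch; the two allocation sizes are illustrative assumptions and do not come from the benchmarks in this blog.

```python
# Page sizes supported by Linux x86_64, in bytes.
PAGE_SIZES = {"4K": 4 * 1024, "2MB": 2 * 1024**2, "1GB": 1024**3}


def pages_and_waste(alloc_bytes, page_size):
    """Return (pages needed, bytes left unused in the last page)."""
    pages = (alloc_bytes + page_size - 1) // page_size  # round up
    return pages, pages * page_size - alloc_bytes


# Illustrative allocation sizes (assumptions, not from the benchmarks).
for label, alloc in (("10 KiB", 10 * 1024), ("64 GiB", 64 * 1024**3)):
    for name, size in PAGE_SIZES.items():
        pages, waste = pages_and_waste(alloc, size)
        print(f"{label} using {name} pages: {pages} pages, {waste} bytes unused")
```

A 10 KiB allocation needs three 4K pages and leaves 2 KiB unused, whereas a single 2MB page would leave almost all of the page unused. A 64 GiB allocation needs 16,777,216 pages at 4K but only 32,768 pages at 2MB, or 64 pages at 1GB.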

TLB cache hits and misses

Every memory access for any process on Linux (eg whether it is for Nginx, Node.js or MySQL) requires a translation from virtual memory to physical memory. As this is such a common operation, all CPUs have some form of Translation Lookaside Buffer [TLB] which acts as a cache for recently translated memory addresses.

All translations from virtual memory to physical memory first look to see whether the mapping already exists in the TLB. If the mapping already exists, it is called a TLB cache hit. TLB cache hits are very fast and occur in hardware. When a translation from virtual memory to physical memory does not exist in the TLB cache, it is called a TLB cache miss. A TLB cache miss requires the mapping to be resolved via a page walk of the page tables that the Linux kernel maintains in memory. On x86_64 the walk is performed by the CPU, but it can need several dependent memory reads [one per page table level, so up to four for a 4K page with 4-level paging], which makes it significantly slower than a TLB cache hit.

Why TLB cache misses matter for databases

All databases ultimately need to access data in memory for reads or writes. All of these database reads or writes need to do at least one TLB lookup. TLB cache misses can significantly slow down database reads and writes:

◉ The larger the database and the more distinct pages are accessed, the more TLB lookups are required. This is effectively the database working set size.

◉ The greater the concurrency, the more TLB lookups are needed per unit time.

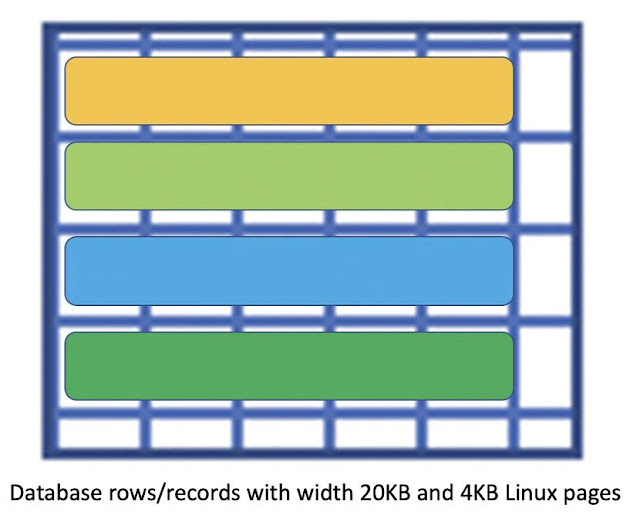

If you have rows/records with variable length data types (eg character strings, JSON, CLOBs or BLOBs) then these rows/records can easily be wider than 4KB. Accessing a single row/record with a width of 20KB will usually require at least five TLB lookups when the Linux page size is 4KB, as the row/record spans at least five pages. Accessing the same 20KB row/record would usually only take one TLB lookup if 2MB or 1GB Linux pages are used. So generally, the wider the database rows/records, the greater the benefit of huge pages vs 4K Linux pages.
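The "at least five" and "usually only one" figures for the 20KB row/record can be checked with a short calculation. This is a sketch only: the function name and the example start addresses are assumptions chosen for illustration.

```python
def pages_touched(start_addr, length, page_size):
    """Number of distinct pages covered by [start_addr, start_addr + length)."""
    first_page = start_addr // page_size
    last_page = (start_addr + length - 1) // page_size
    return last_page - first_page + 1


KIB, MIB = 1024, 1024**2
ROW = 20 * KIB  # the 20KB row/record from the text

print(pages_touched(0, ROW, 4 * KIB))              # page-aligned row, 4K pages
print(pages_touched(100, ROW, 4 * KIB))            # unaligned row, 4K pages
print(pages_touched(0, ROW, 2 * MIB))              # 2MB pages
print(pages_touched(2 * MIB - KIB, ROW, 2 * MIB))  # row straddling a 2MB boundary
```

A page-aligned 20KB row touches five 4K pages and an unaligned one touches six, which is why the text says "at least five". With 2MB pages the row touches one page unless it happens to straddle a 2MB boundary, in which case it touches two.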

The challenge is that CPUs have a small number of TLB cache entries:

◉ Intel Xeon Ice Lake: 64 entries in L1 TLB for 4K pages, 32 entries for 2MB pages, 8 entries for 1GB pages; 1024 entries in L2 TLB for 4K + 2MB pages; 1024 entries in L2 TLB for 4K + 1GB pages

◉ AMD EPYC Zen 3: 64 entries in L1 TLB for 4K + 2MB pages + 1GB pages; 512 entries in L2 TLB for 4K and 2MB pages

As there are usually only about 64 4K entries in the L1 TLB, and between 512 and 1024 4K entries in the L2 TLB on the latest Intel and AMD CPUs, a database that has wide rows/records and accesses a lot of different rows/records will tend to almost always get TLB cache misses.

If you use 2MB pages, then you are less likely to get a TLB cache miss, as each 2MB TLB entry maps 512 times more memory than a 4K entry. The amount of memory that the TLB can cover effectively becomes:

◉ 512 times larger on AMD EPYC Zen 3 CPUs for both the L1 and L2 TLBs

◉ 256 times larger on Intel Ice Lake CPUs for the L1 TLB [which has half as many 2MB entries as 4K entries] and 512 times larger for the L2 TLB
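The multipliers above follow from the amount of memory that a TLB can map at once [entries x page size], sometimes called the TLB reach. A small sketch using only the entry counts quoted in this blog:

```python
KIB, MIB = 1024, 1024**2


def tlb_reach(entries, page_size):
    """Bytes of memory that a TLB with this many entries can map at once."""
    return entries * page_size


# Entry counts as quoted in the text above.
zen3_l1 = tlb_reach(64, 2 * MIB) // tlb_reach(64, 4 * KIB)
zen3_l2 = tlb_reach(512, 2 * MIB) // tlb_reach(512, 4 * KIB)
ice_lake_l1 = tlb_reach(32, 2 * MIB) // tlb_reach(64, 4 * KIB)
ice_lake_l2 = tlb_reach(1024, 2 * MIB) // tlb_reach(1024, 4 * KIB)

print("Zen 3 L1:", zen3_l1, "x  L2:", zen3_l2, "x")
print("Ice Lake L1:", ice_lake_l1, "x  L2:", ice_lake_l2, "x")
print("Ice Lake L2 reach with 4K pages:", tlb_reach(1024, 4 * KIB) // MIB, "MiB")
print("Ice Lake L2 reach with 2MB pages:", tlb_reach(1024, 2 * MIB) // MIB, "MiB")
```

With 4K pages, the 1024 entry L2 TLB covers only 4 MiB of memory. With 2MB pages, the same number of entries covers 2048 MiB.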

Reducing the number of TLB cache misses can have a significant positive effect on database performance.
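To get a feel for how page size changes the miss count, here is a toy simulation. It is only a sketch under loud assumptions: it models a single, fully associative TLB with LRU replacement, uniformly random accesses and a 1 GiB working set. Real TLBs are multi-level and set associative, and none of these numbers come from the benchmarks below.

```python
import random
from collections import OrderedDict


def tlb_misses(page_size, tlb_entries, working_set_bytes, accesses, seed=1):
    """Count misses for uniformly random accesses against a toy LRU TLB."""
    rng = random.Random(seed)  # fixed seed so that runs are repeatable
    tlb = OrderedDict()        # page number -> True, oldest entry first
    misses = 0
    for _ in range(accesses):
        page = rng.randrange(working_set_bytes) // page_size
        if page in tlb:
            tlb.move_to_end(page)        # hit: mark as most recently used
        else:
            misses += 1
            tlb[page] = True
            if len(tlb) > tlb_entries:
                tlb.popitem(last=False)  # evict the least recently used entry
    return misses


GIB = 1024**3
ACCESSES = 20_000  # assumed number of simulated memory accesses
misses_4k = tlb_misses(4 * 1024, 1024, GIB, ACCESSES)
misses_2m = tlb_misses(2 * 1024**2, 1024, GIB, ACCESSES)
print(f"4K pages:  {misses_4k} misses out of {ACCESSES} accesses")
print(f"2MB pages: {misses_2m} misses out of {ACCESSES} accesses")
```

In this model a 1 GiB working set is 262,144 pages at 4K, far more than the 1024 entries, so almost every access misses. At 2MB it is only 512 pages, which all fit, so the only misses are the first touch of each page.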

Benchmarks

Linux does not care whether your database is MySQL, PostgreSQL or Oracle. Linux does not care whether your application was written in Node.js, Java, Go, Rust or C. Linux performance is dependent on metrics like how many TLB cache misses occur per unit time for your workload.

The following benchmarks look at several configurations:

◉ Narrow rows/records [128 Bytes] with an even probability of accessing 100 million different rows/records. The entire row/record should fit within a single 4KB Linux page.

◉ Medium rows/records [8 KB] with an even probability of accessing 100 million different rows/records. The row/record should span at least two 4KB Linux pages.

◉ Wider rows/records [16 KB] with an even probability of accessing 100 million different rows/records. The row/record should span at least four 4KB Linux pages. 16 KB is not very wide, but the results were significant.

To minimize the number of variables:

◉ Only database reads were performed, all 100 million rows in the database could easily fit into DRAM, and the database was 'warmed up'

◉ The database clients used IPC rather than TCP sockets to access the database

This configuration meant that there was no disk IO or network processing, so the workload would bottleneck on CPU and/or memory accesses.

4K Linux pages for 128 Byte rows/records

The above picture shows that it is possible to execute just over 3.5 million database reads per second on a single Linux machine using 4K Linux pages with 128 database connections on an AMD EPYC 7J1C3 @ 2.55 GHz processor.

4K vs 2MB pages for 128 Byte rows/records

The above picture shows that for the same hardware, same database, same table, same data and same queries, 2MB huge pages can enable up to 8x more throughput than 4K Linux pages.

8x more throughput is a significant result for narrow rows/records.

4K vs 2MB pages for 8 KB rows/records

With database rows/records which are 8KB wide, 2MB pages can provide up to 8x more throughput than 4K pages.

8x more throughput is a significant result for medium width rows/records.

4K vs 2MB pages for 16 KB rows/records

The above picture shows that for the same hardware, same database, same table, same data and same queries, 2MB huge pages can enable up to 5x more throughput than 4K Linux pages.

5x more throughput is a significant result for wider rows/records.

What about 2MB vs 1GB Linux Pages

The benefit of 2MB Linux pages vs 4K pages is easy to see, eg up to 8x better. Should you also expect to see significant differences between 2MB and 1GB Linux pages?

As all of the tested row widths could fit into a 2MB page, the only variable was the TLB cache miss ratios for 2MB vs 1GB Linux pages for 100 million different rows/records.

For all tested row widths [128 Bytes, 8KB and 16KB], 1GB Linux pages gave between 1% and 21% better throughput than 2MB Linux pages.

While a throughput improvement of up to 21% is not as impressive as 8x, it is still something.

Maybe tests with rows/records wider than 2MB would show a significant difference?

Kubernetes Node Specialization

In the early days of Kubernetes, the workloads tended to be for small, stateless 'web based' applications, eg load balancers, web servers, proxies and various application servers. For this use case, using Linux 4K pages is an appropriate choice.

Recently, more specialized workloads are being run in Kubernetes clusters which have distinct hardware and/or software requirements. For example, machine learning workloads can run on generic x86_64 CPUs, but tend to run much faster on Kubernetes Nodes which have GPUs or ASICs. Also, some Kubernetes Nodes may be specialized to have fast local storage, have more RAM or maybe run ARM64 CPUs.

So instead of all Kubernetes Nodes having the exact same CPU, RAM, storage etc, some Nodes could use DaemonSets or node labels to define and expose the specific capabilities of those Nodes. Giving Pods node selectors that match those node labels allows the Kubernetes scheduler to automatically run Pods on the most appropriate Nodes.
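The matching rule is simple: a Node is eligible only if it carries every key/value pair in the Pod's node selector. The sketch below mimics that rule outside of Kubernetes. The node names and the `example.com/hugepages` label are hypothetical and used purely for illustration; `kubernetes.io/arch` is a standard node label.

```python
def node_matches(node_labels, node_selector):
    """True if the node carries every key/value pair in the selector."""
    return all(node_labels.get(key) == value for key, value in node_selector.items())


# Hypothetical nodes and labels, for illustration only.
nodes = {
    "node-a": {"kubernetes.io/arch": "amd64"},
    "node-b": {"kubernetes.io/arch": "amd64", "example.com/hugepages": "2Mi"},
    "node-c": {"kubernetes.io/arch": "arm64", "example.com/hugepages": "2Mi"},
}

# Selector that a database Pod might carry (hypothetical label values).
database_selector = {"kubernetes.io/arch": "amd64", "example.com/hugepages": "2Mi"}

eligible = [name for name, labels in nodes.items() if node_matches(labels, database_selector)]
print(eligible)
```

Only `node-b` satisfies both labels, so it is the only Node on which the scheduler would consider placing the database Pod.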

The above picture shows a Kubernetes cluster with four types of specialized Nodes.

What can you do to optimize database performance on Kubernetes

Things which are usually outside your control:

◉ The width of your database rows/records

◉ How many rows/records are in your database

◉ Your database working set size

◉ The concurrency and frequency of data access in your database

◉ The TLB cache size of your CPU

Things that you can control in your Kubernetes cluster:

◉ Whether the Linux kernel uses 4KB, 2MB or 1GB Linux pages on your Linux x86_64 Kubernetes nodes

◉ How many Linux huge pages [2MB or 1GB] you configure

◉ The requests and limits for the memory and huge page resources of your Kubernetes applications [a database is considered an application in Kubernetes]
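As a sketch of what the requests and limits can look like, the following builds a Pod manifest as a Python dictionary and prints it as JSON, which `kubectl` accepts as well as YAML. The Pod name, image, sizes and node label are hypothetical. The `hugepages-2Mi` resource name and the `HugePages` emptyDir medium are the ones Kubernetes defines, and Kubernetes requires huge page requests to equal huge page limits.

```python
import json


def database_pod_manifest(name, image, hugepages_2mi, memory, node_selector):
    """Build a Pod manifest that requests pre-allocated 2MB huge pages."""
    resources = {"memory": memory, "hugepages-2Mi": hugepages_2mi}
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "nodeSelector": node_selector,
            "containers": [{
                "name": name,
                "image": image,
                # Huge page requests must equal limits, so reuse the same values.
                "resources": {"requests": dict(resources), "limits": dict(resources)},
                "volumeMounts": [{"name": "hugepage", "mountPath": "/hugepages"}],
            }],
            "volumes": [{"name": "hugepage", "emptyDir": {"medium": "HugePages"}}],
        },
    }


# All values below are hypothetical examples.
manifest = database_pod_manifest(
    name="orders-db",
    image="example.com/database:latest",
    hugepages_2mi="8Gi",
    memory="2Gi",
    node_selector={"example.com/hugepages": "2Mi"},
)
print(json.dumps(manifest, indent=2))
```

For 1GB pages the resource name would be `hugepages-1Gi` instead. The Node must already have enough huge pages of that size configured, otherwise the Pod cannot be scheduled there.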

You can choose to configure the Kubernetes Node [ie Linux host] with either 2MB or 1GB huge pages for a set of machines that you want to run database workloads on.

The way to configure huge pages on Linux is independent of Kubernetes. You must configure huge pages in the Linux kernel as you cannot do it at the Kubernetes or container level. Usually you want to turn off transparent huge pages as they generally do not benefit database performance and just waste RAM.

Configuring 2MB pages on Linux x86_64 is fairly simple for any Linux distribution and can normally be done without changes to boot time parameters.

The steps to configure 1GB Linux pages vary slightly by distribution and require boot time parameters. I was able to configure 1GB Linux pages on recent Intel Xeon and AMD CPUs for:
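Before changing anything, it helps to see what a Node currently has. The sketch below parses the huge page counters in `/proc/meminfo` and the transparent huge page mode in `/sys/kernel/mm/transparent_hugepage/enabled`. The function names are my own, and the files are only read if they exist, so the script is harmless on other systems.

```python
from pathlib import Path


def parse_hugepage_info(meminfo_text):
    """Extract the HugePages_* counters and Hugepagesize (in kB) from meminfo text."""
    info = {}
    for line in meminfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.startswith("HugePages_") or key == "Hugepagesize":
            info[key] = int(value.split()[0])
    return info


def active_thp_mode(thp_text):
    """Return the bracketed mode, eg 'always [madvise] never' -> 'madvise'."""
    for word in thp_text.split():
        if word.startswith("[") and word.endswith("]"):
            return word[1:-1]
    return None


meminfo = Path("/proc/meminfo")
thp = Path("/sys/kernel/mm/transparent_hugepage/enabled")
if meminfo.exists():
    print(parse_hugepage_info(meminfo.read_text()))
if thp.exists():
    print("transparent huge pages:", active_thp_mode(thp.read_text()))
```

A Node that is ready for a database which uses explicit huge pages would typically show a non-zero `HugePages_Total` and, following the advice above, a transparent huge page mode of `never`.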

◉ Red Hat Enterprise Linux 7.9 and 8.4

◉ Oracle Linux 7.9 and 8.4

◉ CentOS 7 and 8

◉ Ubuntu 18.04 and 20.04

◉ SUSE 12 and SUSE 15
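As a rough sketch of the two routes described above, the helpers below only build the settings as strings. The helper names are hypothetical. `vm.nr_hugepages` is the kernel sysctl for the number of default-size huge pages [2MB on x86_64 unless the default is changed], and `default_hugepagesz`, `hugepagesz` and `hugepages` are kernel boot parameters. How the boot parameters get onto the kernel command line is the part that varies by distribution and is not shown here.

```python
def sysctl_line_for_2mb_pages(count):
    """Line for a sysctl configuration file that reserves default-size huge pages."""
    return f"vm.nr_hugepages = {count}"


def kernel_args_for_1gb_pages(count):
    """Kernel boot parameters that reserve 1GB huge pages at boot time."""
    return f"default_hugepagesz=1G hugepagesz=1G hugepages={count}"


# Hypothetical sizes: 16 GiB of 2MB pages, or 16 pages of 1GB.
print(sysctl_line_for_2mb_pages(16 * 1024 // 2))
print(kernel_args_for_1gb_pages(16))
```

Reserving 1GB pages at boot time matters because the kernel needs 1GB of physically contiguous memory for each page, which becomes hard to find once memory is fragmented.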

How many huge pages should you configure for your database on Kubernetes

This question is database specific. It depends on how much RAM your Kubernetes Node has, how many other [non database] Pods you want to run on the Node, how much RAM those Pods need, and ultimately how much your database benefits from using more memory.
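One way to turn those factors into a starting number is to subtract what the other Pods and the operating system need from the Node's RAM and divide the remainder by the huge page size. This is only a back-of-the-envelope sketch: the Node size and the reservations are made-up assumptions, and the result is an upper bound to tune against your own database.

```python
GIB = 1024**3
PAGE_2MB = 2 * 1024**2
PAGE_1GB = 1024**3


def max_huge_pages(node_ram, other_pods_ram, os_reserve, page_size):
    """Upper bound on the huge pages a Node can give to the database."""
    available = node_ram - other_pods_ram - os_reserve
    return max(available, 0) // page_size


# Hypothetical Node: 256 GiB RAM, 32 GiB for other Pods, 16 GiB for the OS.
node_ram, other_pods, os_reserve = 256 * GIB, 32 * GIB, 16 * GIB
print("2MB pages:", max_huge_pages(node_ram, other_pods, os_reserve, PAGE_2MB))
print("1GB pages:", max_huge_pages(node_ram, other_pods, os_reserve, PAGE_1GB))
```

For this hypothetical Node, 208 GiB is left for the database, which is 106,496 pages of 2MB or 208 pages of 1GB. Memory reserved as huge pages is not available for ordinary 4K allocations, so reserving more than the database will use takes memory away from every other Pod on the Node.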

Source: oracle.com
