First, a very happy new year to all! I wanted to start my first blog post of the year by thanking our customers and partners for adopting the Oracle Autonomous Database and believing in our vision of a fully automated, enterprise-class database platform service. When we embarked on this journey 6 years ago, little did we know how quickly times would change and that automation would become the ultimate driver of business success in such a short period. Every database customer and prospect I have talked with in the past year has been excited about what we are building, and most decided to try Autonomous as an enabler in their digital transformation journey. So, once again, a big thank you to all our customers! We are fully committed to supporting you on your journey and to building the most amazing database platform service ever.

Here's a quick recap of the top features we added to the Autonomous Database on Dedicated Infrastructure in our public cloud regions and Cloud@Customer deployments.

Key Management Service (OCI Vault) support for cross-region Data Guard deployments

We added support for cross-region Data Guard deployments to use an external, HSM-based key management service in OCI for database encryption keys. This enables customers to deploy their tier 1, mission-critical databases with a cross-region Data Guard standby while retaining database encryption keys in an external key management system. Deployment of the Data Guard configuration and the TDE key configuration is completely automated through the console, API and SDKs.

Integration with local Key Vault for Autonomous on Cloud@Customer deployments

Customers who choose to run Autonomous databases in their own data centers like to retain the encryption keys locally within their network. Therefore, Cloud@Customer deployments of Autonomous have built-in integration with Oracle Key Vault (OKV) out of the box. Customers can onboard their existing Key Vault and it simply shows up as a cloud resource on their console. Autonomous databases can then source encryption keys from this customer-provided Key Vault. We also added support for cross-data-center deployments to use a clustered OKV deployment for disaster recovery.

PDB-level Access Control Lists (ACLs)

Security is everything in a vendor-managed cloud service. Therefore, we added an additional layer of network security by providing ACLs at the PDB level. Customers can now control access to each PDB by IP address or CIDR range.
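To illustrate what matching a client against IP address / CIDR entries means, here is a minimal sketch using Python's standard `ipaddress` module. It only demonstrates the matching concept; the function name `is_allowed` and the sample ACL entries are hypothetical and are not part of any Oracle API or of the service's actual implementation.

```python
import ipaddress
from typing import Iterable


def is_allowed(client_ip: str, acl_entries: Iterable[str]) -> bool:
    """Return True if client_ip matches any single-IP or CIDR entry.

    Hypothetical helper for illustration only; not an Oracle API.
    """
    addr = ipaddress.ip_address(client_ip)
    for entry in acl_entries:
        # A bare IP address such as "192.168.1.15" parses as a /32 (or /128) network.
        network = ipaddress.ip_network(entry, strict=False)
        if addr.version == network.version and addr in network:
            return True
    return False


# Hypothetical ACL for one PDB: one CIDR range and one single address.
pdb_acl = ["10.0.0.0/24", "192.168.1.15"]

print(is_allowed("10.0.0.42", pdb_acl))     # inside 10.0.0.0/24
print(is_allowed("10.0.1.42", pdb_acl))     # outside every entry
print(is_allowed("192.168.1.15", pdb_acl))  # exact single-address match
```

In the service itself, the ACL is configured on each PDB through the cloud tooling; the sketch above only shows how a list of addresses and CIDR ranges translates into an allow or deny decision.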

PCI and FedRAMP certification

Autonomous Database on Dedicated Infrastructure achieved PCI certification in OCI commercial regions and FedRAMP High certification in OCI Gov regions.

Fractional OCPU and 32 GB min storage allocation

This was a much-awaited feature for hyper-dense dev/test consolidation at lower cost. Customers can now deploy databases with 0.1 OCPU and a 32 GB Exadata storage unit, providing a 10x multiple on the number of databases that can be deployed per Exadata cluster for the same cost.
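The 10x figure follows from simple arithmetic, sketched below. The sketch assumes the previous minimum allocation was 1 OCPU per database and uses a hypothetical pool of 100 OCPUs; it ignores storage, memory and any other service limits, so treat it as back-of-the-envelope reasoning rather than a sizing tool.

```python
from fractions import Fraction


def max_databases(total_ocpus: int, ocpus_per_db: str) -> int:
    """Count how many databases fit in a CPU pool at a given per-database allocation.

    Fractions avoid floating-point rounding with values such as 0.1.
    Hypothetical helper: considers CPU only, not storage or other limits.
    """
    return int(Fraction(total_ocpus) // Fraction(ocpus_per_db))


pool = 100  # hypothetical number of OCPUs available on a cluster

whole = max_databases(pool, "1")         # assumed earlier minimum of 1 OCPU
fractional = max_databases(pool, "0.1")  # fractional OCPU minimum from the text

print(whole, fractional, fractional // whole)
```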

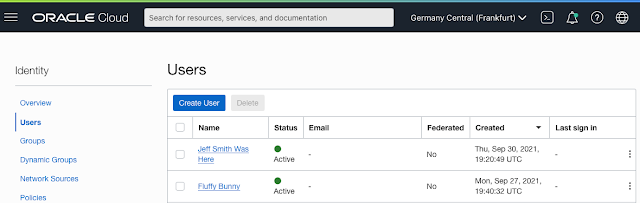

Operator Access Control

An industry first, Operator Access Control for Autonomous Database on Dedicated Infrastructure gives customers near real-time control over, and visibility into, Oracle operator actions as they work on customer systems. Customers approve or deny access requests, stream operator commands directly into their local or cloud SIEM, and can terminate an operator session with the click of a button.

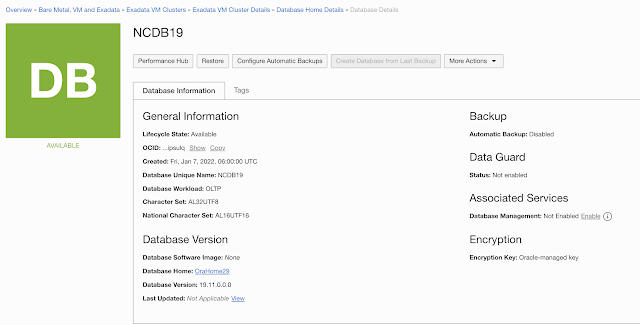

Integration with Database Management Service

This OCI-native service provides seamless monitoring of customers' Autonomous and non-autonomous databases, whether on-premises or in the cloud, through a single pane of glass.

X9M Exadata Systems for Cloud@Customer

With 496 cores and 10 TB of DRAM per rack, you can now run 600+ TB data warehouses on a single (unexpanded) 8-node cluster or densely consolidate your workloads for cost, power, cooling, and space savings.

Support for GoldenGate Capture and Replicat

Oracle GoldenGate is a widely used data replication tool with many active-active sites worldwide. Autonomous Dedicated now fully supports bi-directional replication using GoldenGate.

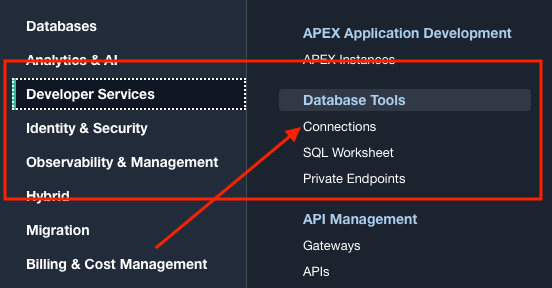

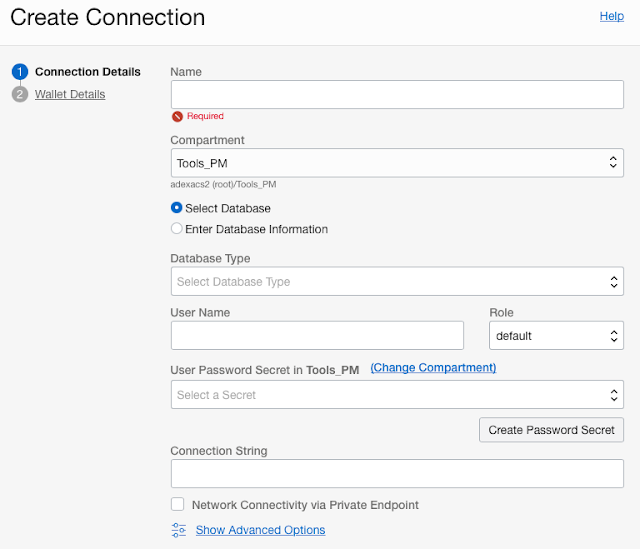

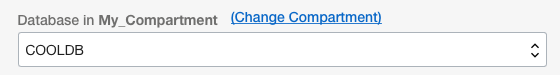

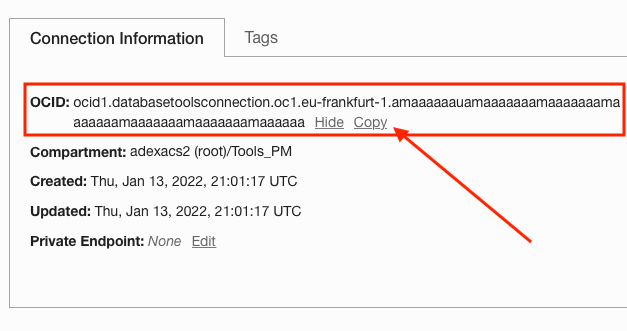

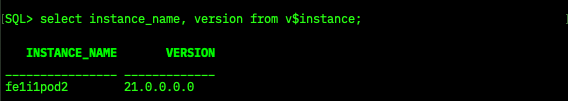

Oracle Database Actions

Database Actions is a web-based interface that provides development tools, data tools, administration, and monitoring features for Autonomous Database. Developers can now load data, write and execute SQL and PL/SQL code, and carry out many functions similar to those in SQL Developer through a web UI. So, one more addition to the great tools in Autonomous for developers.

We released numerous other features, such as support for one-off patching, shared server support for high session counts per core, support for the UTL_HTTP and UTL_SMTP packages, and various UX improvements. It's been a busy year and our development teams have been going full throttle. A big thank you to them as well; they are one of the most amazing, customer-focused teams I've worked with.

Here's how we did in the 2021 Gartner Magic Quadrant, released December 2021 - among 16 vendors, Oracle Autonomous Transaction Processing (ATP) ranked highest for all four use cases in Gartner Critical Capabilities for Cloud DBMS for Operational Use Cases. Among 18 vendors, Oracle Autonomous Data Warehouse (ADW) ranked in the top three for all the analytical use cases.

We announced many new regions around the world, as you may have read in various posts. Autonomous is now available in 30 commercial regions worldwide. We have also been building on our partnership with Azure and expanded the list of Azure-connected regions - so, if you are an Azure customer, you should check out what's now possible with Azure and the OCI Autonomous service.

We also held Database World, our annual customer event, online this year. How times have changed! There are many informative session recordings from product managers across the database org if you are interested. I did a best practices session on migrating to Autonomous, with useful tips on all the 'gotchas' to watch out for when you move workloads to Autonomous.

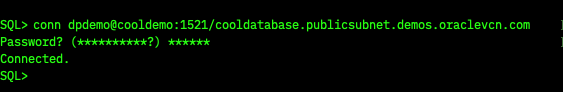

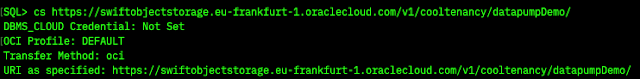

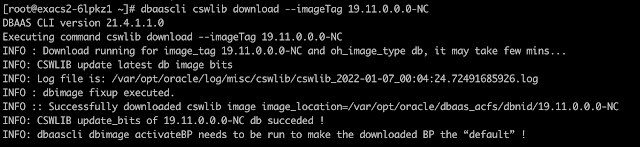

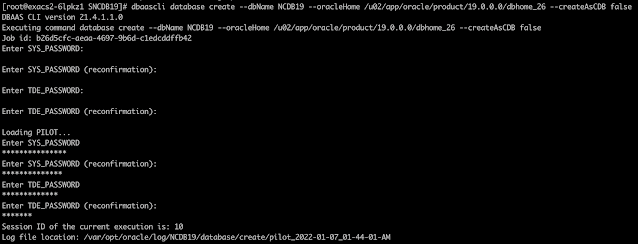

We also added many new hands-on labs for Autonomous Dedicated - there are 40+ lab guides with step-by-step instructions on provisioning, security management and developing applications.

For those looking to upskill for career advancement, we made OCI certification free. You can now train to be an Autonomous Database specialist using our free LiveLabs training platform and then take the certification exam at no cost. The free certification offer runs until Feb 28, 2022, so you may need to put a rush order on that one!

My PM colleagues and I also did an Autonomous master class session at the All India Oracle User Group (AIOUG) late last year, and they graciously allowed me to share the session recording even though it's for AIOUG members only. Many thanks, AIOUG!

So once again, thank you all. We will continue to work to make the Autonomous services easy to use, to help you move existing workloads and, most importantly, to help you build your next-generation applications.

Source: oracle.com