Wednesday, March 30, 2022

Oracle Database 19c Running @Memory Speed

Sunday, March 27, 2022

How to Apply a Patch on DBCS with Data Guard

Oracle Database Cloud Service (DBCS) runs Enterprise and Standard Edition databases on Virtual Machine (VM) DB Systems and lets you easily build, scale, and secure Oracle databases cost-effectively in the cloud. Database patching is an important and critical subject. This article walks through the patching process for DBCS with Data Guard.

PATCHING

To patch DBCS with Data Guard, apply the patch to the standby database first and then to the primary. Follow the steps below to apply the patch.
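The standby-first rule can be captured in a small orchestration sketch. It assumes a hypothetical `apply_patch` callback that wraps whatever actually performs the patch (for example the OCI Console or API) and returns `True` on success; the `Database` class and database names are also illustrative, not part of any Oracle tool.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Database:
    name: str
    role: str  # Data Guard role: "PRIMARY" or "STANDBY"


def patch_order(databases: List[Database]) -> List[Database]:
    """Return the databases in patch order: every standby before the primary."""
    standbys = [db for db in databases if db.role == "STANDBY"]
    primaries = [db for db in databases if db.role == "PRIMARY"]
    if len(primaries) != 1:
        raise ValueError("expected exactly one primary database")
    return standbys + primaries


def patch_data_guard(
    databases: List[Database], apply_patch: Callable[[Database], bool]
) -> List[str]:
    """Patch databases in Data Guard order and return the names patched.

    apply_patch is a hypothetical callback that performs the real patch step
    and returns True on success. Because standbys come first, a standby
    failure stops the run before the primary is touched.
    """
    patched: List[str] = []
    for db in patch_order(databases):
        if not apply_patch(db):
            raise RuntimeError(f"patch failed on {db.name} ({db.role}); stopping")
        patched.append(db.name)
    return patched


# Hypothetical database names, for illustration only.
dbs = [Database("prod_prim", "PRIMARY"), Database("prod_stby", "STANDBY")]
print(patch_data_guard(dbs, lambda db: True))  # ['prod_stby', 'prod_prim']
```

Raising on the first failure is a deliberate choice: if the standby cannot be patched, the primary is left on its known-good version.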

Friday, March 25, 2022

Multiple VM Autonomous Database on Exadata Cloud@Customer debuts

I am excited to announce the launch of Multiple VM Autonomous Database on Exadata Cloud@Customer. One of our customers' most frequently requested features has been the ability to create Autonomous Database VM clusters and Exadata Database VM clusters on a single Exadata Cloud@Customer infrastructure. With the launch of Multiple VM Autonomous Database, customers can now create multiple Autonomous Exadata VM Clusters and Exadata Database VM Clusters on all their existing Exadata Cloud@Customer platforms from X7 Gen 2 to the newest generation.

Oracle's Exadata Cloud@Customer is the world's simplest path for customers to realize cloud benefits for databases: self-service API agility, a pay-per-use financial model, high availability, security, and standardization that reduces business risk. It brings these cloud benefits to the customer, behind their firewall, inside their data centers, and fully managed by Oracle using cloud-native APIs. Exadata Cloud@Customer allows organizations to modernize their database estate and take advantage of cloud benefits without changing anything at the application layer of their enterprise architecture, while meeting security, governance, and regulatory requirements.

Oracle Autonomous Database is the most operationally complete and simple-to-use database service for developers and administrators of database applications. The service provides machine-learning-driven, touchless, mission-critical capabilities with automatic and dynamic scaling, performance, and security. It is especially well suited for modern application architectures that use multiple data types, workloads, and analytic functions in a single solution.

Autonomous Database on Exadata Cloud@Customer (ADB-C@C) was announced in July 2020, providing an operationally complete and simple-to-use database service with all the benefits of the cloud in customers' data centers.

To take advantage of ADB-C@C, customers have to create four primary resources:

◉ Exadata Cloud@Customer infrastructure

◉ Autonomous Exadata VM Cluster

◉ Autonomous Container Database

◉ Autonomous Database
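The four resources nest inside one another: the infrastructure hosts the Autonomous Exadata VM Cluster, the VM cluster hosts the Autonomous Container Database, and the container database hosts the Autonomous Database. A minimal sketch of that creation order follows; the type labels and resource names are hypothetical and are not OCI API identifiers.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

# Hypothetical type labels (not OCI API names); each maps to the parent it is created inside.
PARENT_TYPE: Dict[str, Optional[str]] = {
    "ExadataInfrastructure": None,
    "AutonomousExadataVmCluster": "ExadataInfrastructure",
    "AutonomousContainerDatabase": "AutonomousExadataVmCluster",
    "AutonomousDatabase": "AutonomousContainerDatabase",
}


@dataclass
class Resource:
    name: str
    rtype: str
    parent: Optional["Resource"] = None


class Inventory:
    """Tracks created resources and rejects any resource whose parent is missing."""

    def __init__(self) -> None:
        self.resources: Dict[str, Resource] = {}

    def create(self, name: str, rtype: str, parent_name: Optional[str] = None) -> Resource:
        expected = PARENT_TYPE[rtype]
        parent = None
        if expected is not None:
            parent = self.resources.get(parent_name) if parent_name else None
            if parent is None or parent.rtype != expected:
                raise ValueError(f"{rtype} requires an existing {expected} parent")
        resource = Resource(name, rtype, parent)
        self.resources[name] = resource
        return resource


def lineage(resource: Optional[Resource]) -> List[str]:
    """Names from the infrastructure down to the given resource."""
    chain: List[str] = []
    while resource is not None:
        chain.append(resource.name)
        resource = resource.parent
    return chain[::-1]


inv = Inventory()
inv.create("ecc-infra", "ExadataInfrastructure")
inv.create("avmc-1", "AutonomousExadataVmCluster", "ecc-infra")
inv.create("acd-1", "AutonomousContainerDatabase", "avmc-1")
adb = inv.create("adb-sales", "AutonomousDatabase", "acd-1")
print(lineage(adb))  # ['ecc-infra', 'avmc-1', 'acd-1', 'adb-sales']
```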

However, as shown in Figure 1, the original deployment architecture required customers to dedicate the entire platform to either an Autonomous Exadata VM Cluster or Exadata Database VM Clusters. Multiple VM Autonomous Database removes this restriction and offers the following benefits:

- Single infrastructure with both Exadata Database Service and Autonomous Database Service
  - Gain Autonomous Database experience using capacity on your existing infrastructure
  - Efficiently allocate resources for different workloads on the same physical infrastructure
  - Incrementally upgrade all databases and conveniently migrate to Autonomous Database on the same Exadata Cloud@Customer

- Lowest cost to adopt Autonomous Database
  - Set up Autonomous VM Clusters at no cost, enabling the creation of a cost-effective private Database-as-a-Service (DBaaS) environment
  - Pay only for running Autonomous Database workloads
  - Leverage fractional CPU cores, auto-scale consumption as needed, and start/stop individual databases to reduce cost

- Simplify new and existing workloads
  - Fully automate and optimize existing workloads
  - Provide developer self-service databases for creating new applications

- Flexible license types
  - Use both BYOL and License Included Autonomous Databases on the same Exadata Cloud@Customer infrastructure

- Defer production costs for critical deployments
  - Create and test Autonomous Data Guard between Autonomous Exadata VM Clusters on the same Cloud@Customer infrastructure
  - Enable dev/test use cases that require Autonomous Data Guard at a low cost

- Enable specialized workloads
  - Customize the compute, storage, and memory of each Autonomous Exadata VM Cluster configuration to optimally support different workloads

- Secure environment separation
  - Network-isolate Autonomous Exadata VM Clusters to provide enhanced security and predictable performance for specific workloads (dev/test, staging, and production)

OCI Console Experience

Availability

Wednesday, March 23, 2022

Use Oracle Enterprise Manager data with OCI to unlock new insights

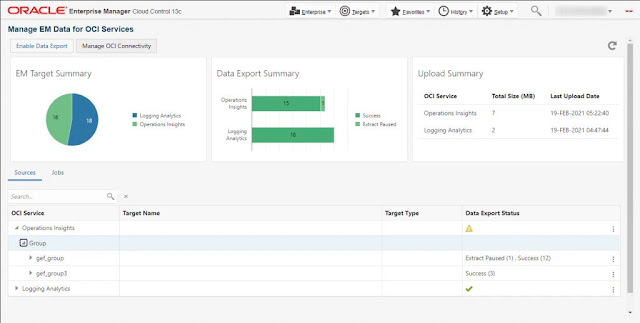

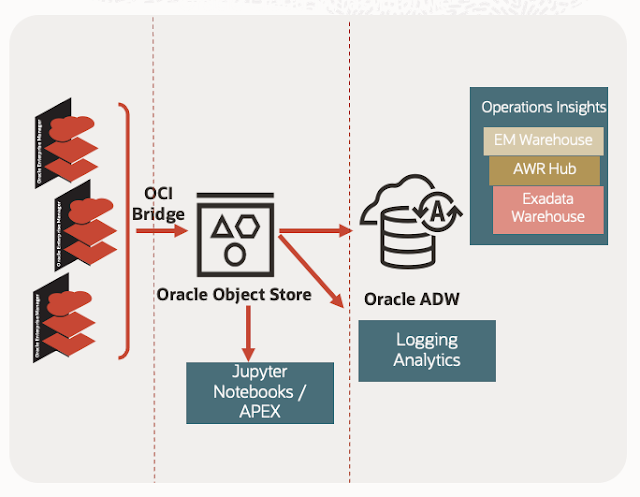

Oracle Enterprise Manager 13.5 customers gain greater operational, capacity-planning, and forecasting insight by enabling rich Enterprise Manager target-level data and sharing it with Oracle Cloud Observability & Management (O&M) platform services. Enterprise Manager transfers data from managed targets and the Oracle Management Repository (OMR) to OCI Object Storage, where Oracle Cloud O&M services such as Operations Insights and Logging Analytics can access it.

Gain greater insight from the data Enterprise Manager already collects

Oracle Cloud provides new storage, processing, and machine learning technologies that help customers solve more business problems using target-level data already collected and managed by Enterprise Manager. It eliminates the need for custom scripting, data warehouse building, or extra on-site hardware or storage to perform IT operational analytics and planning.

Customers configure Oracle Enterprise Manager to transfer data from the targets it manages and from the Oracle Management Repository (OMR) into Oracle Cloud Infrastructure (OCI) Object Storage, where Oracle Cloud services can access it securely. Once OCI connectivity is set up, data is uploaded to OCI Object Storage automatically and on a regular basis, building a data lake. IT Operations teams and DBAs then use OCI Operations Insights to perform capacity planning and forecasting with Enterprise Manager data and other sources. Prerequisites: customers must already have (or add) an Oracle Diagnostics Pack license, and must have an Oracle Cloud account set up with the credentials and connectivity described in the EM 13.5 documentation.
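To make "capacity planning and forecasting" concrete, here is a minimal sketch that projects when storage fills up from daily usage samples. It uses a plain least-squares linear trend, a swapped-in illustrative technique; it is not how Operations Insights computes its forecasts, and the sample data and capacity are hypothetical.

```python
from typing import List, Optional, Sequence, Tuple


def linear_fit(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def days_until_full(daily_usage_gb: List[float], capacity_gb: float) -> Optional[float]:
    """Days after the last sample until the linear trend reaches capacity.

    Returns None when usage is flat or shrinking (no projected exhaustion).
    """
    if len(daily_usage_gb) < 2:
        raise ValueError("need at least two samples to fit a trend")
    days = list(range(len(daily_usage_gb)))
    slope, intercept = linear_fit(days, daily_usage_gb)
    if slope <= 0:
        return None
    day_full = (capacity_gb - intercept) / slope
    return max(0.0, day_full - days[-1])


# Hypothetical daily tablespace usage samples (GB) and capacity.
print(days_until_full([100, 110, 120, 130, 140], capacity_gb=200))  # 6.0
```

A linear trend is the simplest defensible baseline; real workloads with seasonality need richer models, which is where a managed service earns its keep.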

The following graphic illustrates how target data flows from Enterprise Manager to an Oracle Cloud service once the configuration process is complete.

Set up and configure Enterprise Manager so that target data flows to an Oracle Cloud service

Set up the OCI Bridge and target groups in OCI, then validate that the configuration works

Monday, March 21, 2022

Enhancing Database and Apps Troubleshooting with ML-Driven Log Analytics Using EM OCI Bridge

Enterprise applications grow more complex by the day, and the ability to easily analyze log data generated by different systems is critical to smooth business operations.

Oracle Enterprise Manager is Oracle’s premier monitoring solution for on-premises and cloud environments. Oracle Cloud Infrastructure services can take advantage of its valuable telemetry data to perform further resource analysis. Oracle Cloud Logging Analytics is a cloud solution that allows you to index, enrich, aggregate, explore, search, analyze, correlate, visualize, and monitor all log data from your applications and system infrastructure, in the cloud or on-premises.

Enterprise Manager (EM) users can now set up the OCI Bridge to import target model data, such as associations and properties, into Oracle Cloud Logging Analytics and start analyzing logs in a few steps.

EM collects detailed information from managed targets, such as the configuration needed to locate log files and the hierarchical relationships between entities that enable topology views of applications and infrastructure components. The Logging Analytics EM integration automatically builds a topology view across the application stack to help application, IT, and database administrators troubleshoot availability and performance issues quickly.
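The idea of turning parent/child associations into a topology view can be sketched in a few lines. This is an illustration of the data structure only, not the Logging Analytics implementation; the target names and association pairs are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def build_topology(associations: List[Tuple[str, str]]) -> Dict[str, List[str]]:
    """Map each parent target to its child targets."""
    children: Dict[str, List[str]] = defaultdict(list)
    for parent, child in associations:
        children[parent].append(child)
    return children


def roots(associations: List[Tuple[str, str]]) -> List[str]:
    """Targets that appear as a parent but never as a child."""
    parents = {p for p, _ in associations}
    kids = {c for _, c in associations}
    return sorted(parents - kids)


def render(children: Dict[str, List[str]], node: str, depth: int = 0) -> List[str]:
    """Indented tree lines; assumes the associations contain no cycles."""
    lines = ["  " * depth + node]
    for child in children.get(node, []):
        lines.extend(render(children, child, depth + 1))
    return lines


# Hypothetical target names and associations.
assocs = [
    ("order-app", "weblogic-domain"),
    ("weblogic-domain", "wls-server-1"),
    ("order-app", "orders-db"),
    ("orders-db", "db-host-1"),
]
topology = build_topology(assocs)
for root in roots(assocs):
    print("\n".join(render(topology, root)))
```

Walking from a root down the tree is what lets a troubleshooter jump from an application to the database and host whose logs explain its failure.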

OCI Bridge

Friday, March 18, 2022

Difference between Structured, Semi-structured and Unstructured data

Big Data is characterized by huge volume, high velocity, and a wide variety of data. It comes in three types: structured data, semi-structured data, and unstructured data.

1. Structured data

Structured data is data whose elements are addressable for effective analysis. It is organized into a formatted repository, typically a database, and covers all data that can be stored in an SQL database table with rows and columns. Structured data has relational keys and can easily be mapped into predesigned fields. Today, it is the most commonly processed type of data in development and the simplest way to manage information. Example: relational data.
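A minimal sketch of structured data, using Python's built-in `sqlite3` as a stand-in relational database: rows and columns with keys, and a join across tables. The table names and values are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Fixed schema: every row has the same typed columns, linked by keys.
conn.executescript("""
CREATE TABLE customers (
    customer_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL
);
CREATE TABLE orders (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(customer_id),
    amount      REAL NOT NULL
);
""")
conn.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Alice"), (2, "Bob")])
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(10, 1, 25.0), (11, 1, 40.0), (12, 2, 15.0)],
)

# The relational key makes a join and aggregation straightforward.
rows = conn.execute("""
SELECT c.name, SUM(o.amount)
FROM customers c JOIN orders o ON o.customer_id = c.customer_id
GROUP BY c.name
ORDER BY c.name
""").fetchall()
print(rows)  # [('Alice', 65.0), ('Bob', 15.0)]
```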

2. Semi-Structured data

Semi-structured data is information that does not reside in a relational database but has some organizational properties that make it easier to analyze. With some processing you can store it in a relational database (this can be very hard for some kinds of semi-structured data), but semi-structured formats exist to make storage easier. Example: XML data.

3. Unstructured data

Unstructured data is data that is not organized in a predefined manner or does not have a predefined data model, so it is not a good fit for a mainstream relational database. Alternative platforms exist for storing and managing it. Unstructured data is increasingly prevalent in IT systems and is used by organizations in a variety of business intelligence and analytics applications. Examples: Word documents, PDFs, text files, and media logs.
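To contrast semi-structured and unstructured data, the sketch below holds the same hypothetical order facts as XML and as free text, using only the Python standard library. The XML tags act as self-describing fields that can be queried by name, while the plain text allows only textual or pattern search.

```python
import re
import xml.etree.ElementTree as ET

# Semi-structured: tags describe each value, but no table schema is declared up front.
xml_doc = """
<orders>
  <order id="10"><customer>Alice</customer><amount>25.0</amount></order>
  <order id="11"><customer>Bob</customer><amount>15.0</amount></order>
</orders>
"""
root = ET.fromstring(xml_doc.strip())
alice_total = sum(
    float(order.findtext("amount"))
    for order in root.findall("order")
    if order.findtext("customer") == "Alice"
)
print(alice_total)  # 25.0

# Unstructured: the same facts as free text carry no field names, so only
# pattern search is available, and linking amounts to people is guesswork.
text_doc = "Alice ordered items worth 25.0 dollars. Bob spent 15.0 on his order."
amounts = [float(m) for m in re.findall(r"\d+\.\d+", text_doc)]
print(amounts)  # [25.0, 15.0]
```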

Differences between Structured, Semi-structured and Unstructured data:

| Property | Structured data | Semi-structured data | Unstructured data |
|---|---|---|---|
| Technology | Based on relational database tables | Based on XML/RDF (Resource Description Framework) | Based on character and binary data |
| Transaction management | Mature transactions and various concurrency techniques | Transactions adapted from DBMS; not mature | No transaction management and no concurrency |
| Version management | Versioning over tuples, rows, and tables | Versioning over tuples or graphs is possible | Versioned as a whole |
| Flexibility | Schema-dependent and less flexible | More flexible than structured data but less flexible than unstructured data | Most flexible; no schema |
| Scalability | Very difficult to scale the DB schema | Simpler to scale than structured data | Most scalable |
| Robustness | Very robust | New technology, not widely adopted | - |
| Query performance | Structured queries allow complex joins | Queries over anonymous nodes are possible | Only textual queries are possible |

Wednesday, March 16, 2022

Oracle Zero Downtime Migration 21.3


Zero Downtime Migration (ZDM) 21.3 is available for download! ZDM is Oracle’s premier solution for moving Oracle Database workloads to Oracle Cloud, supporting various Oracle Database versions as the source and most Oracle Cloud Database Services as targets. Zero Downtime Migration 21.3 enhances the existing functionality by adding online cross-platform migration, Standby Databases as a source, Data Guard Broker Integration, and many more features!