- “What are our total streams?”

- “Break that out by genre”

- “Add customer segment”

- “Keep the top 5 customer segments and genres by total views. Include a rank in the result”

Wednesday, February 28, 2024

Conversations are the next generation in natural language queries

Monday, February 26, 2024

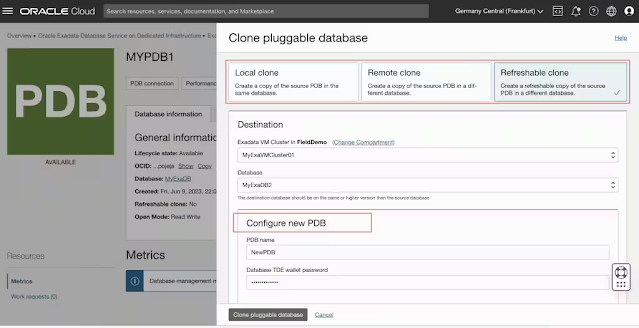

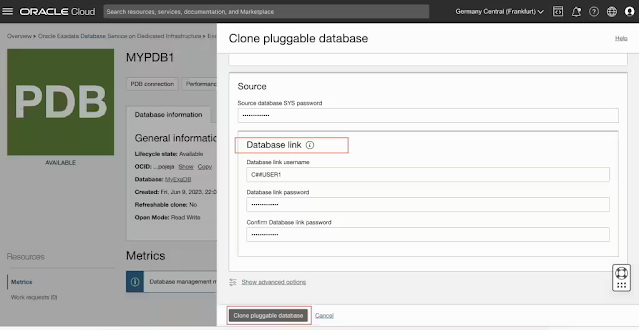

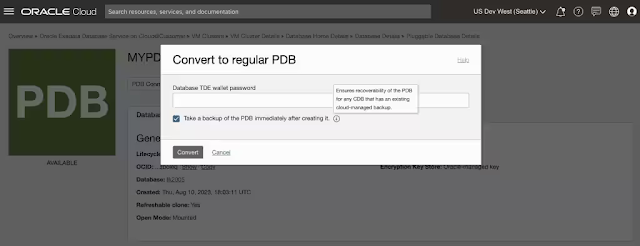

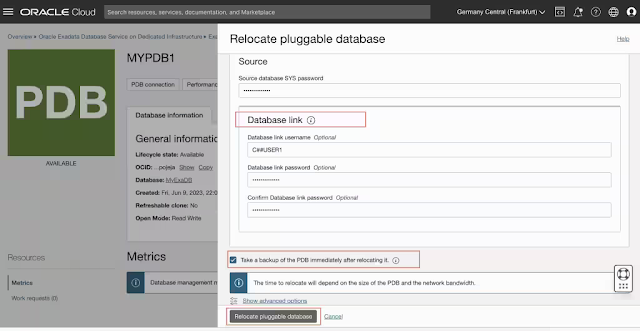

Enhanced PDB automation on Exadata and Base Database Services

Key Benefits

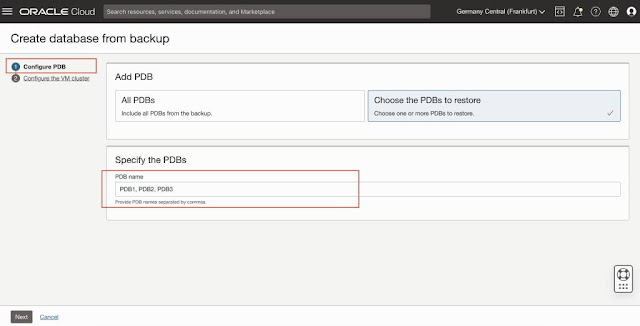

PDB backup

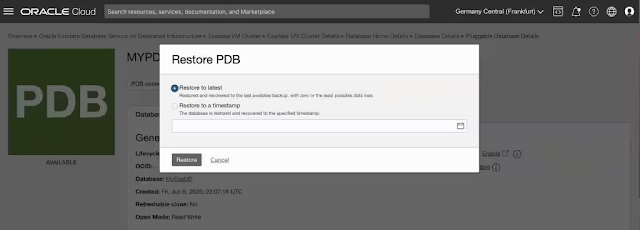

PDB restore - In-place and Out-of-place restore

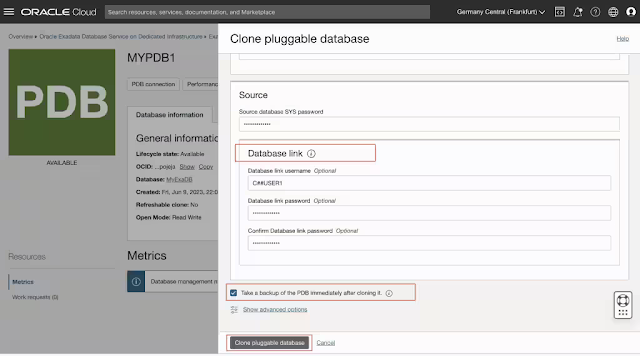

Remote clone

Refreshable Clone

PDB relocate

PDB open modes

Considerations

- Enhanced PDB automation is supported on database releases 19c and later.

- PDB clone (remote, refreshable) and relocate are supported across the Compartment, VM Cluster, DB System (BaseDB), VCN, Network (ExaDB-C@C), and database version (same or higher). The same is not supported across the Availability Domain (AD) and Exadata Infrastructure.

- A Data Guard standby cannot be either a source or a destination for the PDB clone and PDB relocate operations.

- A refreshable clone PDB created on a Data Guard primary will not appear on standby - its existence will be deferred until converted to a regular PDB.

- Currently, PDB automation is supported for the databases using TDE wallet-based encryption (Oracle-managed keys).

Thursday, February 22, 2024

Oracle 1Z0-076 Exam Prep: Tips & Resources

Are you planning to take the Oracle 1Z0-076 exam and become certified in Oracle Database 19c: Data Guard Administration? If so, you might wonder how to prepare for this challenging exam and what resources to use. This article shares tips and resources to help you ace the 1Z0-076 exam and boost your career as an Oracle Data Guard expert.

What Is the Oracle 1Z0-076 Exam?

The Oracle 1Z0-076 exam is the certification exam for Oracle Database 19c: Data Guard Administration. It tests your knowledge and skills on Oracle Data Guard concepts, configuration, management, optimization, monitoring, data protection, high availability, and disaster recovery. The exam consists of 74 multiple-choice questions you must answer in 120 minutes. The passing score is 61%.

Why Should You Take the Oracle 1Z0-076 Exam?

Oracle Data Guard is a powerful feature of Oracle Database that enables you to create and maintain one or more standby databases to protect your data from failures, disasters, human errors, and data corruption. Oracle Data Guard also provides high availability, scalability, and performance benefits for your database applications.

By taking the Oracle 1Z0-076 exam and becoming certified in Oracle Database 19c: Data Guard Administration, you can prove your proficiency and expertise in Oracle Data Guard and gain a competitive edge in the job market. You can also improve your career prospects and credibility as an Oracle Data Guard professional.

According to ZipRecruiter, the average salary of an Oracle Data Guard Administrator in the US is $115,000 annually. The demand for Oracle Data Guard Administrators is also expected to grow as more and more organizations adopt Oracle Database 19c and leverage its Data Guard capabilities.

How to Prepare for the Oracle 1Z0-076 Exam: Tips and Resources

Preparing for the Oracle 1Z0-076 exam requires a lot of dedication, practice, and revision. Here are some tips and resources that can help you prepare effectively and efficiently for the exam:

- Review the exam objectives and topics: The first step in your preparation is to review the exam objectives and topics and understand what the exam expects from you. You can consult the official Oracle website and list the topics you must study and master.

- Study the official Oracle documentation: The official Oracle documentation is the best source of information and guidance for Oracle Data Guard 19c. You can find the documentation on the Oracle website and read the relevant sections that cover the exam topics. The documentation provides detailed explanations, examples, and Oracle Data Guard 19c best practices.

- Take an online course: If you prefer a more structured and interactive way of learning, you can take an online course that covers the Oracle 1Z0-076 exam topics. Many online platforms, such as Udemy, Pluralsight, and Oracle University, offer high-quality and affordable courses for Oracle Data Guard 19c. You can choose a course that suits your learning style, budget, and schedule. An online course can help you learn the concepts, techniques, and best practices for Oracle Data Guard 19c from experienced instructors and experts.

- Practice with hands-on labs: One of the best ways to reinforce your learning and test your skills is to practice with hands-on labs, which let you apply your knowledge and gain practical experience with Oracle Data Guard 19c. You can use a virtual machine, a cloud service, or a physical server to set up your own Oracle Data Guard environment and work through tasks and scenarios like those covered by the exam. You can also use the Oracle LiveLabs service, which provides free access to Oracle Cloud environments and guided workshops for Oracle Data Guard 19c.

- Take practice tests: Another effective way to prepare for the Oracle 1Z0-076 exam is to take practice tests. Practice tests can help you evaluate your knowledge, recognize your strengths and weaknesses, and familiarize yourself with the exam format and question types. You should take practice tests regularly and review your results and feedback carefully. You should also aim to score at least 80% on the practice tests before taking the exam.

- Revise and relax: The final step in your preparation is to revise and relax. Review the exam objectives, topics, official Oracle documentation, online course materials, and practice test questions and answers, and make a summary or cheat sheet of the key points and concepts you must remember for the exam. Rest well before exam day: avoid cramming and stressing yourself out, eat well, sleep well, and stay hydrated. Arrive at the exam center early, and stay confident and calm.

Apply Your Knowledge and Skills in Real or Simulated Scenarios

Preparing for the Oracle 1Z0-076 exam requires applying your knowledge and skills in actual or simulated scenarios. The best way to master Oracle Data Guard 19c is to practice it in a realistic and relevant context, where you can apply the concepts, techniques, and tools you have learned.

You can apply your knowledge and skills in actual or simulated scenarios by:

- Setting up your own Oracle Data Guard 19c environment: You can set up your own Oracle Data Guard 19c environment by using Oracle VirtualBox, Oracle Cloud, or Oracle Database Appliance. You can then create, configure, manage, optimize, monitor, protect, and recover your Oracle Data Guard 19c databases and experiment with different scenarios and options.

- Taking online or offline Oracle Data Guard 19c courses or labs: You can take online or offline Oracle Data Guard 19c courses or labs that provide hands-on, guided learning experiences on Oracle Data Guard 19c features and functions. These courses and labs can be found on Oracle University, Oracle Learning Library, or Oracle LiveLabs.

- Participating in online or offline Oracle Data Guard 19c projects or challenges: You can join in online or offline Oracle Data Guard 19c projects or challenges that provide real-world and complex problems or tasks on Oracle Data Guard 19c features and functions. You can find some of these projects or challenges on Oracle Developer Community, Oracle Code, or Oracle Hackathons.

By applying your knowledge and skills in actual or simulated scenarios, you can develop your practical and analytical abilities and prepare yourself for the exam and the job.

Conclusion

The Oracle 1Z0-076 exam is a challenging but rewarding exam that can help you become certified in Oracle Database 19c: Data Guard Administration and advance your career as an Oracle Data Guard professional.

By following the tips and resources we shared in this article, you can prepare effectively and efficiently for the exam and achieve your certification goal.

We wish you all the best for your exam and your future endeavors.

Wednesday, February 21, 2024

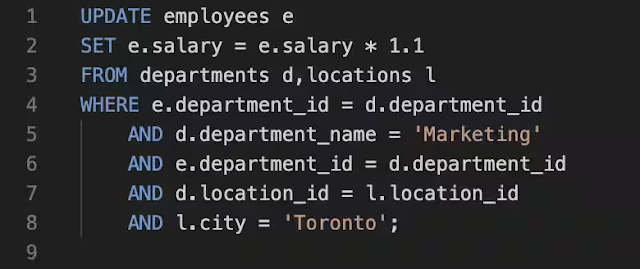

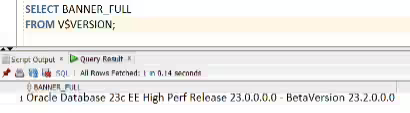

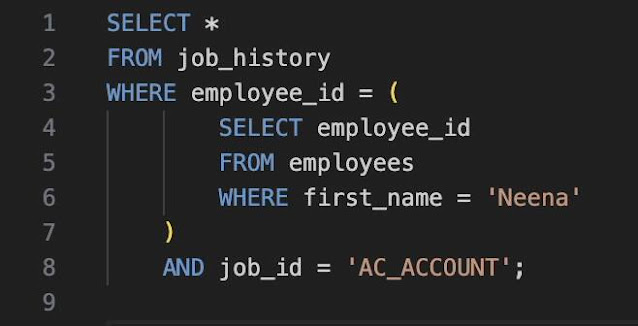

Oracle Database 23c: New feature - Direct Joins for UPDATE and DELETE Statements

Scenario 1: Updating Salaries based on Department and City

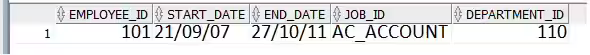

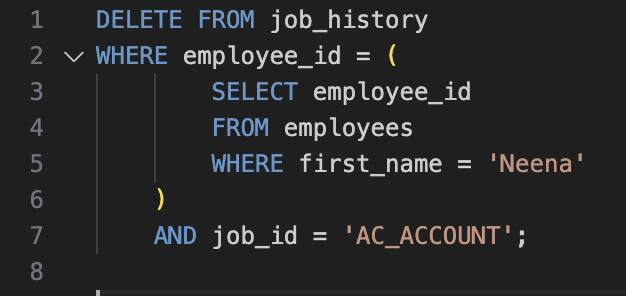

Scenario 2: Delete employee Neena's job history where she worked as AC_ACCOUNT
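
As a rough sketch of what the two scenarios could look like with the 23c direct join syntax, assuming the standard HR sample schema (EMPLOYEES, DEPARTMENTS, LOCATIONS, JOB_HISTORY). The department name, city, and 10% raise in the first statement are illustrative values chosen for this sketch, not taken from the original scenario:

```sql
-- Scenario 1 (sketch): raise salaries for employees of one department in one city.
-- 'Finance', 'Seattle' and the 10% raise are assumed example values.
UPDATE employees e
SET    e.salary = e.salary * 1.10
FROM   departments d, locations l
WHERE  e.department_id   = d.department_id
AND    d.location_id     = l.location_id
AND    d.department_name = 'Finance'
AND    l.city            = 'Seattle';

-- Scenario 2 (sketch): delete Neena's job history rows for the AC_ACCOUNT job.
-- The join to EMPLOYEES is what identifies Neena by first name.
DELETE job_history jh
FROM   employees e
WHERE  jh.employee_id = e.employee_id
AND    e.first_name   = 'Neena'
AND    jh.job_id      = 'AC_ACCOUNT';
```

Before 23c, the same logic would typically need a subquery or a MERGE statement; the FROM clause lets the joined tables be named directly in the UPDATE or DELETE.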

Monday, February 19, 2024

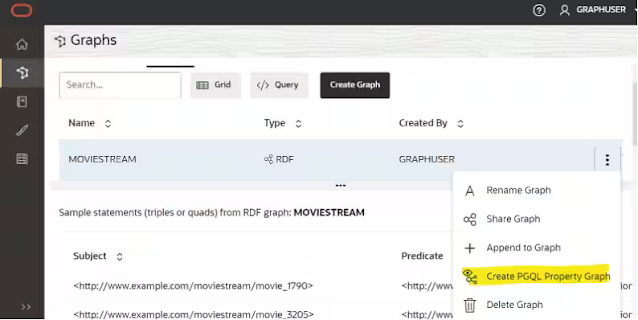

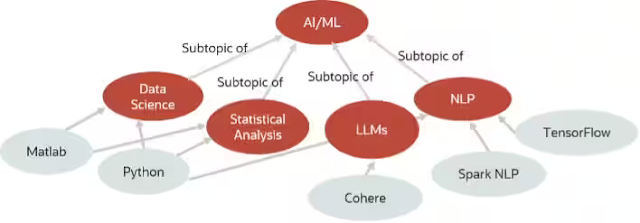

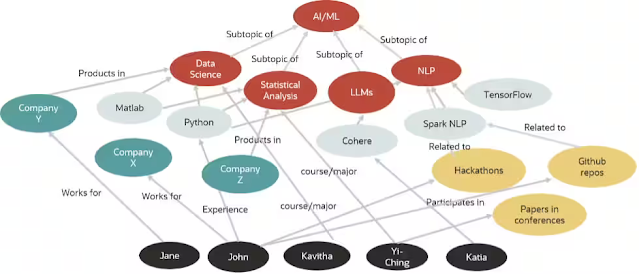

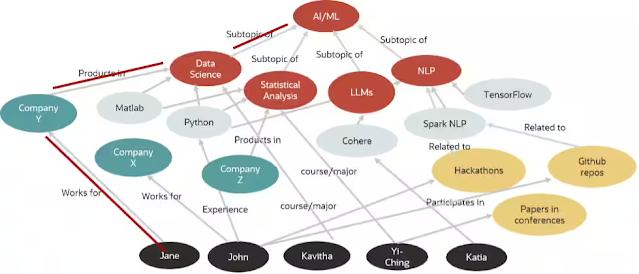

Accelerate your Informed Decision-Making: Enable Path Analytics on Knowledge Graphs

What is a Knowledge Graph?

What is a Property Graph?

What Does a Property Graph View on a Knowledge Graph Do?

More Informed Decision Making

Friday, February 16, 2024

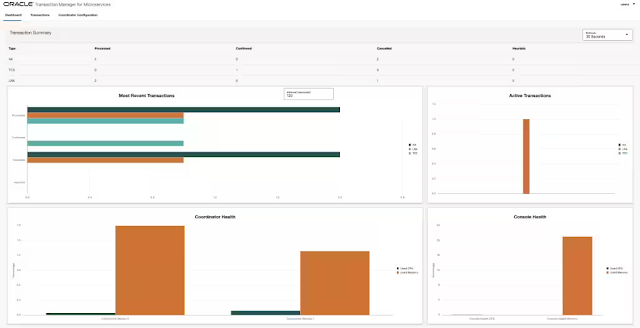

MicroTx Enterprise Edition Features

No Limit on Transaction Throughput

Admin Console

Transaction Coordinator Clusters

Persistent Transaction State

RAC Support for XA Transactions

Common XID

XA Transaction Promotion

Grafana Dashboard

Wednesday, February 14, 2024

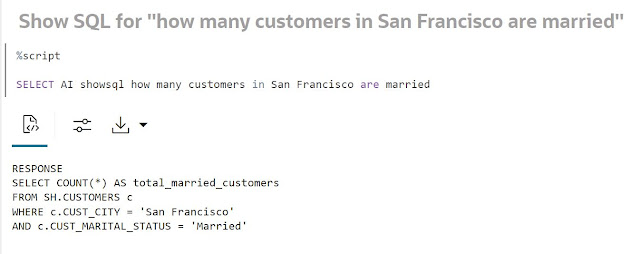

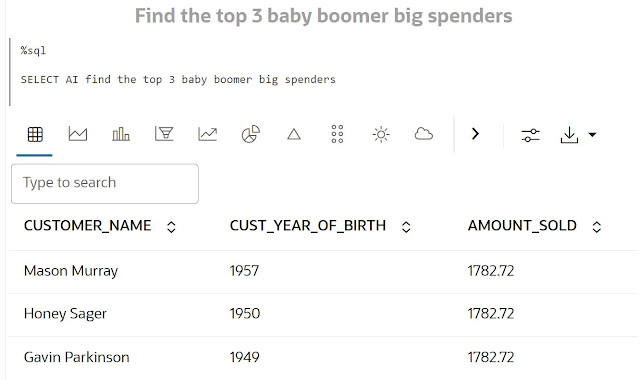

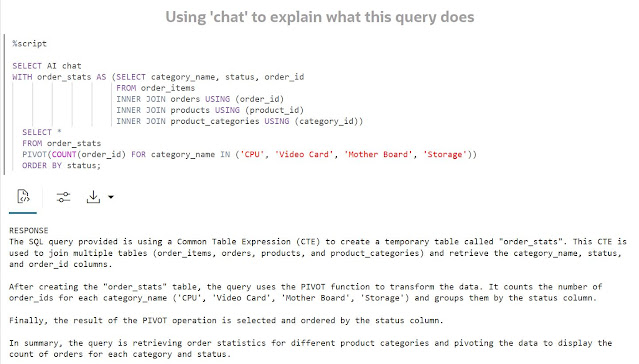

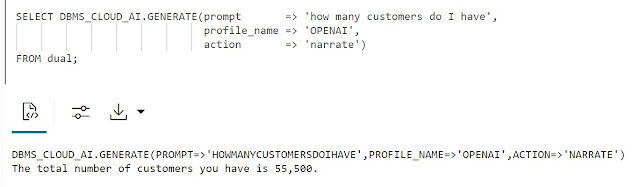

Introducing Select AI - Natural Language to SQL Generation on Autonomous Database

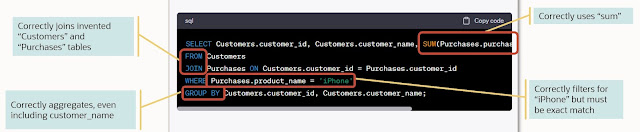

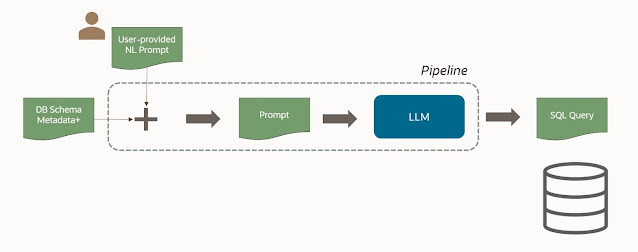

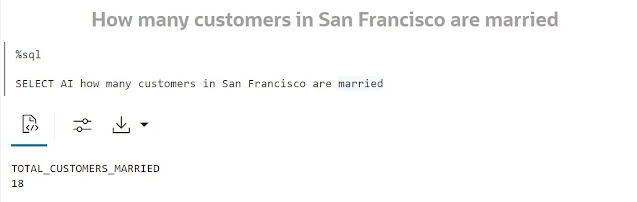

Introducing Autonomous Database Select AI - enabling you to query your data using natural language. Combining generative AI large language models (LLMs) with Oracle SQL empowers you to describe what you want - declarative intent - and let the database generate the SQL query relevant to your schema. Some LLMs might be good at generating SQL, but being able to run that SQL against your database is another matter. Select AI enables generating SQL that is specific to your database.
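
A minimal sketch of what this looks like in a SQL session, assuming an AI profile has already been created with DBMS_CLOUD_AI.CREATE_PROFILE. The profile name MY_AI_PROFILE is hypothetical, and the generated SQL depends on your schema and AI provider:

```sql
-- Make a previously created AI profile active for this session.
-- MY_AI_PROFILE is a hypothetical name used only for this sketch.
BEGIN
  DBMS_CLOUD_AI.SET_PROFILE(profile_name => 'MY_AI_PROFILE');
END;
/

-- Describe what you want in natural language; the database generates
-- a query against your schema and runs it.
SELECT AI what are our total streams;

-- Use the showsql action to see the generated SQL instead of running it.
SELECT AI showsql what are our total streams;
```

Reviewing the output of showsql before running a prompt is a reasonable habit, since the generated query is only as good as the metadata the profile exposes to the LLM.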

Because LLMs are trained on huge volumes of text data, they can understand the nuances and even the intended meaning of most natural language queries. Using natural language to generate SQL reduces the time required to write queries, simplifies query building, and minimizes or eliminates the need for specialized SQL knowledge. Deriving information that would otherwise require queries with multiple joins, nested subqueries, and other SQL constructs becomes much easier and faster. While analytics tools make it easy to query and understand database data, interacting with your SQL database through natural language prompts can increase the productivity of both expert and non-expert SQL users, who can query their database without writing the queries themselves.

By learning effective SQL query patterns from curated training data, LLMs can produce more efficient queries that perform better. As part of Oracle Autonomous Database, Select AI inherits all security and authentication features of the database.

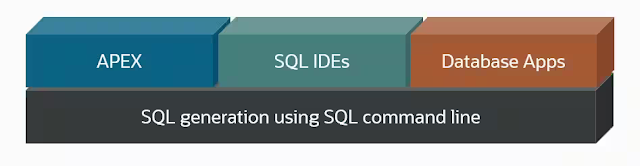

By virtue of being integrated with Oracle SQL, this capability is available through any SQL IDE, SQL Developer Web, Oracle Application Express (APEX), and Oracle Machine Learning Notebooks. Any application that invokes SQL and has an AI provider account also has access to Select AI.