In conversational artificial intelligence (AI), Retrieval-Augmented Generation (RAG) has emerged as an important technique for improving chatbots. RAG addresses a fundamental limitation of traditional chatbots: they rely solely on pretrained language models, so their responses often lack current, specific, or contextually nuanced information. By combining a retrieval mechanism with language generation, RAG-based systems can pull in relevant, up-to-date content from external sources at query time. This improves the quality and accuracy of chatbot responses and keeps them informed by the latest information.

As users come to expect intelligent and responsive digital interactions, RAG-based systems have become increasingly important for chatbots. Traditional chatbots, constrained by the scope of their training data, often struggle to provide up-to-date, specific, and contextually relevant responses. RAG addresses this issue by retrieving external information at query time and passing it to the language model as context. This approach improves the accuracy and relevance of responses and helps chatbots handle niche or specialized queries more effectively. Because the external knowledge source can be updated independently of the model, chatbot responses can also reflect new data and trends without retraining.

By grounding answers in retrieved sources, RAG can make chatbot responses more detailed and reliable, which helps build user engagement and trust. The technique is particularly useful for chatbots for the following reasons:

- Enhanced knowledge and information retrieval: RAG enables a chatbot to pull in relevant information from a large body of documents or a database. This feature is particularly useful for chatbots that need to provide accurate, up-to-date, or detailed information that isn’t contained within the model’s pretrained knowledge base.

- Improved answer quality: By retrieving relevant documents or snippets of text as context, RAG can help a chatbot generate more accurate, detailed, and contextually appropriate responses. This capability is especially important for complex queries where the answer might not be straightforward or requires synthesis of information from multiple sources.

- Balancing generative and retrieval capabilities: Traditional chatbots are either generative (creating responses from scratch) or retrieval-based (finding the best match from a set of predefined responses). RAG allows for a hybrid approach, where the generative model can create more nuanced and varied responses based on the information retrieved, leading to more natural and informative conversations.

- Handling long-tail queries: In situations where a chatbot encounters rare or unusual queries (known as long-tail queries), RAG can be particularly useful. It can retrieve relevant information even for these less common questions, allowing the generative model to craft appropriate responses.

- Continuous learning and adaptation: Because RAG-based systems can pull in the latest information from external sources, they can remain up-to-date and adapt to new information or trends without requiring a full retraining of the model. This ability is crucial for maintaining the relevance and accuracy of a chatbot over time.

- Customization and specialization: For chatbots designed for specific domains, such as medical, legal, or technical support, RAG can be tailored to retrieve information from specialized databases or documents, making the chatbot more effective in its specific context.

The need for vector databases and embeddings

Retrieval-augmented generation systems must capture the nuanced, semantic relationships inherent in human language and complex data patterns. Traditional databases, which are structured around exact keyword matches, often fall short in this regard. Vector databases instead use embeddings (dense, multidimensional representations of text, images, or other data types) to capture these subtleties. By converting data into vectors in a high-dimensional space, these databases enable context-aware searches that match on meaning rather than exact wording. This capability is crucial in RAG tasks, where the goal is not just to find the most directly relevant information but to generate responses that are semantically aligned with the query. Because embedding models are trained on large datasets, embeddings can encode a wide range of relationships and concepts, allowing more accurate and efficient retrieval and generation of information.
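
To make the idea of semantic closeness concrete, the following minimal sketch computes cosine similarity, one common metric for comparing embeddings. The three-dimensional vectors and their labels are made-up toy values for illustration, not output from a real embedding model.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical toy "embeddings"; real models produce far more dimensions.
toy_embeddings = {
    "reset my password": [0.9, 0.1, 0.0],
    "recover account access": [0.8, 0.2, 0.1],
    "quarterly sales report": [0.0, 0.2, 0.9],
}

query = toy_embeddings["reset my password"]
scores = {text: cosine_similarity(query, vec) for text, vec in toy_embeddings.items()}

# Print the texts from most to least similar to the query.
for text, score in sorted(scores.items(), key=lambda item: item[1], reverse=True):
    print(f"{score:.3f}  {text}")
```

In this toy data, the two account-related phrases share no keywords yet score close to each other, while the unrelated phrase scores far lower. That is the behavior an exact keyword match cannot provide.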

In this post, we use the Llama2 model and deploy an endpoint using Oracle Cloud Infrastructure (OCI) Data Science Model Deployment. We create a question-answering application using Streamlit, which takes a question and responds with an appropriate answer.

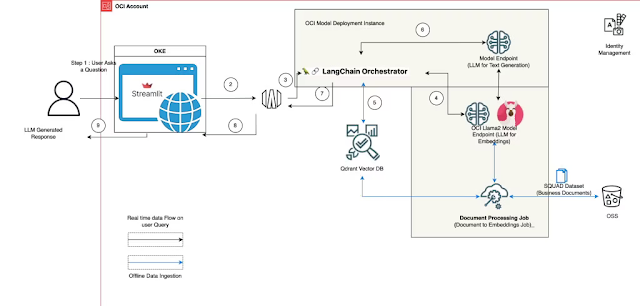

High-level solution overview

Deployment of the solution uses the following steps:

- The user provides a question through the Streamlit web application.

- The Streamlit application calls the predict API of the model deployment.

- The model deployment invokes LangChain to convert the user's question into an embedding.

- The function invokes a Qdrant Service API to send the request to the vector database to find the top k similar documents.

- The function creates a prompt with the user query and the similar documents as context and asks the large language model (LLM) to generate a response.

- The function returns the response through the API gateway to the Streamlit server.

- The user can view the response on the Streamlit application.

Getting started

This post walks you through the following steps:

- Setting up the Qdrant database instance

- Initializing Qdrant with LangChain

- Setting up RAG

- Deploying a Streamlit server

To implement this solution, you need an OCI account, familiarity with LLMs, and access to OCI OpenSearch and OCI Data Science Model Deployment. You also need access to GPU instances, preferably VM.GPU.A10.2. We used the following GPU instances to get started.

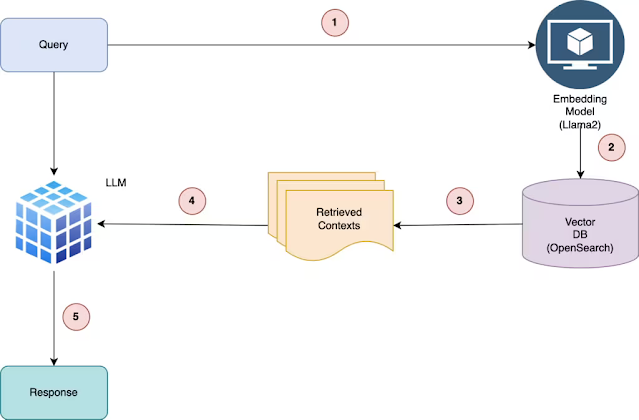

The workflow diagram moves through the following steps:

- Pass the query to the embedding model to semantically represent it as an embedded query vector.

- Pass the embedded query vector to our vector database.

- Retrieve the top-k relevant contexts, measured by k-nearest neighbors (KNN) between the query embedding and all the embedded chunks in our knowledge base.

- Pass the query text and retrieved context text to our LLM.

- The LLM generates a response using the provided content.
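
The retrieval step in this workflow can be sketched in plain Python as an exhaustive k-nearest-neighbors scan. This is only an illustration under stated assumptions: the chunk texts and vectors are invented toy values, cosine similarity is assumed as the metric, and a vector database such as Qdrant typically uses an index instead of scanning every chunk.

```python
import heapq
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def top_k_chunks(query_vector, chunks, k=3):
    """Return the k (score, text) pairs most similar to query_vector.

    chunks is a list of (text, vector) pairs; every chunk is scored.
    """
    scored = ((cosine_similarity(query_vector, vec), text) for text, vec in chunks)
    return heapq.nlargest(k, scored)


# Hypothetical knowledge base with toy 3-dimensional embeddings.
knowledge_base = [
    ("OCI Data Science supports model deployment.", [0.9, 0.1, 0.1]),
    ("Qdrant stores vectors and payloads.", [0.1, 0.9, 0.2]),
    ("Streamlit builds web apps in Python.", [0.1, 0.2, 0.9]),
]

query_vector = [0.2, 0.8, 0.1]  # stand-in for the embedded user query
results = top_k_chunks(query_vector, knowledge_base, k=2)

for score, text in results:
    print(f"{score:.3f}  {text}")
```

The texts in `results` are what would be passed, together with the original question, to the LLM in the next step.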

Hardware requirements

To deploy our models, we use an OCI setup based on the NVIDIA A10 GPU. In our scenario, we deployed a 7B-parameter model on a VM.GPU.A10.2 instance. We suggest using the Llama 7B model with the VM.GPU.A10.2 shape (two A10 GPUs, each with 24 GB of GPU memory).

Prerequisites

Set up the key prerequisites before you can proceed to run the distributed fine-tuning process on OCI Data Science:

- Configure a custom subnet with a security list to allow ingress into any port from the IPs originating within the CIDR block of the subnet to ensure that the hosts on the subnet can connect to each other during distributed training.

- Create an Object Storage bucket to save the documents that are ingested into the vector database.

- Set the policies to allow OCI Data Science resources to access OCI Object Storage buckets, networking, and others.

- Obtain an access token from Hugging Face to download the Llama2 model. To fine-tune the model, you must first access the pretrained model, which you can obtain from Meta or Hugging Face. In this example, we use the Hugging Face access token to download the pretrained model by setting the HUGGING_FACE_HUB_TOKEN environment variable.

- Create a log group and logs in the OCI Logging service to monitor the progress of the training.

- Go to OCI Logging and select Log Groups.

- Select an existing log group or create one.

- In the log group, create one predict log and one access log.

- Select Create custom log.

- Specify a name (predict|access) and select the log group you want to use.

- Under "Create agent configuration," select Add configuration later.

- Select Create agent configuration.

- Notebook session: Used to initiate the distributed training and to access the fine-tuned model

- Install the latest version of Oracle Accelerated Data Science (ADS) with the command, pip install oracle-ads[opctl] -U

Deploying the Llama2 model

Refer to the blog post, Deploy Llama 2 in OCI Data Science, which describes how to deploy a Llama2 model on an A10.2 instance.

To estimate model memory needs, Hugging Face offers a Model Memory Calculator. Furthermore, for insights into the fundamental calculations of memory requirements for transformers, EleutherAI has published an informative article on the subject. When setting up the model deployment, use the custom egress functionality so that the deployment can access the Qdrant database.
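
As a rough companion to the memory calculators mentioned above, the following back-of-the-envelope sketch estimates the memory needed for model weights alone. It assumes a nominal 7 billion parameters and ignores activations, the KV cache, and framework overhead, so treat the result as a lower bound rather than a sizing guarantee.

```python
def weight_memory_gib(num_params, bytes_per_param):
    """Approximate memory needed for model weights alone, in GiB."""
    return num_params * bytes_per_param / 1024**3


NOMINAL_PARAMS = 7e9  # assumption: "7B" taken at face value

fp16_gib = weight_memory_gib(NOMINAL_PARAMS, 2)  # 16-bit weights
fp32_gib = weight_memory_gib(NOMINAL_PARAMS, 4)  # 32-bit weights

print(f"16-bit weights: {fp16_gib:.1f} GiB")
print(f"32-bit weights: {fp32_gib:.1f} GiB")
```

Under these assumptions, 16-bit weights come to roughly 13 GiB, which fits within the 24 GB of a single A10 with room left for inference overhead, while 32-bit weights would exceed one GPU.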

Setting up the Qdrant database

To set up the Qdrant database, you can use the following options:

- Create a Docker container instance

- Use a Python client
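
Both options can be reached from the same Python client. The sketch below is a hedged illustration: the helper names `qdrant_client_kwargs` and `connect` are hypothetical, and it assumes the `qdrant-client` package is installed. With no URL, it falls back to the client's local in-memory mode, which is convenient for experiments; with a URL, it connects to a running server, such as a container started from the `qdrant/qdrant` image on the default port 6333.

```python
def qdrant_client_kwargs(url=None, api_key=None):
    """Build keyword arguments for qdrant_client.QdrantClient.

    With no URL, use the client's local in-memory mode.
    """
    if url is None:
        return {"location": ":memory:"}
    kwargs = {"url": url}
    if api_key:
        kwargs["api_key"] = api_key
    return kwargs


def connect(url=None, api_key=None):
    """Create a QdrantClient (requires `pip install qdrant-client`)."""
    from qdrant_client import QdrantClient  # imported lazily so the helper above has no dependency

    return QdrantClient(**qdrant_client_kwargs(url, api_key))


# Example usage (uncomment once qdrant-client is installed):
# client = connect()                          # in-memory, nothing persisted
# client = connect("http://localhost:6333")   # e.g. docker run -p 6333:6333 qdrant/qdrant
```

Data held in the in-memory mode disappears when the process exits, so use a container or a managed instance for anything the chatbot must retain.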

Initialize Qdrant with LangChain

Qdrant integrates smoothly with LangChain, and you can use Qdrant within LangChain with the VectorDBQA class. The first step is to compile all the documents that act as the foundational knowledge for our LLM. Imagine that we place these in a list called texts. Each item in this list is a string containing segments of paragraphs.

Qdrant initialization

The next task is to produce embeddings from these documents. To illustrate, we load a local embedding model through LangChain's LlamaCppEmbeddings wrapper:

    from langchain.vectorstores import Qdrant
    from langchain.embeddings import LlamaCppEmbeddings
    import qdrant_client

    # Load the embeddings model; model_folder_directory is the path to the local model
    embedding = LlamaCppEmbeddings(model_path=model_folder_directory, n_gpu_layers=1000)

    # Get your Qdrant URL and API key (placeholders; supply your own values)
    url = "<your-qdrant-url>"
    api_key = "<your-qdrant-api-key>"

    # Set up the Qdrant client
    client = qdrant_client.QdrantClient(url=url, api_key=api_key)

    # Wrap the collection as a LangChain vector store
    qdrant = Qdrant(
        client=client,
        collection_name="my_documents",
        embeddings=embedding,
    )

Qdrant upload to vector database

    # If adding for the first time, this method recreates the collection
    qdrant = Qdrant.from_texts(
        texts,  # list of document strings to embed and store in the vector DB
        embedding,
        url=url,
        api_key=api_key,
        collection_name="my_documents",
    )

    # Add further texts to the vector DB by calling the same object
    qdrant.add_texts(texts)  # list of document strings to embed and store in the vector DB

Qdrant retrieval from vector database

Qdrant provides several retrieval options, such as batch search, range search, geospatial search, and configurable distance metrics. Here, we run a similarity search based on the user's question.

    qdrant = Qdrant(
        client=client,
        collection_name="my_documents",
        embeddings=embedding,
    )

    # Similarity search; prompt holds the user's question as a string
    docs = qdrant.similarity_search(prompt)
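
When you also want to control how many chunks come back and drop weak matches, LangChain vector stores expose `similarity_search_with_score`. The helper below is a hypothetical sketch, not part of the original solution: the name `retrieve_context` and the `min_score` threshold are assumptions, and it presumes a metric where higher scores mean closer matches, as with cosine similarity in Qdrant.

```python
def retrieve_context(store, question, k=4, min_score=None):
    """Return the text of the top-k hits, optionally dropping weak matches.

    store must expose similarity_search_with_score(query, k=...), returning
    (document, score) pairs where each document has a page_content attribute.
    """
    hits = store.similarity_search_with_score(question, k=k)
    return [
        doc.page_content
        for doc, score in hits
        if min_score is None or score >= min_score
    ]


# Example usage with the qdrant vector store created earlier:
# context_chunks = retrieve_context(qdrant, "What is RAG?", k=3, min_score=0.5)
```

A suitable threshold depends on the embedding model and distance metric, so it is worth inspecting real scores before fixing a value.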

Setting up RAG

We use the prompt template and question-answering (QA) chain provided by LangChain to build the chatbot. The chain passes the retrieved context and the question directly to the Llama2-based model.

    from langchain.chains.question_answering import load_qa_chain
    from langchain.prompts.prompt import PromptTemplate

    template = """You are an assistant to the user. You are given some context below. Please answer the user's query in as much detail as possible.

    Context:\"""
    {context}
    \"""

    Question:\"""
    {question}
    \"""

    Answer:"""

    # Build the prompt object that the chain expects
    qa_prompt = PromptTemplate(template=template, input_variables=["context", "question"])

    # llm is the LangChain LLM wrapper for the deployed Llama2 endpoint, defined elsewhere
    chain = load_qa_chain(llm, chain_type="stuff", prompt=qa_prompt)

    # Retrieve docs from the Qdrant vector DB based on the user's question
    docs = qdrant.similarity_search(question)

    # The "stuff" chain fills {context} from input_documents
    answer = chain({"input_documents": docs, "question": question}, return_only_outputs=True)["output_text"]
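
To show what the "stuff" chain type does conceptually, the following plain-Python sketch joins the retrieved chunks into one context string and fills a prompt template. It is an approximation for illustration, not LangChain's implementation: the template wording, the `stuff_prompt` name, and the sample chunks are all assumptions.

```python
TEMPLATE = (
    "Answer the question using only the context below.\n\n"
    "Context:\n{context}\n\n"
    "Question:\n{question}\n\n"
    "Answer:"
)


def stuff_prompt(chunks, question, template=TEMPLATE, separator="\n\n"):
    """Join retrieved chunks into one context string and fill the template."""
    context = separator.join(chunks)
    return template.format(context=context, question=question)


# Hypothetical retrieved chunks standing in for the similarity search results.
prompt_text = stuff_prompt(
    ["Qdrant is a vector database.", "LangChain provides a Qdrant wrapper."],
    "What is Qdrant?",
)
print(prompt_text)
```

Because every chunk is placed into a single prompt, this approach works only while the combined context fits in the model's context window, which is one reason to keep the number of retrieved documents small.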

Hosting Streamlit application

To set up Compute instances and host the Streamlit application, follow the readme on GitHub.

Source: oracle.com
