A database is like a filing room in an office: it is where all the files and important information related to a project are stored. Every company needs a database to store and organize its information. Because that information can be very sensitive, we always have to be careful when accessing or manipulating it. Choosing the right database depends entirely on the purpose of the project, and over the years programmers and industry specialists have gravitated toward the databases that best fit their requirements.

Wednesday, September 29, 2021

Top 7 Databases You Must Know for Software Development Projects

Monday, September 27, 2021

Oracle Database Connection in Python

As part of programming, we sometimes need to work with databases, such as Oracle or MySQL, to store large amounts of information. In this article, we will discuss how to connect to an Oracle database from Python using the cx_Oracle module.

Oracle Database

To communicate with a database from a Python program, we need a connector. For Oracle, that connector is the cx_Oracle module.


To install cx_Oracle:

If you are using Python 3.6 or later on Linux, use the following command:

pip install cx-Oracle

If you are using Python 3.6 or later on Windows, use the following command:

py -m pip install cx-Oracle

This installs the cx_Oracle package. cx_Oracle also needs Oracle Client libraries (for example, Oracle Instant Client, or the libraries that come with a local Oracle Database installation) to be available on your machine.

◉ Import the database-specific module, for example:

import cx_Oracle

◉ connect(): Establish a connection between the Python program and the Oracle database by using the connect() function.

con = cx_Oracle.connect('username/password@localhost')

◉ cursor(): To execute SQL statements and retrieve their results, you need a cursor object, which is created from the connection.

cursor = con.cursor()

◉ execute() and executemany() methods:

cursor.execute(sqlquery): executes a single statement.

cursor.executemany(sqlquery, rows): executes the same statement once for each set of bind values in rows.
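cx_Oracle follows the Python DB API 2.0 (PEP 249), so the difference between execute() and executemany() can be tried out with Python's built-in sqlite3 module as a stand-in when no Oracle instance is at hand. The sketch below assumes an in-memory SQLite database and a simplified employee table; it uses named bind variables (:empid), a style both modules accept. With cx_Oracle, the connect() call and the SQL dialect would differ.

```python
import sqlite3

# In-memory SQLite database used as a stand-in for cx_Oracle.connect(...)
con = sqlite3.connect(":memory:")
cursor = con.cursor()
cursor.execute(
    "create table employee(empid integer primary key, name varchar(30), salary numeric(10, 2))"
)

insert_sql = "insert into employee (empid, name, salary) values (:empid, :name, :salary)"

# execute(): run one statement with one set of bind values
cursor.execute(insert_sql, {"empid": 1, "name": "Alice", "salary": 5000})

# executemany(): run the same statement once per set of bind values
rows = [
    {"empid": 2, "name": "Bob", "salary": 4200},
    {"empid": 3, "name": "Carol", "salary": 6100},
]
cursor.executemany(insert_sql, rows)
con.commit()

cursor.execute("select count(*) from employee")
total = cursor.fetchone()[0]
print(total)  # prints 3

cursor.close()
con.close()
```

executemany() sends one statement with many bind sets, which is generally preferable to calling execute() in a Python loop for bulk inserts.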

◉ commit(): DML (Data Manipulation Language) statements such as INSERT, UPDATE and DELETE run inside a transaction. You must call con.commit() for their changes to be made permanent and visible to other sessions.
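To see why commit() matters, the sketch below opens two connections to the same database file, one acting as the writing session and one as another user's session. It uses Python's sqlite3 module as a stand-in for Oracle (an assumption made so the example runs anywhere); the table and file names are hypothetical. The reader sees the inserted row only after the writer commits.

```python
import os
import sqlite3
import tempfile

# Two connections to the same SQLite file stand in for two database sessions
path = os.path.join(tempfile.mkdtemp(), "demo.db")
writer = sqlite3.connect(path)
reader = sqlite3.connect(path)

writer.execute("create table employee(empid integer primary key, name text)")
writer.commit()


def visible_rows():
    # fetchall() drains the result so the reader does not keep holding a lock
    return reader.execute("select count(*) from employee").fetchall()[0][0]


# The INSERT runs inside an open transaction until commit() is called
writer.execute("insert into employee values (:empid, :name)", {"empid": 1, "name": "Alice"})
before_commit = visible_rows()  # the insert is not committed yet

writer.commit()
after_commit = visible_rows()   # the change is now permanent and visible

print(before_commit, after_commit)  # prints 0 1
writer.close()
reader.close()
```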

◉ fetchone(), fetchmany(int), fetchall():

1. fetchone(): Fetches the next single row of the result set, or None when no rows remain.

2. fetchmany(int): Fetches up to the number of rows given by the argument.

3. fetchall(): Fetches all remaining rows of the result set.
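The three fetch methods share one position in the result set, so each call continues where the previous one stopped. The sketch below shows this with Python's sqlite3 module standing in for cx_Oracle (both follow the DB API, and both return rows as tuples by default); the five sample rows are made up for the example.

```python
import sqlite3

con = sqlite3.connect(":memory:")
cursor = con.cursor()
cursor.execute("create table employee(empid integer primary key, name text)")
cursor.executemany(
    "insert into employee values (:empid, :name)",
    [{"empid": i, "name": f"emp{i}"} for i in range(1, 6)],
)

cursor.execute("select empid, name from employee order by empid")
first = cursor.fetchone()       # (1, 'emp1'): the next row
next_two = cursor.fetchmany(2)  # [(2, 'emp2'), (3, 'emp3')]: up to 2 rows
rest = cursor.fetchall()        # [(4, 'emp4'), (5, 'emp5')]: all remaining rows
done = cursor.fetchone()        # None: the result set is exhausted

print(first, next_two, rest, done)
cursor.close()
con.close()
```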

◉ close(): When all the work is done, close the cursor and then the connection to release their resources.

cursor.close()

con.close()
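As an alternative to calling close() by hand, Python's contextlib.closing() calls close() on any object that has that method when the with block ends, even if an exception is raised. The sketch below uses sqlite3 as a stand-in connection (an assumption so it runs without Oracle); the same pattern applies to any DB API connection or cursor.

```python
import sqlite3
from contextlib import closing

# closing() guarantees .close() is called on exit, even on errors
with closing(sqlite3.connect(":memory:")) as con:
    with closing(con.cursor()) as cursor:
        cursor.execute("select 1")
        value = cursor.fetchone()[0]

print(value)  # prints 1
```

The cursor is closed before the connection because the inner with block exits first.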

Execution of SQL statements:

1. Creation of table

# importing module
import cx_Oracle

# Create a table in Oracle database
# Initialize both names so the finally block works even if connect() fails
con = None
cursor = None
try:
    con = cx_Oracle.connect('scott/tiger@localhost:1521/xe')
    print(con.version)

    # Now execute the SQL query
    cursor = con.cursor()

    # Creating a table employee
    cursor.execute("create table employee(empid integer primary key, name varchar2(30), salary number(10, 2))")
    print("Table created successfully")

except cx_Oracle.DatabaseError as e:
    print("There is a problem with Oracle", e)

# The finally block runs whether or not an error occurred,
# so the cursor and connection are always closed
finally:
    if cursor:
        cursor.close()
    if con:
        con.close()

Friday, September 24, 2021

Difference between Centralized Database and Distributed Database

1. Centralized Database:

A centralized database is a type of database that is stored, located and maintained at a single location only. It is modified and managed from that location itself, which is typically a single database system or centralized computer system. Users reach it over a network connection (LAN, WAN, etc.). Centralized databases are mainly used by institutions and organizations.

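2. Distributed Database:

A distributed database consists of multiple databases that are connected with each other and spread across different physical locations.

Difference between Centralized database and Distributed database: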

| Centralized database | Distributed database |
|---|---|
| Stored, located and maintained at a single location only. | Consists of multiple connected databases spread across different physical locations. |
| Data access time is higher when many users access the database. | Data access time is lower when many users access the database. |
| Management, modification and backup are easier because all the data is in one place. | Management, modification and backup are more difficult because the data is spread across different physical locations. |
| Provides users with a uniform and complete view of the data. | Because the data is spread across locations, providing a uniform view to users is difficult. |
| Offers higher data consistency than a distributed database. | Data may be replicated across sites, so data consistency is lower. |
| Users cannot access the data if the database fails. | If one database fails, users can still access the others. |
| Less costly. | More expensive. |
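The availability row of the table can be illustrated with a toy model. The sketch below is not how any particular product implements replication; the Site class, the read() function and the site names are all hypothetical. A centralized setup has one site, so a failure makes the data unreachable, while a distributed setup can fall back to another copy. The same redundancy explains the consistency row: every copy has to be kept in sync when data changes.

```python
from dataclasses import dataclass, field


@dataclass
class Site:
    """A hypothetical database site holding a copy of the data."""
    name: str
    up: bool = True
    data: dict = field(default_factory=dict)


def read(sites, key):
    # Try each site in turn; the first reachable one answers
    for site in sites:
        if site.up:
            return site.name, site.data.get(key)
    raise ConnectionError("no database site is reachable")


record = {"emp1": "Alice"}
centralized = [Site("hq", data=dict(record))]
distributed = [Site("london", data=dict(record)), Site("mumbai", data=dict(record))]

# Simulate a failure at one site in each setup
centralized[0].up = False
distributed[0].up = False

print(read(distributed, "emp1"))  # ('mumbai', 'Alice')

try:
    read(centralized, "emp1")
    central_failed = False
except ConnectionError:
    central_failed = True
print("centralized read failed:", central_failed)
```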

Wednesday, September 22, 2021

Fine grained Network Access Control for Oracle Autonomous Database on Exadata Cloud@Customer

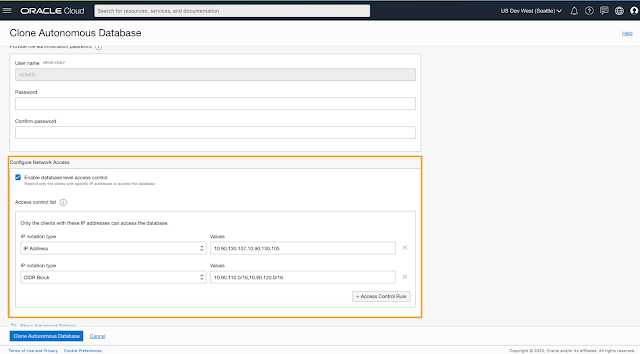

Network perimeter security is the primary method for securing cloud resources. This is generally done by creating virtual networks/subnets, security lists and firewalls. For Oracle multitenant databases deployed on Exadata Cloud@Customer, an additional layer of network security is now available: Access Control Lists (ACLs) at the pluggable database level. While the Exadata Infrastructure may be deployed in a private customer subnet, with access governed by the security rules associated with that subnet, each ADB or pluggable database can also have its own set of access rules. You define an ACL when provisioning an ADB, or at a later stage if desired. An ACL can consist of one or more IP addresses or a CIDR block.
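The service enforces ACLs itself; the sketch below only illustrates the matching rule described above, where an entry is either a single IP address or a CIDR block. It uses Python's standard ipaddress module, and the function name and sample addresses are hypothetical.

```python
import ipaddress


def is_allowed(client_ip, acl):
    """Return True if client_ip matches any ACL entry (an IP address or a CIDR block)."""
    addr = ipaddress.ip_address(client_ip)
    for entry in acl:
        # ip_network() treats a bare address as a single-host network (/32 for IPv4)
        if addr in ipaddress.ip_network(entry, strict=False):
            return True
    return False


acl = ["10.0.1.25", "192.168.10.0/24"]
print(is_allowed("10.0.1.25", acl))      # True: exact address match
print(is_allowed("192.168.10.77", acl))  # True: inside the CIDR block
print(is_allowed("10.0.2.8", acl))       # False: not listed
```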


Typically, an Autonomous Container Database (ACD) hosts multiple Autonomous Databases (ADBs), providing a higher degree of consolidation and cost efficiency by leveraging service features such as online auto-scaling. By defining an Access Control List (ACL) for each ADB, you gain much finer control over which specific SQL clients or users can access each database.

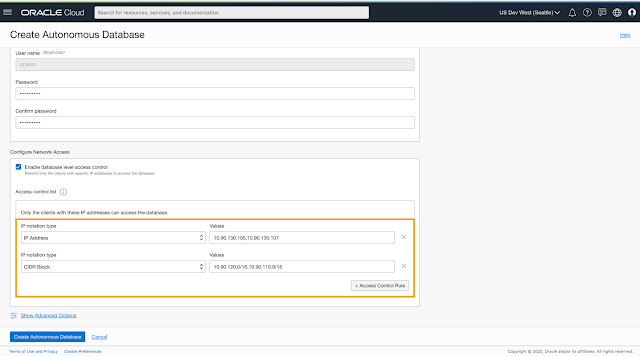

1. Set up ACLs when provisioning your Autonomous Database

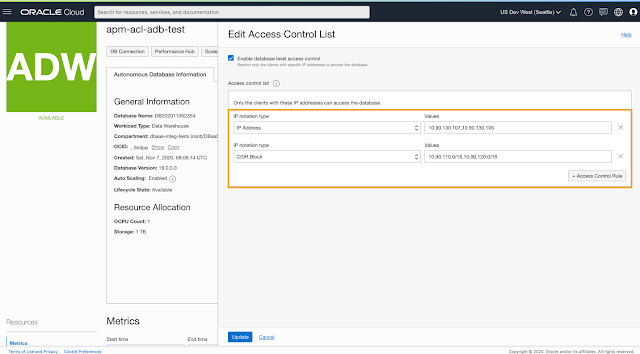

2. Set up or modify ACLs for existing ADBs

Monday, September 20, 2021

Multitenant : Unplug/Plugin PDB Upgrade to Oracle Database 21c (AutoUpgrade)

This article provides an overview of upgrading an existing PDB to Oracle 21c on the same server using AutoUpgrade Unplug/Plugin. Upgrades can be very complicated, so you must always read the upgrade manual, and test thoroughly before considering an upgrade of a production environment.

◉ Assumptions

This article is focused on upgrading a pluggable database using unplug/plugin. If you want to upgrade a CDB and all PDBs directly, you need to follow this article.

Multitenant : Upgrade to Oracle Database 21c (AutoUpgrade)

This article assumes your source database is of a version supported for direct upgrade to 21c: 19c, 18c or 12.2.

In this example we are doing an upgrade from 19c multitenant to 21c. The process is very similar for all supported versions.


It's important to have backups of everything before you start! Some of these steps are destructive, and if something goes wrong you have no alternative but to restore from backups and start again.

◉ Prerequisites

Make sure all the OS prerequisites are in place. On Oracle Linux you can do this by installing the 21c preinstall package. It probably makes sense to update the remaining packages as well.

yum install -y oracle-database-preinstall-21c

yum update -y

◉ Install 21c Software

You can read about the installation process in more detail here (OL7, OL8), but for this example we'll keep it brief. The following commands will perform a silent installation of the 21c software.

export ORACLE_HOME=$ORACLE_BASE/product/21.0.0/dbhome_1
export SOFTWARE_DIR=/vagrant/software
export ORA_INVENTORY=/u01/app/oraInventory
mkdir -p ${ORACLE_HOME}
cd $ORACLE_HOME
/bin/unzip -oq ${SOFTWARE_DIR}/LINUX.X64_213000_db_home.zip

./runInstaller -ignorePrereq -waitforcompletion -silent \
-responseFile ${ORACLE_HOME}/install/response/db_install.rsp \
oracle.install.option=INSTALL_DB_SWONLY \
ORACLE_HOSTNAME=${ORACLE_HOSTNAME} \
UNIX_GROUP_NAME=oinstall \
INVENTORY_LOCATION=${ORA_INVENTORY} \
SELECTED_LANGUAGES=en,en_GB \
ORACLE_HOME=${ORACLE_HOME} \
ORACLE_BASE=${ORACLE_BASE} \
oracle.install.db.InstallEdition=EE \
oracle.install.db.OSDBA_GROUP=dba \
oracle.install.db.OSBACKUPDBA_GROUP=dba \
oracle.install.db.OSDGDBA_GROUP=dba \
oracle.install.db.OSKMDBA_GROUP=dba \
oracle.install.db.OSRACDBA_GROUP=dba \
SECURITY_UPDATES_VIA_MYORACLESUPPORT=false \
DECLINE_SECURITY_UPDATES=true

Run the root scripts when prompted.

As a root user, execute the following script(s):

1. /u01/app/oracle/product/21.0.0/dbhome_1/root.sh

At this point you should also patch the new Oracle home, but in this case we will forgo that step to keep things simple.

◉ Create 21c Container Database

We need to create a container database (CDB) as the destination for the resulting PDB. The following example creates a CDB called "cdb2" with no PDBs.

#dbca -silent -deleteDatabase -sourceDB cdb2 -sysDBAUserName sys -sysDBAPassword SysPassword1

dbca -silent -createDatabase \
-templateName General_Purpose.dbc \
-gdbname cdb2 -sid cdb2 -responseFile NO_VALUE \
-characterSet AL32UTF8 \
-sysPassword SysPassword1 \
-systemPassword SysPassword1 \
-createAsContainerDatabase true \
-numberOfPDBs 0 \
-databaseType MULTIPURPOSE \
-memoryMgmtType auto_sga \
-totalMemory 1536 \
-storageType FS \
-datafileDestination "/u02/oradata/" \
-redoLogFileSize 50 \
-emConfiguration NONE \
-ignorePreReqs

Prepare for db operation

10% complete

Copying database files

40% complete

Creating and starting Oracle instance

42% complete

46% complete

52% complete

56% complete

60% complete

Completing Database Creation

66% complete

69% complete

70% complete

Executing Post Configuration Actions

100% complete

Database creation complete. For details check the logfiles at:

/u01/app/oracle/cfgtoollogs/dbca/cdb2.

Database Information:

Global Database Name:cdb2

System Identifier(SID):cdb2

Look at the log file "/u01/app/oracle/cfgtoollogs/dbca/cdb2/cdb2.log" for further details.

$

We enable the fast recovery area, Oracle Managed Files (OMF) and archivelog mode.

sqlplus / as sysdba <<EOF

alter system set db_recovery_file_dest_size=40g;

alter system set db_recovery_file_dest='/u01/app/oracle/fast_recovery_area';

alter system set db_create_file_dest = '/u02/oradata';

shutdown immediate;

startup mount;

alter database archivelog;

alter database open;

exit;

EOF

You will need to adjust the instance parameters to make sure the container can cope with the demands of the final PDB, but for this example we will ignore that.

◉ Run AutoUpgrade Analyze

Download the latest "autoupgrade.jar" file from My Oracle Support (MOS) note 2485457.1. If you don't have MOS access, you can skip the next step.

cd $ORACLE_BASE/product/21.0.0/dbhome_1/rdbms/admin

mv autoupgrade.jar autoupgrade.jar.`date +"%Y"-"%m"-"%d"`

cp /tmp/autoupgrade.jar .

Make sure you are using the original Oracle home before running the "autoupgrade.jar" commands.

export ORACLE_SID=cdb1

export ORAENV_ASK=NO

. oraenv

export ORAENV_ASK=YES

export ORACLE_HOME=$ORACLE_BASE/product/19.0.0/dbhome_1

Generate a sample configuration file for an unplug/plugin upgrade.

$ORACLE_BASE/product/21.0.0/dbhome_1/jdk/bin/java \
-jar $ORACLE_BASE/product/21.0.0/dbhome_1/rdbms/admin/autoupgrade.jar \
-create_sample_file config /tmp/config.txt unplug

Edit the resulting "/tmp/config.txt" file, setting the details for your required upgrade. In this case we used the following parameters. We are only upgrading a single PDB, but if we had multiple we could use a comma-separated list of PDBs.

upg1.log_dir=/u01/app/oracle/cfgtoollogs/autoupgrade/pdb1

upg1.sid=cdb1

upg1.source_home=/u01/app/oracle/product/19.0.0/dbhome_1

upg1.target_cdb=cdb2

upg1.target_home=/u01/app/oracle/product/21.0.0/dbhome_1

upg1.pdbs=pdb1 # Comma delimited list of pdb names that will be upgraded and moved to the target CDB

#upg1.target_pdb_name.mypdb1=altpdb1 # Optional. Name of the PDB to be created on the target CDB

#upg1.target_pdb_copy_option.mypdb1=file_name_convert=('mypdb1', 'altpdb1') # Optional. file_name_convert option used when creating the PDB on the target CDB

#upg1.target_pdb_name.mypdb2=altpdb2

upg1.start_time=NOW # Optional. [NOW | +XhYm (X hours, Y minutes after launch) | dd/mm/yyyy hh:mm:ss]

upg1.upgrade_node=localhost # Optional. To find out the name of your node, run the hostname utility. Default is 'localhost'

upg1.run_utlrp=yes # Optional. Whether or not to run utlrp after upgrade

upg1.timezone_upg=yes # Optional. Whether or not to run the timezone upgrade

upg1.target_version=21 # Oracle version of the target ORACLE_HOME. Only required when the target Oracle database version is 12.2
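The configuration above uses one prefix.parameter=value entry per line, with the prefix (upg1) grouping the settings for one upgrade job. Purely to illustrate that layout, the hypothetical Python helper below reads such lines into a dictionary and splits the comma-separated pdbs value. The second PDB (pdb2) is an assumption, and AutoUpgrade does its own parsing, which this sketch does not reproduce.

```python
def parse_autoupgrade_config(text):
    """Hypothetical helper: read prefix.param=value lines into nested dicts.

    Full-line comments (starting with '#') are skipped and inline
    comments (' #' onwards) are stripped.
    """
    jobs = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        line = line.split(" #", 1)[0].strip()
        key, _, value = line.partition("=")       # split on the first '=' only
        prefix, _, param = key.partition(".")     # 'upg1' / 'pdbs'
        jobs.setdefault(prefix, {})[param] = value.strip()
    return jobs


sample = """
upg1.sid=cdb1
upg1.target_cdb=cdb2
upg1.pdbs=pdb1,pdb2 # Comma delimited list of pdb names
#upg1.target_pdb_name.mypdb1=altpdb1
upg1.start_time=NOW
"""

config = parse_autoupgrade_config(sample)
pdbs = config["upg1"]["pdbs"].split(",")
print(config["upg1"]["target_cdb"], pdbs)  # prints cdb2 ['pdb1', 'pdb2']
```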

Run the upgrade in analyze mode to see if there are any expected issues with the upgrade.

$ORACLE_BASE/product/21.0.0/dbhome_1/jdk/bin/java \
-jar $ORACLE_BASE/product/21.0.0/dbhome_1/rdbms/admin/autoupgrade.jar \
-config /tmp/config.txt -mode analyze

AutoUpgrade tool launched with default options

Processing config file ...

+--------------------------------+

| Starting AutoUpgrade execution |

+--------------------------------+

1 databases will be analyzed

Type 'help' to list console commands

upg> Job 100 completed

------------------- Final Summary --------------------

Number of databases [ 1 ]

Jobs finished [1]

Jobs failed [0]

Jobs pending [0]

Please check the summary report at:

/u01/app/oracle/cfgtoollogs/autoupgrade/cfgtoollogs/upgrade/auto/status/status.html

/u01/app/oracle/cfgtoollogs/autoupgrade/cfgtoollogs/upgrade/auto/status/status.log

The output files list the status of the analysis, and any manual intervention that is needed before an upgrade can take place. The output of the "status.log" file is shown below. The detail section gives a file containing the details of the steps in the upgrade process. If you've seen the output from the "preupgrade.jar", it will look familiar. If there are any required manual actions in the main log file, the detail file should give more information.

==========================================

Autoupgrade Summary Report

==========================================

[Date] Sun Aug 22 14:49:01 UTC 2021

[Number of Jobs] 1

==========================================

[Job ID] 100

==========================================

[DB Name] cdb1

[Version Before Upgrade] 19.12.0.0.0

[Version After Upgrade] 21.3.0.0.0

------------------------------------------

[Stage Name] PRECHECKS

[Status] SUCCESS

[Start Time] 2021-08-22 14:48:44

[Duration] 0:00:16

[Log Directory] /u01/app/oracle/cfgtoollogs/autoupgrade/pdb1/cdb1/100/prechecks

[Detail] /u01/app/oracle/cfgtoollogs/autoupgrade/pdb1/cdb1/100/prechecks/cdb1_preupgrade.log

Precheck passed and no manual intervention needed

------------------------------------------

The log directory contains a number of files, including an HTML version of the detailed report. It contains the same information as the log file, but some people may prefer reading this format.

Once any required manual fixups are complete, run the analysis again and you should see a clean analysis report.

◉ Run AutoUpgrade Deploy

We are now ready to run the database upgrade with the following command. The upgrade takes some time, so you will be left at the "upg" prompt until it's complete.

$ORACLE_BASE/product/21.0.0/dbhome_1/jdk/bin/java \
-jar $ORACLE_BASE/product/21.0.0/dbhome_1/rdbms/admin/autoupgrade.jar \
-config /tmp/config.txt -mode deploy

AutoUpgrade tool launched with default options

Processing config file ...

+--------------------------------+

| Starting AutoUpgrade execution |

+--------------------------------+

1 databases will be processed

Type 'help' to list console commands

upg>

Use the "help" command to see the command line options. We can list the current jobs and check on the job status using the following commands.

upg> lsj

+----+-------+---------+---------+-------+--------------+--------+----------------+

|Job#|DB_NAME| STAGE|OPERATION| STATUS| START_TIME| UPDATED| MESSAGE|

+----+-------+---------+---------+-------+--------------+--------+----------------+

| 101| cdb1|DBUPGRADE|EXECUTING|RUNNING|21/08/22 14:51|14:56:28|22%Upgraded PDB1|

+----+-------+---------+---------+-------+--------------+--------+----------------+

Total jobs 1

upg> status -job 101

Progress

-----------------------------------

Start time: 21/08/22 14:51

Elapsed (min): 5

End time: N/A

Last update: 2021-08-22T14:56:28.019

Stage: DBUPGRADE

Operation: EXECUTING

Status: RUNNING

Pending stages: 7

Stage summary:

SETUP <1 min

PREUPGRADE <1 min

PRECHECKS <1 min

PREFIXUPS 1 min

DRAIN <1 min

DBUPGRADE 3 min (IN PROGRESS)

Job Logs Locations

-----------------------------------

Logs Base: /u01/app/oracle/cfgtoollogs/autoupgrade/pdb1/cdb1

Job logs: /u01/app/oracle/cfgtoollogs/autoupgrade/pdb1/cdb1/101

Stage logs: /u01/app/oracle/cfgtoollogs/autoupgrade/pdb1/cdb1/101/dbupgrade

TimeZone: /u01/app/oracle/cfgtoollogs/autoupgrade/pdb1/cdb1/temp

Additional information

-----------------------------------

Details:

[Upgrading] is [22%] completed for [cdb1-pdb1]

+---------+-------------+

|CONTAINER| PERCENTAGE|

+---------+-------------+

| PDB1|UPGRADE [22%]|

+---------+-------------+

Error Details:

None

upg>

Once the job completes a summary message is displayed.

upg> Job 101 completed

------------------- Final Summary --------------------

Number of databases [ 1 ]

Jobs finished [1]

Jobs failed [0]

Jobs pending [0]

Please check the summary report at:

/u01/app/oracle/cfgtoollogs/autoupgrade/cfgtoollogs/upgrade/auto/status/status.html

/u01/app/oracle/cfgtoollogs/autoupgrade/cfgtoollogs/upgrade/auto/status/status.log

The "status.log" contains the top-level information about the upgrade process.

==========================================

Autoupgrade Summary Report

==========================================

[Date] Sun Aug 22 15:15:50 UTC 2021

[Number of Jobs] 1

==========================================

[Job ID] 101

==========================================

[DB Name] cdb1

[Version Before Upgrade] 19.12.0.0.0

[Version After Upgrade] 21.3.0.0.0

------------------------------------------

[Stage Name] PREUPGRADE

[Status] SUCCESS

[Start Time] 2021-08-22 14:51:03

[Duration] 0:00:00

[Log Directory] /u01/app/oracle/cfgtoollogs/autoupgrade/pdb1/cdb1/101/preupgrade

------------------------------------------

[Stage Name] PRECHECKS

[Status] SUCCESS

[Start Time] 2021-08-22 14:51:03

[Duration] 0:00:20

[Log Directory] /u01/app/oracle/cfgtoollogs/autoupgrade/pdb1/cdb1/101/prechecks

[Detail] /u01/app/oracle/cfgtoollogs/autoupgrade/pdb1/cdb1/101/prechecks/cdb1_preupgrade.log

Precheck passed and no manual intervention needed

------------------------------------------

[Stage Name] PREFIXUPS

[Status] SUCCESS

[Start Time] 2021-08-22 14:51:24

[Duration] 0:01:43

[Log Directory] /u01/app/oracle/cfgtoollogs/autoupgrade/pdb1/cdb1/101/prefixups

------------------------------------------

[Stage Name] DRAIN

[Status] SUCCESS

[Start Time] 2021-08-22 14:53:08

[Duration] 0:00:12

[Log Directory] /u01/app/oracle/cfgtoollogs/autoupgrade/pdb1/cdb1/101/drain

------------------------------------------

[Stage Name] DBUPGRADE

[Status] SUCCESS

[Start Time] 2021-08-22 14:53:20

[Duration] 0:15:56

[Log Directory] /u01/app/oracle/cfgtoollogs/autoupgrade/pdb1/cdb1/101/dbupgrade

------------------------------------------

[Stage Name] NONCDBTOPDBXY

[Status] SUCCESS

[Start Time] 2021-08-22 15:09:16

[Duration] 0:00:00

------------------------------------------

[Stage Name] POSTCHECKS

[Status] SUCCESS

[Start Time] 2021-08-22 15:09:16

[Duration] 0:00:09

[Log Directory] /u01/app/oracle/cfgtoollogs/autoupgrade/pdb1/cdb1/101/postchecks

[Detail] /u01/app/oracle/cfgtoollogs/autoupgrade/pdb1/cdb1/101/postchecks/cdb1_postupgrade.log

------------------------------------------

[Stage Name] POSTFIXUPS

[Status] SUCCESS

[Start Time] 2021-08-22 15:09:26

[Duration] 0:06:23

[Log Directory] /u01/app/oracle/cfgtoollogs/autoupgrade/pdb1/cdb1/101/postfixups

------------------------------------------

[Stage Name] POSTUPGRADE

[Status] SUCCESS

[Start Time] 2021-08-22 15:15:49

[Duration] 0:00:00

[Log Directory] /u01/app/oracle/cfgtoollogs/autoupgrade/pdb1/cdb1/101/postupgrade

------------------------------------------

[Stage Name] SYSUPDATES

[Status] SUCCESS

[Start Time] 2021-08-22 15:15:50

[Duration] 0:00:00

[Log Directory] /u01/app/oracle/cfgtoollogs/autoupgrade/pdb1/cdb1/101/sysupdates

------------------------------------------

Upgrade Summary: /u01/app/oracle/cfgtoollogs/autoupgrade/pdb1/cdb1/101/dbupgrade/upg_summary.log
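With many stages, scanning status.log by eye gets tedious. The hypothetical helper below is a minimal sketch, assuming the [Stage Name]/[Status] layout shown in the report above, that pairs each stage with its status and lists any stage not reporting SUCCESS. It is not part of AutoUpgrade, and the sample text is abbreviated from the report.

```python
import re


def stage_statuses(report):
    """Hypothetical helper: pair each [Stage Name] with the [Status] that follows it."""
    pairs = re.findall(r"\[Stage Name\]\s*(\S+)\s*\[Status\]\s*(\S+)", report)
    return dict(pairs)


report = """
[Stage Name] PRECHECKS
[Status] SUCCESS
[Start Time] 2021-08-22 14:51:03
[Stage Name] DBUPGRADE
[Status] SUCCESS
[Stage Name] POSTFIXUPS
[Status] SUCCESS
"""

statuses = stage_statuses(report)
failed = [name for name, status in statuses.items() if status != "SUCCESS"]
print(statuses)
print("failed stages:", failed)  # prints failed stages: []
```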

Check out the "upg_summary.log" file, and if anything looks wrong, check out the associated log files. At this point I do a shutdown and startup to make sure everything is running in the correct mode.

export ORACLE_HOME=$ORACLE_BASE/product/21.0.0/dbhome_1

export PATH=$ORACLE_HOME/bin:$PATH

export ORACLE_SID=cdb2

sqlplus / as sysdba <<EOF

alter pluggable database PDB1 save state;

shutdown immediate;

startup;

show pdbs

exit;

EOF

◉ Final Steps

If you've finished with the 19c CDB1 instance, you can remove it.

export ORACLE_HOME=/u01/app/oracle/product/19.0.0/dbhome_1

export PATH=$ORACLE_HOME/bin:$PATH

export ORACLE_SID=cdb1

dbca -silent -deleteDatabase -sourceDB cdb1 -sysDBAUserName sys -sysDBAPassword SysPassword1

Edit the "/etc/oratab" file and any environment files as required.

◉ Appendix

The following commands are used to rebuild the databases if you want to rerun the examples.

Rebuild the 21c CDB2 multitenant database with no PDBs.

export ORACLE_HOME=/u01/app/oracle/product/21.0.0/dbhome_1

export PATH=$ORACLE_HOME/bin:$PATH

export ORACLE_SID=cdb2

#dbca -silent -deleteDatabase -sourceDB cdb2 -sysDBAUserName sys -sysDBAPassword SysPassword1

dbca -silent -createDatabase \
-templateName General_Purpose.dbc \
-gdbname cdb2 -sid cdb2 -responseFile NO_VALUE \
-characterSet AL32UTF8 \
-sysPassword SysPassword1 \
-systemPassword SysPassword1 \
-createAsContainerDatabase true \
-numberOfPDBs 0 \
-databaseType MULTIPURPOSE \
-memoryMgmtType auto_sga \
-totalMemory 1536 \
-storageType FS \
-datafileDestination "/u02/oradata/" \
-redoLogFileSize 50 \
-emConfiguration NONE \
-ignorePreReqs

sqlplus / as sysdba <<EOF

alter system set db_recovery_file_dest_size=40g;

alter system set db_recovery_file_dest='/u01/app/oracle/fast_recovery_area';

alter system set db_create_file_dest = '/u02/oradata';

shutdown immediate;

startup mount;

alter database archivelog;

alter database open;

exit;

EOF

Rebuild the 19c CDB1 multitenant database with one PDB.

export ORACLE_HOME=/u01/app/oracle/product/19.0.0/dbhome_1

export PATH=$ORACLE_HOME/bin:$PATH

export ORACLE_SID=cdb1

#dbca -silent -deleteDatabase -sourceDB cdb1 -sysDBAUserName sys -sysDBAPassword SysPassword1

dbca -silent -createDatabase \
-templateName General_Purpose.dbc \
-gdbname cdb1 -sid cdb1 -responseFile NO_VALUE \
-characterSet AL32UTF8 \
-sysPassword SysPassword1 \
-systemPassword SysPassword1 \
-createAsContainerDatabase true \
-numberOfPDBs 1 \
-pdbName pdb1 \
-pdbAdminPassword SysPassword1 \
-databaseType MULTIPURPOSE \
-memoryMgmtType auto_sga \
-totalMemory 1536 \
-storageType FS \
-datafileDestination "/u02/oradata/" \
-redoLogFileSize 50 \
-emConfiguration NONE \
-ignorePreReqs

sqlplus / as sysdba <<EOF

alter pluggable database pdb1 save state;

alter system set db_recovery_file_dest_size=40g;

alter system set db_recovery_file_dest='/u01/app/oracle/fast_recovery_area';

alter system set db_create_file_dest = '/u02/oradata';

shutdown immediate;

startup mount;

alter database archivelog;

alter database open;

exit;

EOF

Source: oracle-base.com

Friday, September 17, 2021

Does your document database provide these 3 critical capabilities? Ensure you future-proof your applications today!

Most businesses operate in a dynamic environment with rapidly changing customer expectations and preferences. This forces businesses to be creative in delivering value to their customers and partners, shipping applications or application programming interfaces (APIs) with new features and capabilities daily or even hourly.


Consider a typical airline mobile app: it lets users manage reservations, get flight updates and keep track of membership benefits. Yet not long ago, generating electronic boarding passes, selecting seats and tracking bags in real time used to be offline business processes.

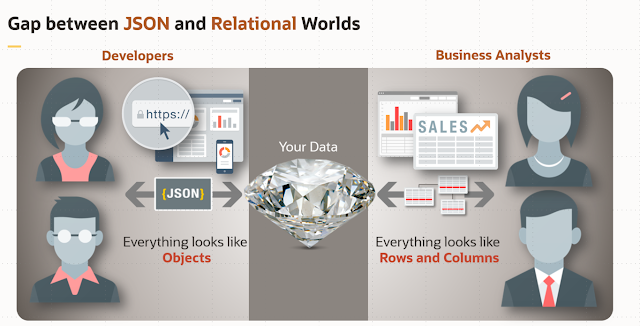

Businesses increasingly depend on developers and IT teams with an established continuous delivery process to meet customer expectations. The unfortunate reality is that business requirements for applications are often vague, and it is not unusual for business leaders to demand the ability to define or change requirements frequently and at short notice. This can cause a lot of frustration between business leaders and development teams. Enter JSON.

JSON to the rescue

According to Wikipedia, JSON (JavaScript Object Notation) is a lightweight data-interchange format for web applications. It is programming-language independent, easy for humans to read and write, and easy for machines to parse and generate. These properties make JSON an ideal data-interchange language. JSON is derived from JavaScript, the most popular language among developers for nine years in a row according to the 2021 Stack Overflow survey.
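To make the "easy for machines to parse and generate" point concrete, the sketch below uses Python's standard json module on a hypothetical booking document for the airline app described earlier. It also shows why JSON suits changing requirements: a new field (seat) can be added to new documents, while code reading older documents falls back gracefully when the field is missing. The field names are made up for the example.

```python
import json

# A hypothetical booking document from an airline app
booking = {"ref": "ABC123", "passenger": "A. Smith", "flight": "XY100"}

# Serialize to JSON text and parse it back; any language with a JSON library can do this
text = json.dumps(booking)
parsed = json.loads(text)

# A new requirement (seat selection) simply adds a field to new documents
updated = dict(parsed, seat="14C")

# Code reading older documents can fall back when the field is absent
seat_of_old = parsed.get("seat", "not selected")

print(json.dumps(updated, sort_keys=True))
print(seat_of_old)  # prints not selected
```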

Evolution of document databases

In pursuit of ideal data persistence

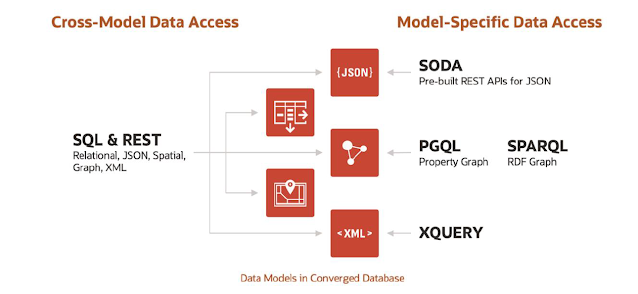

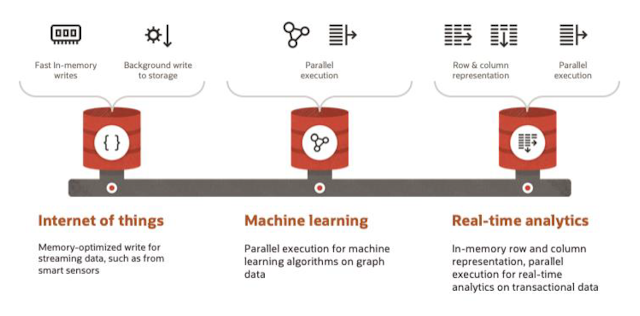

Optimized and fine tuned for JSON workloads

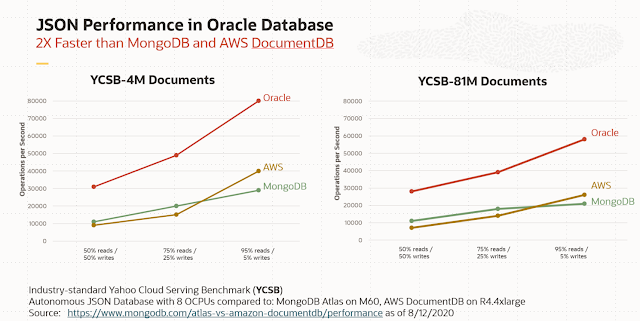

2X faster and 35% cheaper than MongoDB Atlas and AWS DocumentDB

Simplified data platform for current and future application needs

Wednesday, September 15, 2021

Metrics and Performance Hub for ExaCS and DBCS

We are pleased to announce the General Availability (GA) of the Metrics and Performance Hub features for Exadata Cloud Service and Database Cloud Service in all OCI commercial regions. These capabilities let customers monitor their database instances and get comprehensive database performance analysis and management natively within the OCI console for ExaCS and DBCS.

Key Customer Benefits

◉ Database metrics help users monitor useful quantitative data, such as CPU and storage utilization, the number of successful and failed database logon and connection attempts, database operations, SQL queries and transactions. Users can use metrics data to diagnose and troubleshoot problems with their databases.


◉ Performance Hub provides in-depth diagnostics capability for doing Oracle database performance analysis and tuning. With this capability, users can have a consolidated view of real-time and historical performance data.

In order to use Metrics and Performance Hub features for ExaCS and DBCS, users need to enable Database Management Service on their databases. There are two management options (Basic and Full) to choose from. Details about Management options are here.

OCI Console Experience for ExaCS and DBCS

From the Bare Metal, VM, and Exadata service home page, navigate to Exadata VM Cluster → Database (for ExaCS) or to DB Systems → Database (for DBCS). On the Database Details page, click 'Enable' next to the Database Management label. Provide the required details and choose between Full Management and Basic Management. You can also edit or disable the Database Management option later.