Logs are a powerful tool for understanding the behavior of any service or application. After a machine learning (ML) model is deployed, logs are essential for tracking every interaction with the published model, so that you can see who is invoking it and which predictions it returned.

This article shows how to collect the logs generated by a Data Science Model Deployment, copy them to an Autonomous Data Warehouse, and analyze them using Oracle Analytics Cloud.

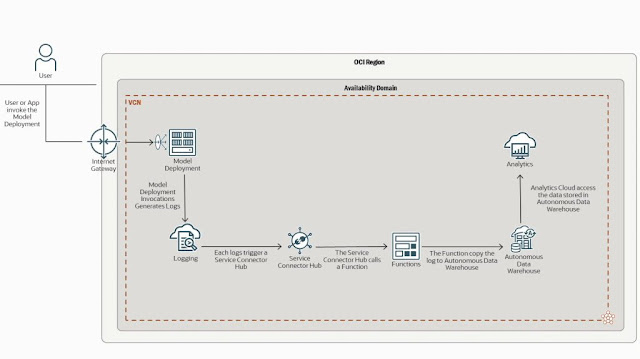

At the end of this article you will have deployed the architecture below:

Architecture for Deeper Model Deployment Logs Analysis

To build this architecture, you first need to train and deploy an ML model. For that you will use an OCI Data Science Notebook. If you are unsure how to create an OCI Data Science Notebook, take a look at this documentation.

Model Deployment

OCI Data Science covers the complete machine learning model life cycle, including model deployment. OCI Data Science Model Deployment is infrastructure fully managed by OCI that puts your model in production and exposes it as a web application HTTP endpoint serving predictions in real time.

OCI Data Science Model Deployment Flow

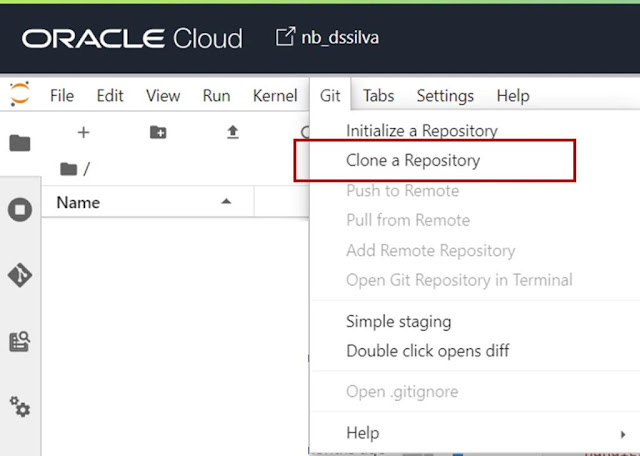

You will use a prepared notebook to complete all the steps detailed in the flow above. This notebook is in a GitHub repository and will be cloned to your notebook session using the native Git interface. To do that, open your notebook session, click Git, then select the option Clone Repository.

Git Clone Repository Menu

Enter the following URL https://github.com/dougsouzars/Deeper-Model-Deployment-Logs-Analysis.git and click Clone. All the files in this repository will be copied to your environment in a new folder. Open the created folder and double-click the file Deeper Model Deployment Logs Analysis.ipynb to open it.

Ensure you are using the conda environment generalml_p37_cpu_v1. If you are not familiar with conda environments, take a look at this article and install the conda environment General Machine Learning for CPUs on Python 3.7. Then, in the menu, click Run, and click Run All Cells.

Run All Cells

The notebook should take about 15 minutes to complete the Model Deployment process. After that, the last cell runs a loop that invokes the Model Deployment endpoint to generate logs for further analysis.

Loop for Model Deployment invocation
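
The invocation loop in the notebook's last cell can be pictured roughly as below. This is a minimal sketch, not the notebook's actual code: `invoke_many`, the `send` callable, `fake_send` and the sample payloads are hypothetical names introduced for illustration. In a real notebook session `send` would wrap an authenticated HTTP POST to your Model Deployment endpoint; here a stub stands in for it so the sketch runs without network access.

```python
import json
import time
from typing import Callable, Iterable, List


def invoke_many(send: Callable[[str], str],
                payloads: Iterable[dict],
                pause_seconds: float = 0.0) -> List[dict]:
    """Send each payload to the endpoint and collect the decoded responses.

    `send` is a hypothetical callable: it takes a JSON string, performs the
    HTTP POST against the Model Deployment endpoint, and returns the
    response body as a JSON string.
    """
    results = []
    for payload in payloads:
        body = send(json.dumps(payload))
        results.append(json.loads(body))
        # Optional pause to space out the calls (no pause by default).
        time.sleep(pause_seconds)
    return results


def fake_send(body: str) -> str:
    """Stand-in for the real endpoint: reports how many features it received."""
    features = json.loads(body)["features"]
    return json.dumps({"prediction": len(features)})


if __name__ == "__main__":
    sample = [{"features": [1, 2, 3]}, {"features": [4, 5]}]
    print(invoke_many(fake_send, sample))
```

Every call made this way produces an invocation that the Model Deployment can log, which is what the rest of the article collects and analyzes.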

Autonomous Data Warehouse

Create a new Autonomous Data Warehouse if you do not have one yet. You can follow these steps to create a new Autonomous Data Warehouse instance.

Access your Autonomous Data Warehouse instance and click Database Actions.

ADW Database Actions

Log in with the admin user and the password you set when you created the database.

The Database Actions | Launchpad window is displayed.

Click SQL.

Open SQL Developer Web

Enter the following command to create a SODA collection named logs:

soda create logs;

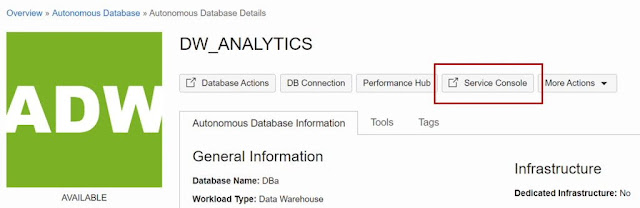

Go back to the Autonomous Data Warehouse detail page and click Service Console.

Open Service Console

In the new window, click Development, then click Copy under RESTful Services and SODA, and save the copied URL for later.

Copy RESTful Services and SODA
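
The URL you just copied becomes the base of the SODA bulk-insert endpoint that the function later in this article calls. The sketch below shows how that endpoint is assembled, following the same concatenation used in func.py. The helper name `soda_bulk_insert_url` and the example host are hypothetical, and the trailing-slash normalization is an addition of this sketch rather than something func.py does.

```python
def soda_bulk_insert_url(ordsbaseurl: str, dbschema: str, collection: str) -> str:
    """Build the SODA bulk-insert URL for a collection.

    Assumes `ordsbaseurl` is the RESTful Services URL copied from the
    Service Console. A trailing slash is added if it is missing.
    """
    base = ordsbaseurl if ordsbaseurl.endswith("/") else ordsbaseurl + "/"
    soda_url = base + dbschema + "/soda/latest/"
    return soda_url + "custom-actions/insert/" + collection + "/"


if __name__ == "__main__":
    # Hypothetical host, for illustration only.
    print(soda_bulk_insert_url("https://example.invalid/ords/", "admin", "logs"))
```

Checking the resulting URL by eye before configuring the function is a cheap way to catch a missing slash or a wrong schema name.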

Go back to the Autonomous Data Warehouse detail page and click DB Connection.

Click Download Wallet, enter a password and then click Download.

Click Close.

Functions

You need to create a Function that will be responsible for copying the logs from OCI Logging to the Autonomous Data Warehouse.

After creating the Function Application, click Getting started and follow the Cloud Shell Setup tutorial to create your function up to step 7.

Function Cloud Shell Setup

For steps 8 and 9 run the following commands:

fn init --runtime python send-logs

cd send-logs

This creates a new folder called send-logs containing three files: func.py, func.yaml and requirements.txt. Replace the content of the func.py file with:

import io
import json
import logging

import requests
from fdk import response


# soda_insert uses the Autonomous Database REST API to insert JSON documents
def soda_insert(ordsbaseurl, dbschema, dbuser, dbpwd, collection, logentries):
    auth = (dbuser, dbpwd)
    sodaurl = ordsbaseurl + dbschema + '/soda/latest/'
    bulkinserturl = sodaurl + 'custom-actions/insert/' + collection + "/"
    headers = {'Content-Type': 'application/json'}
    resp = requests.post(bulkinserturl, auth=auth, headers=headers, data=json.dumps(logentries))
    return resp.json()


def handler(ctx, data: io.BytesIO = None):
    logger = logging.getLogger()
    logger.info("function start")

    # Retrieving the Function configuration values
    try:
        cfg = dict(ctx.Config())
        ordsbaseurl = cfg["ordsbaseurl"]
        dbschema = cfg["dbschema"]
        dbuser = cfg["dbuser"]
        dbpwd = cfg["dbpwd"]
        collection = cfg["collection"]
    except Exception:
        logger.error('Missing configuration keys: ordsbaseurl, dbschema, dbuser, dbpwd and collection')
        raise

    # Retrieving the log entries from Service Connector Hub as part of the Function payload
    try:
        logentries = json.loads(data.getvalue())
        if not isinstance(logentries, list):
            raise ValueError
    except Exception:
        logger.error('Invalid payload')
        raise

    # The log entries are a list of dictionaries. We can iterate over the list of entries and process them.
    # For example, we put the Id of each log entry in the function execution log
    logger.info("Processing the following LogIds:")
    for logentry in logentries:
        logger.info(logentry["oracle"]["logid"])

    # Now, we insert the log entries into the JSON Database
    resp = soda_insert(ordsbaseurl, dbschema, dbuser, dbpwd, collection, logentries)
    logger.info(resp)
    if "items" in resp:
        logger.info("Logs are successfully inserted")
        logger.info(json.dumps(resp))
    else:
        raise Exception("Error while inserting logs into the database: " + json.dumps(resp))

    # The function is done; we return an empty response because the caller does not use it
    logger.info("function end")
    return response.Response(
        ctx,
        response_data="",
        headers={"Content-Type": "application/json"}
    )
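
To see what the payload handling in the handler expects, it can help to run that step on its own. The sketch below repeats the validation from func.py on a hand-made payload; `extract_log_ids` and the sample entries (including their OCID-like ids) are hypothetical and only illustrate the shape the handler relies on, namely a JSON list whose items carry `oracle.logid`.

```python
import io
import json
from typing import List


def extract_log_ids(data: io.BytesIO) -> List[str]:
    """Parse a function payload and return the log id of every entry.

    Raises ValueError when the payload is not a JSON list, mirroring the
    check performed in the handler.
    """
    logentries = json.loads(data.getvalue())
    if not isinstance(logentries, list):
        raise ValueError("payload must be a JSON list of log entries")
    return [entry["oracle"]["logid"] for entry in logentries]


# Hypothetical payload, made up for illustration.
sample = io.BytesIO(json.dumps([
    {"oracle": {"logid": "ocid1.log.oc1..example1"}, "data": {"message": "first"}},
    {"oracle": {"logid": "ocid1.log.oc1..example2"}, "data": {"message": "second"}},
]).encode("utf-8"))

if __name__ == "__main__":
    print(extract_log_ids(sample))
```

A payload that is a single JSON object instead of a list is rejected, which is the case the handler reports as "Invalid payload".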

You also need to update the requirements.txt file to include the requests library. The content of the requirements.txt file should be:

fdk>=0.1.40

requests

Once the steps above are complete, run step 10.

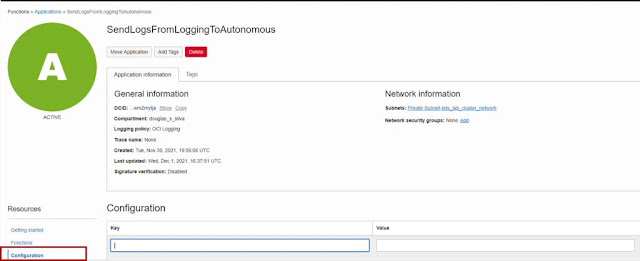

After completing the steps above, click Configuration and set your variables.

Functions Parameters Configuration

Input your values for each variable listed:

◉ ordsbaseurl - your RESTful URL you copied previously

◉ dbschema - your autonomous admin schema

◉ dbuser - your autonomous admin user

◉ dbpwd - your autonomous admin password

◉ collection - your collection called logs

After entering the values, you should see a screen similar to the one below:

Filled Functions Configuration
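
The handler fails with a generic message if any of the five configuration keys listed above is absent. As a small sketch of a friendlier check, the helper below reports exactly which keys are missing or empty. `missing_config_keys` is a hypothetical helper, not part of func.py, and the values in the example are placeholders.

```python
from typing import List, Mapping

# The configuration keys that func.py reads from the function context.
REQUIRED_KEYS = ("ordsbaseurl", "dbschema", "dbuser", "dbpwd", "collection")


def missing_config_keys(cfg: Mapping[str, str]) -> List[str]:
    """Return the required keys that are absent or set to an empty value."""
    return [key for key in REQUIRED_KEYS if not cfg.get(key)]


if __name__ == "__main__":
    # Placeholder values, for illustration only.
    partial = {"ordsbaseurl": "https://example.invalid/ords/", "collection": "logs"}
    print(missing_config_keys(partial))
```

An empty list means every key the function needs has a value.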

Service Connector Hub

You need to create a Service Connector Hub. Open the navigation menu and click Analytics & AI. Under Messaging, click Service Connector Hub.

Choose the Compartment where you want to create the service connector.

Click Create Service Connector.

Type a Connector Name such as "Send Logs to My Autonomous Database." Avoid entering confidential information.

Select the Resource Compartment where you want to store the new service connector.

Under Configure Service Connector, select your source and target services to move log data to a function:

- Source: Logging

- Target: Functions

Under Configure source connection, select a Compartment Name, Log Group, and Log.

Under Configure target connection, select the Function Application and Function corresponding to the function you created.

Click Create.

Example of a filled Service Connector Hub form

Once everything is set up, you can check whether the logs are being copied by looking at the metrics on the Service Connector detail page.

Service Connector Hub Metrics

Oracle Analytics Cloud

As the logs are being copied to the Autonomous Data Warehouse, you can create analytics dashboards to understand the Model Deployment's behavior. In this case you will analyze the prediction logs and the dates they were generated.
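
Before building the dashboard, it can be useful to picture the kind of aggregation it performs. The sketch below counts log entries per calendar day. It assumes each entry carries an ISO 8601 timestamp in a `time` field; that field name, the helper `count_entries_per_day` and the sample entries are assumptions made for illustration, so adjust them to the documents actually stored in your logs collection.

```python
from collections import Counter
from datetime import datetime
from typing import Dict, Iterable


def count_entries_per_day(logentries: Iterable[dict]) -> Dict[str, int]:
    """Count log entries per day, keyed by date in YYYY-MM-DD form."""
    counts = Counter()
    for entry in logentries:
        # Older Python versions do not parse a trailing "Z", so it is
        # replaced with an explicit UTC offset first.
        timestamp = datetime.fromisoformat(entry["time"].replace("Z", "+00:00"))
        counts[timestamp.date().isoformat()] += 1
    return dict(sorted(counts.items()))


if __name__ == "__main__":
    # Hypothetical entries, made up for illustration.
    sample = [
        {"time": "2022-03-01T10:15:00.000Z"},
        {"time": "2022-03-01T18:40:12.000Z"},
        {"time": "2022-03-02T09:05:30.000Z"},
    ]
    print(count_entries_per_day(sample))
```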

In the OCI Home window, open the navigation menu and click Analytics & AI. Under Analytics, click Analytics Cloud.

Choose the Compartment where you want to create the Analytics Cloud.

If you already have an instance, click the instance name. If not, click Create Instance, fill in your information and click Create.

Access your instance by clicking the instance name, then click Analytics Home Page.

On the Home Page, click the three-dot button in the top right corner. Click Import Workbook/Flow.

Three dotted action menu button

Click Select File, then select the Deeper Model Deployment Logs Analysis.dva file.

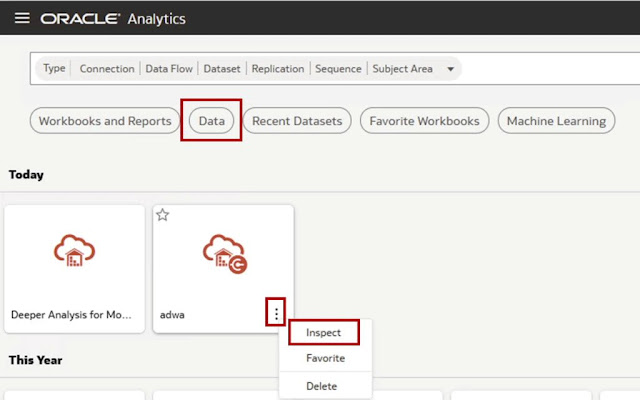

In the top menu, click Data, then hover over the adwa connection and click the three-dot button. Click Inspect.

Inspect Connection

Enter your Autonomous Data Warehouse connection details and upload the wallet you downloaded previously. Click Save.

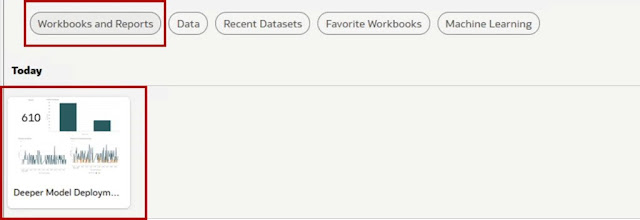

In the top menu, click Data, then click the Workbook to open it.

Open the workbook

You should see a dashboard like the one below with the predictions analysis.

OAC Final Dashboard

Source: oracle.com
