Moving the workloads of your on-premises database to an Oracle Cloud gives you a competitive advantage today. Databases in Oracle Cloud leverage Oracle's Generation 2 infrastructure, providing maximum performance, better pricing, and the most secure environment for your enterprise workloads. OCI also provides the Oracle Autonomous Database, the industry’s first and only self-driving, self-securing, and self-repairing database.

Oracle continues to extend the capabilities of our industry-leading Enterprise Manager offering to support our customers' journey to cloud. We are excited to introduce you to the Database Migration Workbench, which will help you migrate on-premises databases to Oracle Autonomous Database. This blog will guide you through the various steps of the migration process.

Overview of Database Migration Workbench

Webinar: Automating Database Migration to Oracle Cloud, including Autonomous Database, Eliminates Operational Risk

Register: December 10, 2020 11:00 a.m. PT/2:00 p.m. ET

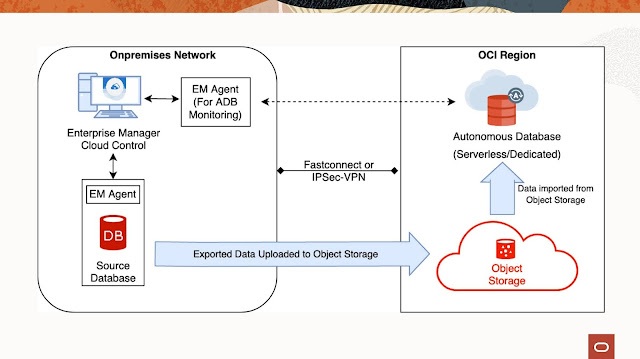

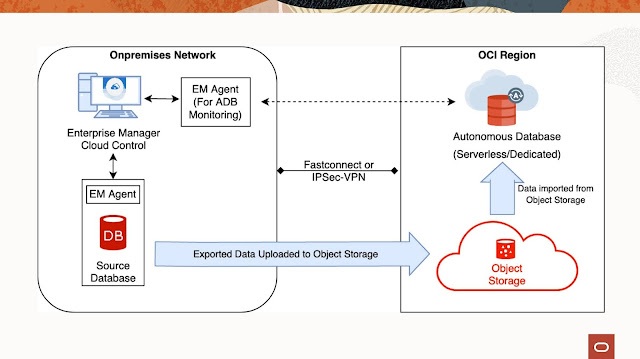

Database Migration Workbench simplifies the migration of an on-premises database to an Autonomous Database with a unified guided flow. Figure 1 (below) shows an overview of the Database Migration Workbench. It uses Data Pump to migrate data to an Autonomous Database. A DBA can use Data Pump regardless of the endian format and character set of the on-premises database. The source database can be a Single-Instance Database (SIDB), RAC Database (RACDB) or a Pluggable Database (PDB), and the destination database can be an ADW or ATP. As part of database migration, EM takes an export dump of source data files, uploads it to an object storage bucket, and imports it to an Autonomous Database.

Figure 1 - Overview of Database Migration Workbench

Prerequisites for Database Migration

1. Connectivity between the on-premises datacenter and OCI services should be present, which can be established by having a

FastConnect or an

IPSec VPN connection.

2. For a full database export or exporting any schema other than the user schema, create an on-premises database user having privileges to export data, i.e., GRANT DATAPUMP_EXP_FULL_DATABASE TO <user_name>. For example:

◉ Execute the following SQL if the source database is an SIDB or a RACDB:

CREATE USER MIGUSER IDENTIFIED BY MPass_123;

GRANT CREATE SESSION TO MIGUSER;

GRANT CONNECT TO MIGUSER;

GRANT SELECT ANY TABLE TO MIGUSER;

GRANT DATAPUMP_EXP_FULL_DATABASE TO MIGUSER;

ALTER USER MIGUSER QUOTA UNLIMITED ON USERS;

◉ Execute the following SQL if the source database is a PDB:

ALTER SESSION SET CONTAINER=SALESPDB;

CREATE USER MIGUSER IDENTIFIED BY MPass_123 CONTAINER=current;

GRANT CREATE SESSION TO MIGUSER;

GRANT CONNECT TO MIGUSER;

GRANT SELECT ANY TABLE TO MIGUSER;

GRANT DATAPUMP_EXP_FULL_DATABASE TO MIGUSER;

ALTER USER MIGUSER QUOTA UNLIMITED ON USERS;

3. Create a directory object, which should have enough disk space to accommodate the data to be migrated.

CREATE OR REPLACE DIRECTORY MIG_DMP_DIR AS '/u01/mig_data';

GRANT READ, WRITE ON DIRECTORY MIG_DMP_DIR TO MIGUSER;

4. Create an "Auth Token" based credential in the Autonomous Database and set it as a default credential that will be required for authentication between the Autonomous Database and OCI object storage. Follow the below steps:

b. Log in to your Autonomous Database and create a credential. Following is an example of SQL for the same:

◉ For Autonomous Database - Dedicated

SET DEFINE OFF

BEGIN DBMS_CREDENTIAL.CREATE_CREDENTIAL(

credential_name => 'ADB_CRED',

username => 'jhon_doe',

password => 'auth_token');

END;

/

◉ For Autonomous Database - Serverless

SET DEFINE OFF

BEGIN DBMS_CLOUD.CREATE_CREDENTIAL(

credential_name => 'ADB_CRED',

username => 'jhon_doe',

password => 'auth_token');

END;

/

5. Create new or identify an existing object storage bucket in the same region of your OCI tenancy where the Autonomous Database is residing.

a. The "Storage" tier setting for the bucket must be Standard.

b. The "Visibility" setting for the bucket must be Private.

6. If not already discovered, discover both the source and destination databases in Enterprise Manager.

7. cURL should be available on the host from where the export dumps will be uploaded to Object Storage.

Creating a Data Migration activity

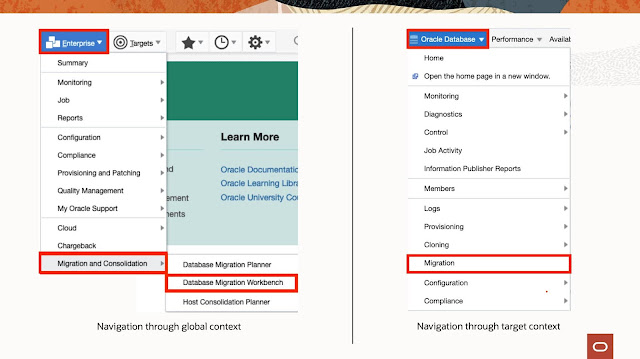

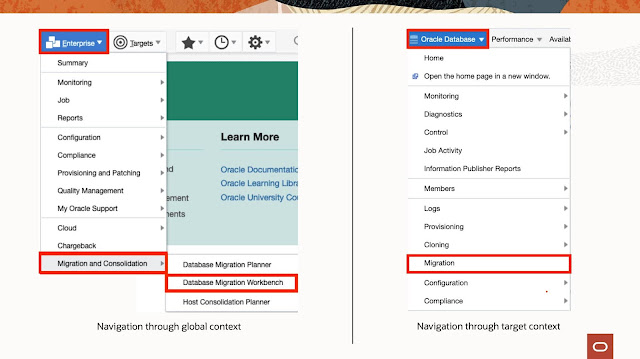

Navigate to the Database Migration Workbench to create migration activity. You can traverse either via a global context or via target context.

Launch Database Migration Workbench from a global context

Navigate to Enterprise > Migration and Consolidation > Database Migration Workbench

Launch Database Migration Workbench from target context

Goto to the target home page (target can be an SIDB, RACDB, PDB, or an Autonomous Database). Navigate to Oracle Database > Migration

Figure 2 - Navigating to Database Migration Workbench

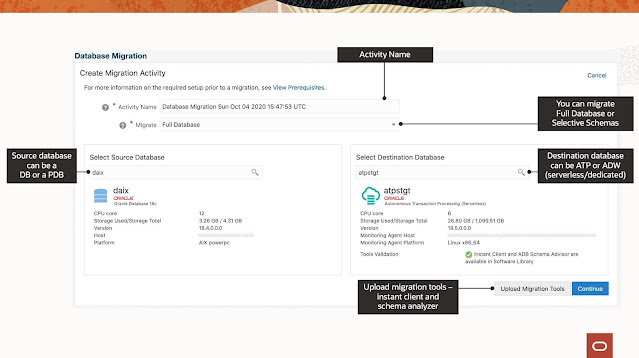

From the Database Migration Workbench dashboard, click on the button "Create Migration Activity" (as shown in figure 3).

Figure 3 - Database Migration Workbench Dashboard

Source, Destination, and Object Storage

Selecting a source and destination database

You can either perform a full fatabase migration or migrate specific schemas. Select the source database and destination database. The source database can be a SIDB, RACDB or a PDB. The destination database can be ATP or ADW.

Figure 4a - Select source and destination for migration

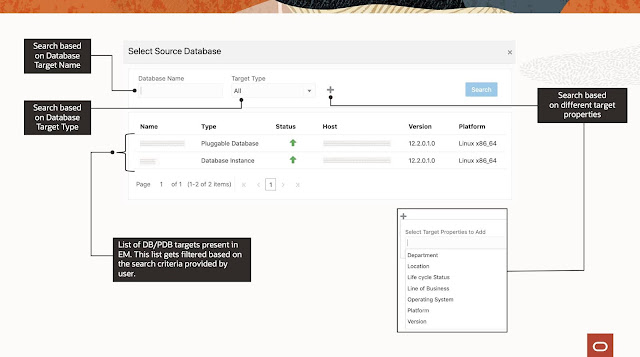

Selecting targets

Source and destination database targets can be selected by clicking the magnifying glass icon in the Select Source/Destination Database pane. Figure 4b (below) shows the target selector for the source database.

Figure 4b - Select source database

Upload Migration Tools

Database Migration Workbench makes use of the tools Oracle Instant Client and Autonomous Database Schema Analyzer. The latest version of these tools is downloaded automatically via job "Migration Prerequisite Download Job" and saved in Software Library. If these utilities are not available from the Software Library for your operating system, you will need to download them manually. Manual downloads are usually required when the latest version of the Migration Tools is not compatible with your current operating system version. The following binaries are needed to be uploaded:

1. Instant Client Files

Instant client basic zip package

Instant client SQL*Plus zip package

Instant client tools zip package

2. Autonomous Database Schema Advisor file

**Enterprise Manager automatically downloads the tools when it has a direct internet connection or has MOS proxy setup.

Adding Source, Destination, and Object Storage details

Figure 5 - Adding source and destination details

Source

1. Database Credentials - Select a named credential of an on-premises database user with Data Pump export privileges. This region gets auto-populated if the preferred credential is set.

◉ To create database credentials, navigate to Setup > Security > Named Credentials and click on create. Enter credential name, select authenticating target type as "Database Instance", credential type as "Database Credentials", enter username, password, role and click on save.

◉ Database Credential can also be created via EMCLI as follows:

emcli create_named_credential \

-auth_target_type=oracle_database \

-cred_type=DBCreds \

-cred_scope=Global \

-cred_name=SRC_DB_CREDS \

-attributes="DBUserName:db_user;DBPassword:Db_password;DBRole:Normal"

2. Host Credentials - Select a named credential for database host user. This region gets auto-populated if the preferred credential is set.

◉ To create host credentials, navigate to Setup > Security > Named Credentials and click on create. Enter credential name, select authenticating target type as "Host", credential type as "Host Credentials", enter the username, password, and click on save.

◉ Host Credential can also be created via EMCLI as follows:

emcli create_named_credential \

-auth_target_type=host \

-cred_type=HostCreds \

-cred_scope=Global \

-cred_name=SRC_HOST_CREDS \

-attributes="HostUserName:host_user;HostPassword:Host_password"

Destination

1. Database Credentials - Select a named credential of Autonomous Database user with Data Pump import privileges. This region gets auto-populated if the preferred credential is set.

◉ To create database credentials, navigate to Setup > Security > Named Credentials and click on create. Enter the credential name, select authenticating target type as "Autonomous Transaction Processing" or "Autonomous Data Warehouse", credential type as "Database Credentials", enter username, password, role and click on save.

◉ Database credential can also be created via EMCLI as follows:

emcli create_named_credential \

-auth_target_type=oracle_cloud_atp \

-cred_type=DBCreds \

-cred_scope=Global \

-cred_name=DEST_ADB_CREDS \

-attributes="DBUserName:adb_user;DBPassword:Adb_pass123;DBRole:Normal"

**Provide auth_target_type=oracle_cloud_adw if destination is Autonomous Data Warehouse

2. Agent Host Credentials - Select a named credential for destination ADB's monitoring agent user. This region gets auto-populated if the preferred credential is set.

◉ To create host credentials, navigate to Setup > Security > Named Credentials and click on create. Enter credential name, select authenticating target type as "Host", credential type as "Host Credentials", enter the username, password, and click on save.

◉ Host Credential can also be created via EMCLI as follows:

emcli create_named_credential \

-auth_target_type=host \

-cred_type=HostCreds \

-cred_scope=Global \

-cred_name=DEST_HOST_CREDS \

-attributes="HostUserName:host_user;HostPassword:Host_password"

3. Service Name - Select the Autonomous Database service name, which will be used for migration. It is recommended to use the service name "high" for data migration.

Action

◉ Migration Method - Migration Method for On-premises to Autonomous Database migration will always be "Data Pump".

◉ Recompile invalid objects after migration - On selecting this checkbox, all invalid objects from the migrated schema will be recompiled.

◉ Compare performance after migration - On selecting this checkbox, SQL Performance Analyzer will be used to compare performance before and after migration. You can see a detailed SQL Performance Analyzer report once the migration activity is complete.

◉ Source Data Pump Directory - Select a directory object of the source database, on which the selected database user is having read and write privileges. If the source database is a RAC target, then the Data Pump directory must be shared across all RAC nodes. Data Pump directory cannot be an ASM based location.

◉ Encryption Password - Provide encryption password for export dumps. The export dumps generated from the source database are always encrypted.

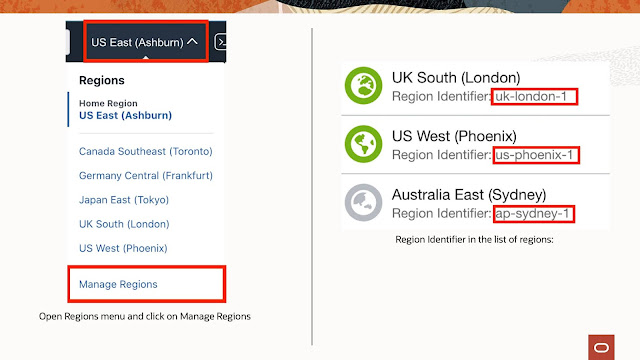

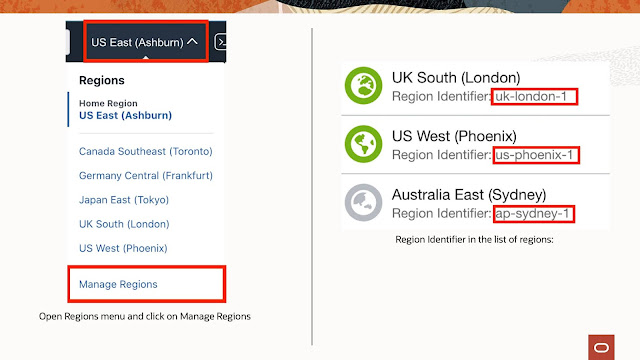

◉ Cloud Storage URL - Provide object storage URL for the region where your Autonomous Database exists. Cloud Storage URL is of the following format:

objectstorage.[Region Identifier].oraclecloud.com

You can get the value of region identifier from the OCI console. Open the "Regions" menu and click on "Manage Regions". You will find the "Region Identifier" in the list of regions.

Figure 6 - Finding region identifier from OCI console

◉ Bucket Name - Enter Object storage bucket name (this bucket should reside in the same region where destination ADB is residing).

◉ OCI Credential - Select a named credential for the Autonomous Database of type Oracle Cloud Infrastructure.

◉ To create OCI credentials, navigate to Setup > Security > Named Credentials and click on create. Enter credential name, select authenticating target type as "Autonomous Transaction Processing" or "Autonomous Data Warehouse", credential type as "Oracle Cloud Infrastructure Credential", enter Tenancy OCID, User OCID, Private Key, Fingerprint and Passphrase.

◉ Database Credential can also be created via EMCLI as follows:

emcli create_named_credential \

-cred_name=OCI_CRED \

-auth_target_type="oracle_cloud_atp" \

-cred_type="OCICredential" \

-cred_scope=Global \

-target_type="Autonomous Transaction Processing" \

-attributes="TenancyOCID#<OCI Tenancy OCID>;UserOCID#<OCI User OCID>;PrivateKey#<Private_key>;FingerPrint#<Fingerprint>;PassPhrase#<Pass_phrase>" \

-subseparator=attributes="#"

**Provide -auth_target_type=oracle_cloud_adw if destination is Autonomous Data Warehouse

**Provide -target_type="Autonomous Data Warehouse" if destination is Autonomous Data Warehouse

**Steps to setup SSH keys can be found here

**The new lines of <Private_key> should be delimited as \r\n while using in emcli

◉ Database OCI Auth Credential - Select the Auth Token based credential created in Autonomous Database.

Named credentials may be scoped as target-specific or global. All the examples above show named credential creation with global scope. More details of named credential creation can be found here.

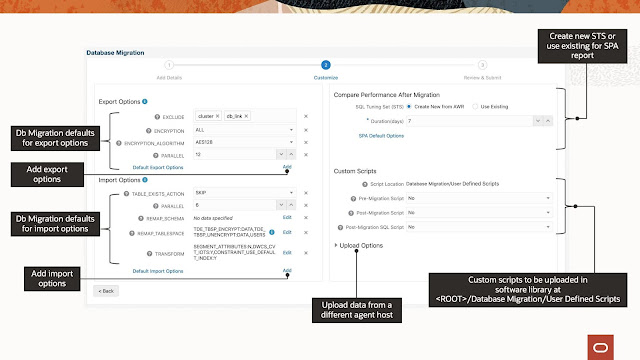

Customizing the Activity

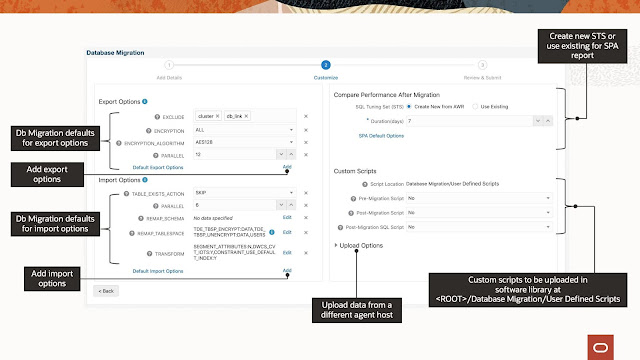

Figure 7 - Customizing export/import options, STS, and pre/post custom scripts

Export and Import Options - You can provide the applicable parameters for export and import command, which will be used during migration. The defaults are pre-populated with values that are required for migration to Autonomous Database.

There are a few options that get added by Database Migration Workbench implicitly, as shown in Figure 8 below.

Figure 8 - Implicitly added options for export and import

Compare Performance After Migration - SQL Performance Analyzer will be used to compare performance before and after migration. You can choose an existing SQL Tuning Set (STS), or Database Migration Workbench will create a new STS from AWR.

Pre/Post Script - Executes pre- or post- migration scripts stored in the software library. The scripts should be uploaded to the software library at <ROOT>/Database Migration/User Defined Scripts as a Directive or Generic Component. Following are the supported custom scripts:

Pre-Migration Script - SH script executed before migration on the on-premises database host.

Post-Migration Script- SH script executed after migration on the ADB monitoring agent's host.

Post-Migration SQL Script - SQL executed after migration on ADB.

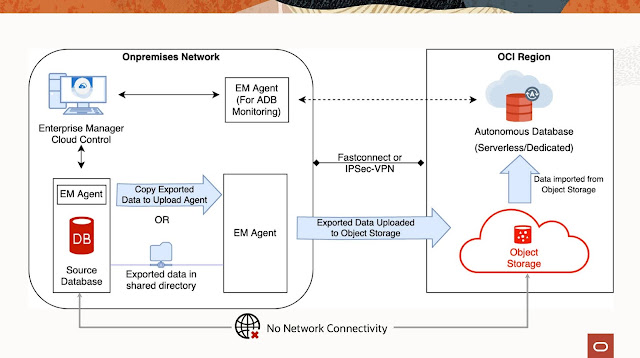

Upload Options - Database Migration Workbench uses system cURL to upload exported dumps to Object Storage. By default, the source database host is used to upload to Object Storage. But a user can opt to select a different agent host from which connectivity to object storage will be made for uploading export dumps. If the dump location is not shared with the upload agent host, then the dump files are copied to a staging directory.

Figure 9a - Flow of data from an on-premises database to Oracle Autonomous Database when data is uploaded from the source host

Figure 9b - Flow of data from an on-premises database to Oracle Autonomous Database when data is uploaded from a different host

Figure 10 - Inputs for uploading data from different host

Analyze Source Data

Based on the provided inputs, Analyze Source will provide a report describing the on-premises database objects which will not get successfully migrated to Autonomous Database. Analyze schema makes use of the schema analyzer tool, which has been uploaded in the first step. Click on "Analyze Source" on Review & Submit page. You have to either select the named credential of a user where schema advisor is installed or credential with sysdba privileges. If a sysdba credential is selected, then a temporary schema advisor user is created, which will be deleted post advisor report generation.

Figure 11 - Report generated by Analyze Source

Validate Inputs Before Submission

On the Review & Submit page, click on the "Validate" button to verify all the inputs concerning the source, destination, object storage, and export/import options provided in the activity. Validation is not a mandatory step, but it is advised to validate the inputs before submitting the activity. Figure 12 shows a list of validations that are executed as part of this step.

Figure 12 - Validations

Post Migration Activities

Once the migration activity is complete, you can Compare Performance, View Analysis of the Activity, View detailed logs of the activity, or cleanup dumps from source and object storage. You can perform all these operations by clicking on the Action Menu of an activity.

Figure 13 - Various options of Actions Menu

Cleanup - Deletes export dumps from the source location and object storage.

View Details - Redirects to the deployment activity page where you will get step by step progress details and logs for the corresponding migration activity.

View Analysis - This page analyzes validations, export, and import operation executed by migration activity. It will summarize the job steps and then deep dives into details of individual Object errors.

Figure 14 - Analyzing a Migration Activity

Compare Performance - Shows the SQL Performance Analyzer report of the migration activity.

Marking an activity as "Complete" - Importing data to an Autonomous Database might result in a few import errors. In such situations, the migration activity ends with a status as "Completed with errors". Users can analyze the migration (using View Analysis), and if the errors reported during import are ignorable, the user can choose to Mark the Activity as complete.

Figure 15 - Marking an activity as complete

0 comments:

Post a Comment