Data Transforms is a built-in data integration tool for Autonomous Database users. Accessible from Database Actions, it is a simple-to-use, drag-and-drop, no-code tool to load and transform data from heterogenous sources to the Autonomous Database. It can be used for all data integration needs such as building data warehouses and feeding analytics applications.

Data Transforms is based on Oracle Data Integrator and uses the same Extract-Load-Transform (ELT) methodology that has been proven as a mature and high-performance data integration architecture in thousands of customers both on premises and on Oracle Cloud Infrastructure.

In this blog post I will show you how to transform your data with little effort using this tool.

Invoking Data Transforms

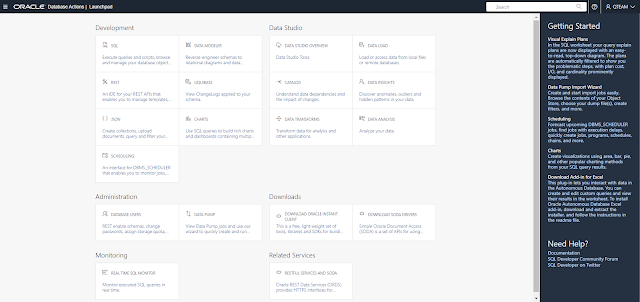

Log into the Autonomous Database and navigate to Database Actions page. The Data Transforms card is under Data Studio section.

This tool is always visible for the ADMIN user. Other users require the following user roles to be enabled.

DWROLE

DATA_TRANSFORMS_USER

Note: Make sure to check both Granted and Default boxes, as shown below.

Now log in as this database user and navigate to Database Actions page.

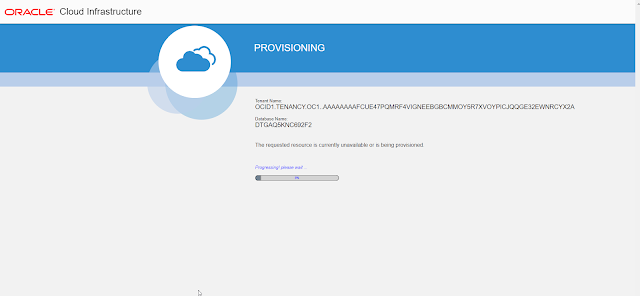

After you click on the Data Transforms card, you will need to re-enter the database username and password.

It takes around 1-2 minutes for the service to come up. It takes a little longer for the first time and subsequent startup times are shorter.

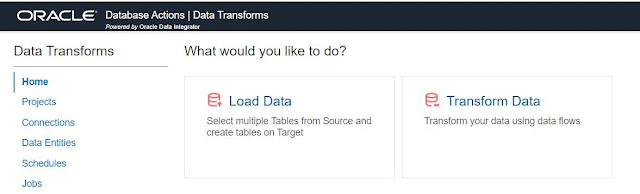

Once deployed, the home screen looks like this.

Data Transforms is an Extract-Load-Transform (E-L-T) tool. You can use it for

◉ Extract and Load (E-L) - with the “Load Data” wizard.

◉ Extract, Load and Transform (E-L-T) or simply Transform (T) - with the “Transform Data” wizard.

This blog post focuses on the data transformation use case. The data load use case without transformation is described in this blog post.

Creating connections

Data Transforms supports connections to a wide variety of sources. To load data from any of these sources, we first need to create a connection.

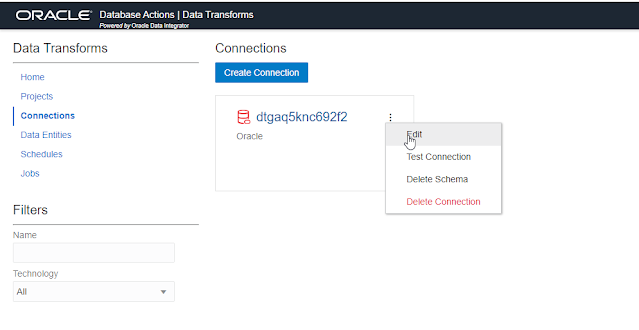

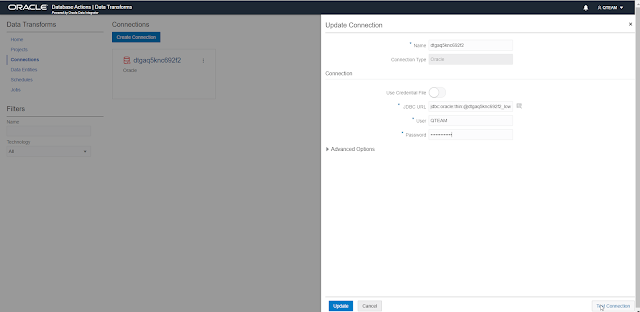

From the left side menu, click on “Connections”. It will show any existing connections, and you can create new connections.

For the first-time user, there is already a template connection to your Autonomous Database. Complete the connection details by editing the connection to provide username and password.

Test the connection and update it.

You can also create new connections to load data from other sources. Note that new connections are categorized in Databases, Applications and Services. Select the appropriate connection type in the category, and then provide connection parameters (such as username and password). Connection parameters are specific to the connection type. Test it for successful connection and save it.

In this blog post we will use the connection to your Autonomous Database and transform data which is already there.

Transforming data

To transform data, you need to create Data Flow jobs. The “Transform Data” wizard makes this task easier and is covered in this blog post. Advanced users can manually create a project and create data flows in the project.

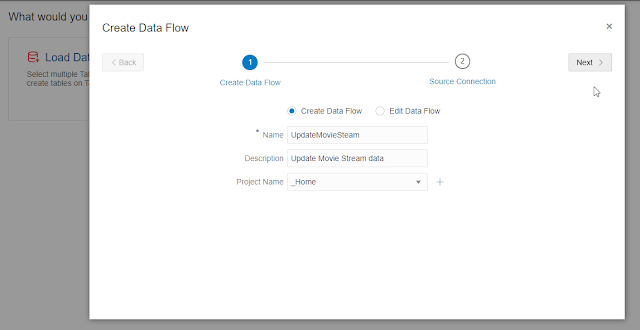

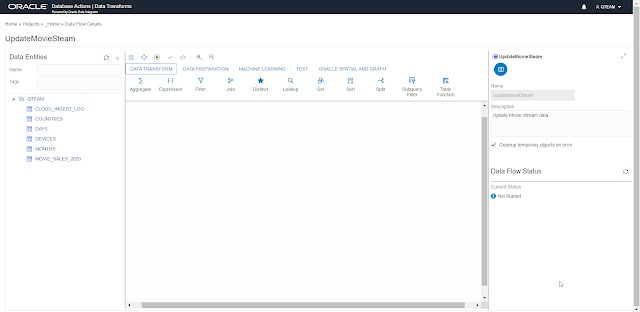

From the home screen, click on the “Transform Data” button. Provide a name and description for the data flow. You can create a new project if you don’t have one already. A project is a collection of integration jobs for better manageability. Click “Next”.

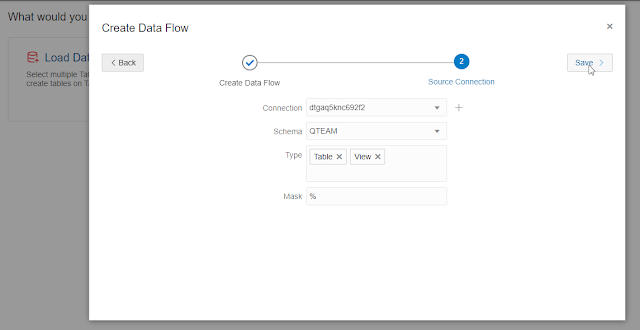

Select the connection to your Autonomous Database and schema (database user). For simplicity, we will use % for the mask that will retrieve all the table definitions from the schema. You can optionally use the mask to restrict tables of interest. Click “Save”.

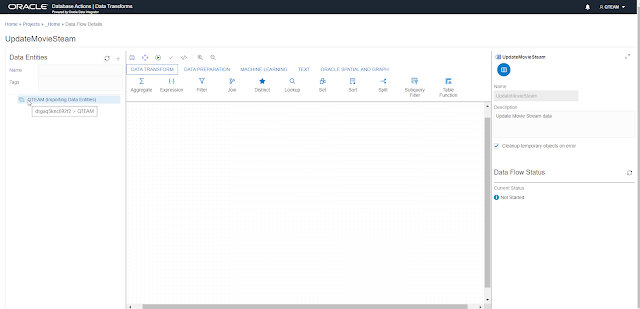

This will take you to the Data Flow editor. On the left side you will see the tables in your Autonomous Database. First you will see the status of “Importing Data Entities” against your schema name. Data Transforms is importing the table definitions in the background. This may take a few minutes.

Soon, the left side of the data flow editor will show all the tables. You can also search for specific tables if the list is long.

Now, you can use the editor to define any sequence of transforms to define your data flow. The transforms tools are available on the top of the page under various categories. You will simply drag the appropriate tool to the canvas, configure properties and link transformations in a flow.

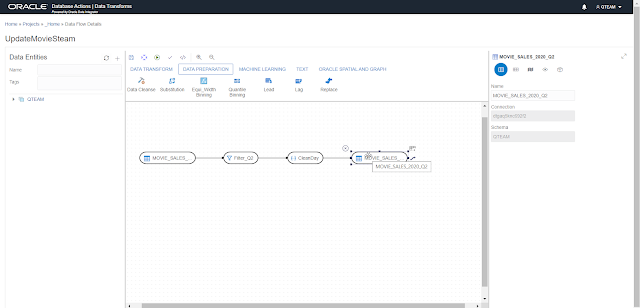

In the data flow shown below, the following transformations are defined:

1. MOVIE_SALES_2020 table is used as a source

2. Rows filtered for 2nd quarter

3. DAY column is cleansed to convert to title case

4. Load results into a new table called MOVIE_SALES_2020_Q2.

Configure the data flow to load the target table in various modes, such as:

◉ Drop/create and load

◉ Truncate and load

◉ Append

◉ Incremental load

This blog post does not go into details of how to build a data flow job.

Save and execute the data flow from this editor itself to load your target table with the transformed data immediately. Alternatively, create a schedule to run this job at a specific time and frequency.

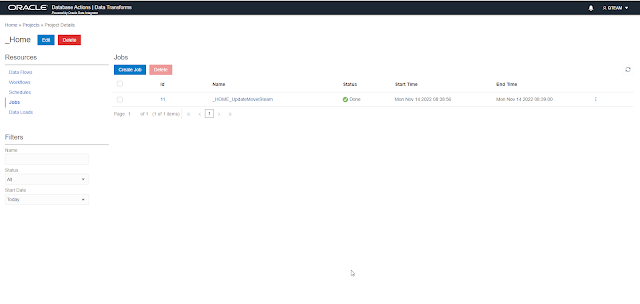

Advanced users can also combine data flows to create workflows that can be executed or scheduled. All these job execution details are logged and available under the Jobs menu. The following screenshot shows the job for the data flow executed earlier.

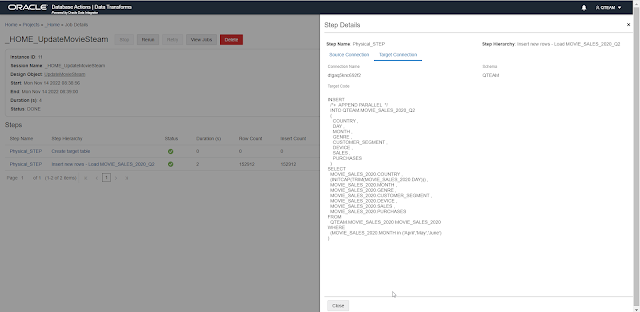

Click on the job to get execution details for each step. These include rows processed as well as the executed SQL for debugging purposes.

Summary

Data Transforms is a versatile and heterogenous data integration tool built into the Autonomous Database. Data Transforms makes it easy to transform data already in your Autonomous Database. You can also load and transform data from other sources. There is no need to write SQL or use any external data integration tool for your data transformation needs.

Source: oracle.com

0 comments:

Post a Comment