One of the greatest benefits of cloud computing is the ability to pay for only what you use. With Oracle Autonomous Database, the system automatically scales resources up and down to meet the requirements of the workload, and you truly pay only for what you use. Many users of Exadata Cloud Service have asked how they can also automatically scale their system in a similar manner, sizing it continuously to meet demand.

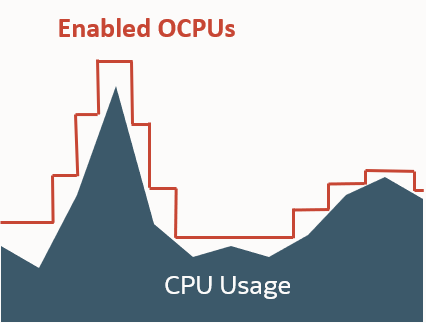

The first thing to understand is how Exadata Cloud Service charges for resources consumed by the system. The billing is based upon the number of cores allocated to the virtual machines in which the databases are running. When your workload increases you must scale up the number of cores allocated to the virtual machine, and when workload goes down you must scale down the cores allocated to the virtual machine. If you statically set the size of the VMs, you will most likely size them high to avoid having to frequently resize them as workload changes. Unfortunately, that also means you are probably paying for unnecessary capacity.

Exadata Cloud Service supports a rich set of APIs that allow you to scale your virtual machines up and down online, while the database is running, with no disruption to the database. You can do this using a variety of APIs, but perhaps the simplest is the OCI CLI, which connects to the control plane and scales the VM up or down to a specified number of OCPUs. So, to automatically scale your VMs, all you need to do is run a script that determines if a scale up or down operation is appropriate based on pre-defined criteria, and if those criteria are met, calls the appropriate API to perform the action.

The criteria for performing a scale up or down operation depend on a few parameters and algorithms that you must set:

◉ The resource you measure: Typically, you measure CPU utilization within the VM. Since you are scaling CPU, this is the logical resource to measure.

◉ Minimum resource threshold: A point at which you declare the resource is low. For example, you may decide your CPU utilization should not go below 40%.

◉ Maximum resource threshold: A point at which you declare the resource is high. For example, you may decide your CPU utilization should not go above 60%.

◉ The frequency of measurement: How often you take a measurement and evaluate it against your criteria.

◉ Interpretation of the measurement: This algorithm determines how a measurement will be interpreted and when your script will initiate a scaling operation.

◉ Delay for Scale Down Operations: To provide optimum service levels, it may be best not to scale down immediately when load drops. This determines how long you should wait before initiating a scale-down operation.

You can create a script using a scripting language of your choice. You can create a script to run in the VMs you want to autoscale, or you can orchestrate the process from another server. We recommend you set up the script to automatically start and run as a daemon in the background. The script should basically loop indefinitely, measuring the resource (CPU utilization) every period and evaluating the interpreted results of the measurements against the thresholds. If a scaling operation is determined to be required, the script should call the API to scale the VM by the desired amount.
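The loop described above might be sketched as follows. This is a minimal outline, not a finished daemon: `measure`, `decide`, and `scale` are hypothetical callables you would supply, and `max_iterations` exists only so the loop can be exercised in a test.

```python
import time
from typing import Callable, Optional


def autoscale_loop(
    measure: Callable[[], float],
    decide: Callable[[float], int],
    scale: Callable[[int], None],
    period_seconds: float,
    max_iterations: Optional[int] = None,
) -> None:
    """Measure, evaluate, and scale on a fixed period.

    measure() returns CPU utilization as a percentage.
    decide(utilization) returns the OCPU change to apply (0 means no action).
    scale(delta) performs the scaling call.
    max_iterations=None loops indefinitely, as a daemon would.
    """
    iteration = 0
    while max_iterations is None or iteration < max_iterations:
        utilization = measure()
        delta = decide(utilization)
        if delta != 0:
            scale(delta)
        time.sleep(period_seconds)
        iteration += 1
```

Passing the three steps in as functions keeps the loop itself trivial and lets you test each piece without touching a real VM cluster.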

Measuring CPU Utilization

The easiest way to measure CPU utilization is to use the mpstat Linux command, which is installed by default on Exadata guest images. The relevant syntax for mpstat is:

mpstat <interval> <count>

You can specify an <interval> for the measurement, and repeat the measurement <count> times. Be sure to specify an interval or mpstat will return the average CPU since boot. Choose an interval long enough to smooth out transient spikes in CPU (see ‘Interpretation of Measurements’ below). So, to calculate the utilization over a single 5-second interval, use the command:

mpstat 5 1

The command will display:

Linux 4.14.35-1902.304.6.4.el7uek.x86_64 (pmtest-ineif1) 11/30/2020 _x86_64_ (4 CPU)

05:47:40 PM CPU %usr %nice %sys %iowait %irq %soft %steal %guest %gnice %idle

05:47:45 PM all 2.81 0.27 3.45 0.05 0.00 0.05 0.16 0.00 0.00 93.21

Average: all 2.81 0.27 3.45 0.05 0.00 0.05 0.16 0.00 0.00 93.21

Use the last row (Average:) and its 12th field (%idle). Subtract that value from 100 to get the CPU utilization over that period. You can also see the size of the VM (in vCPUs) on the top line. Remember, the count (4 CPU in the above example) refers to the number of vCPUs. To get the number of physical cores allocated to that VM, divide by two.
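As an illustration, the parsing step could look like the sketch below. It assumes the mpstat output has the same column layout as the sample above (other sysstat versions may differ), and the function names are made up for this example.

```python
import re
import subprocess

SAMPLE = """Linux 4.14.35-1902.304.6.4.el7uek.x86_64 (pmtest-ineif1) 11/30/2020 _x86_64_ (4 CPU)

05:47:40 PM CPU %usr %nice %sys %iowait %irq %soft %steal %guest %gnice %idle
05:47:45 PM all 2.81 0.27 3.45 0.05 0.00 0.05 0.16 0.00 0.00 93.21
Average: all 2.81 0.27 3.45 0.05 0.00 0.05 0.16 0.00 0.00 93.21
"""


def cpu_utilization_from_mpstat(output: str) -> float:
    """Return 100 - %idle, taken from the 12th field of the Average row."""
    for line in output.splitlines():
        fields = line.split()
        if fields and fields[0] == "Average:":
            return 100.0 - float(fields[11])
    raise ValueError("no Average row found in mpstat output")


def ocpus_from_mpstat(output: str) -> int:
    """Return physical cores: the vCPU count on the top line divided by two."""
    match = re.search(r"\((\d+) CPU\)", output)
    if match is None:
        raise ValueError("no CPU count found in mpstat output")
    return int(match.group(1)) // 2


def run_mpstat(interval_seconds: int) -> str:
    """Run 'mpstat <interval> 1' on the local node and return its text output."""
    result = subprocess.run(
        ["mpstat", str(interval_seconds), "1"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout
```

For the sample output, `cpu_utilization_from_mpstat(SAMPLE)` gives roughly 6.79 percent and `ocpus_from_mpstat(SAMPLE)` gives 2.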

Keep in mind this provides the CPU utilization for a single node in the cluster. You should measure the CPU utilization across all nodes in the cluster, as any resizing action will affect all nodes.

Determining the Thresholds

Choose a minimum and maximum threshold for CPU utilization. Don’t set the minimum and maximum too close together, or the system will continually scale up and down as the resource bounces around the thresholds. These thresholds create a target window for CPU utilization, and ideally, the VM CPU utilization should remain within that window. When choosing the thresholds, be sure to take into account demand spikes you want to be able to handle immediately. It will take up to 5 minutes to scale a system up, so your target window should be able to accommodate any transient spikes. Also, think about what happens should a server go down. You should ensure there are adequate resources available to process workload until autoscaling can react.
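The target window can be expressed as a small comparison function. The sketch below uses the 40% and 60% example thresholds from earlier as defaults; the function name and the return convention (+1, 0, -1) are assumptions made for illustration.

```python
def scaling_direction(utilization: float, low: float = 40.0, high: float = 60.0) -> int:
    """Compare one CPU utilization reading against the target window.

    Returns +1 when utilization is above the window (scale up),
    -1 when it is below the window (scale down), and 0 when it is inside.
    """
    if low >= high:
        raise ValueError("minimum threshold must be below maximum threshold")
    if utilization > high:
        return 1
    if utilization < low:
        return -1
    return 0
```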

Determining Measurement Frequency

The frequency of measurement will affect the overall response time for scaling events to be initiated. The longer the period between measurements, the longer it will take to react to resources being out of the target range. If your workload will not change quickly, you do not need to take measurements very frequently. On the other hand, if it can quickly spike, you will need to take measurements more frequently. The script ought to be very low overhead, so it is alright to take a measurement every few seconds.

Interpretation of Measurements

You typically will not want to react to a single measurement that is out of bounds. There could be transient effects on the workload that are best ignored. So, as an example, you may want to only react if 2 or 3 consecutive measurements, or two measurements over a certain period, or even an average over a period, are deemed out of the target range. There is no rule of thumb here, as you really need to understand your workload and the types of transient behaviors that may exist.
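One of the options mentioned above, reacting only after several consecutive out-of-range measurements, could be sketched like this. The class name and the +1/0/-1 direction convention are hypothetical, and the right count depends on your workload.

```python
from collections import deque


class ConsecutiveTrigger:
    """Fire only after `required` consecutive readings in the same direction."""

    def __init__(self, required: int) -> None:
        if required < 1:
            raise ValueError("required must be at least 1")
        self.required = required
        self.recent = deque(maxlen=required)

    def observe(self, direction: int) -> int:
        """Record one reading (+1 high, -1 low, 0 in range).

        Returns the direction to act on, or 0 if no action is due yet.
        """
        self.recent.append(direction)
        window_full = len(self.recent) == self.required
        if window_full and direction != 0 and all(d == direction for d in self.recent):
            self.recent.clear()  # start counting afresh after acting
            return direction
        return 0
```

With `required=3`, a single in-range reading in the middle of a run of high readings resets the count, so a transient spike does not trigger a scale-up.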

Delay for Scale Down

The frequency of measurements, plus the interpretation of measurements, will determine how quickly you can react to changes in load. You want to ensure these settings allow the system to react quickly enough. However, while it is desirable to scale up the OCPUs immediately when CPU utilization is high, you may not want to drop the OCPUs immediately when the load drops and CPU utilization falls below the target range. If the load is fluctuating, you don’t want to drop the OCPU count on every dip in load, as that will lead to more situations where the allocated CPU falls short of demand and service levels could be affected. Specifying a delay for scale-down operations will prevent the system from scaling down immediately, without affecting the response time for scale-up events. You should be careful to only enable this delay after the system has stabilized, so as to not adversely affect scaling operations that require multiple iterations.
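A scale-down delay can be layered on top of whatever produces the scale-up or scale-down decision. The sketch below is one possible shape, with hypothetical names; the caller passes in the current time (for example from `time.monotonic()`) so the logic stays easy to test.

```python
from typing import Optional


class ScaleDownDelay:
    """Pass scale-up requests through at once; hold scale-down requests
    until utilization has stayed low for `delay_seconds`."""

    def __init__(self, delay_seconds: float) -> None:
        self.delay_seconds = delay_seconds
        self.low_since: Optional[float] = None

    def filter(self, direction: int, now: float) -> int:
        """direction is +1 (scale up), -1 (scale down), or 0 (in range)."""
        if direction >= 0:
            self.low_since = None  # load recovered, so restart the wait
            return direction
        if self.low_since is None:
            self.low_since = now
        if now - self.low_since >= self.delay_seconds:
            self.low_since = None
            return -1
        return 0
```

This simple version restarts the wait after every scale-down, which slows down a scale-down that needs several steps. Whether that is acceptable depends on your workload.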

Granularity of Scaling Operations

You can scale an ExaCS VM by as little as a single core per node in the cluster. However, with a large number of cores, you may want to scale by multiple cores when scaling up or down to speed reaction time to an event. You can try to estimate the step in the OCPU count by doing a little math.
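One way to do that math is sketched below. It assumes utilization falls in proportion to the cores added, which is a simplification, and the function name, the target value, and the minimum and maximum bounds are all illustrative.

```python
import math


def estimate_ocpu_step(
    current_ocpus: int,
    utilization: float,
    target_utilization: float,
    min_ocpus: int,
    max_ocpus: int,
) -> int:
    """Estimate how many OCPUs to add (positive) or remove (negative).

    Assumes the same load spread over N cores yields utilization
    proportional to 1/N.
    """
    needed = math.ceil(current_ocpus * utilization / target_utilization)
    needed = max(min_ocpus, min(max_ocpus, needed))
    return needed - current_ocpus
```

For example, 8 OCPUs running at 90% with a 50% target suggests 8 × 90 / 50 = 14.4, rounded up to 15 OCPUs, so a step of +7 rather than seven separate one-core operations.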

Calling the Cloud APIs

You can programmatically scale your Exadata VM by calling the appropriate REST APIs from your script. However, calling REST APIs can be complex, so Oracle provides a CLI (OCI CLI) that simplifies the calls. The OCI CLI still uses the REST APIs, but the CLI client simplifies authentication. If you don’t object to installing the CLI client, use the CLI. Otherwise, you can call the REST API directly. The examples in Part 2 assume you are using the CLI. You will need the following items to call the API:

◉ VM Cluster OCID (available from the console)

◉ Tenancy OCID (available from the console)

◉ User OCID (available from the console)

◉ Key fingerprint (generated during setup)

◉ Private key (with public key uploaded to OCI)

The specific commands used to scale ExaCS are different from those used by ExaCC (Exadata Cloud@Customer), but the concepts are the same. Note that on the command line you only have to specify the VM Cluster OCID, as the other parameters are stored in an OCI CLI configuration file. To scale an ExaCS VM cluster, issue the following OCI CLI command:

$ oci db cloud-vm-cluster update --cloud-vm-cluster-id <VM Cluster OCID> --cpu-core-count <#OCPUs>

For ExaCC, use the following:

$ oci db vm-cluster update --vm-cluster-id <VM Cluster OCID> --cpu-core-count <#OCPUs>
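From a script, the two commands above could be wrapped as in the sketch below. The function names are hypothetical, and the code assumes the OCI CLI is installed and already configured for the user running the script.

```python
import subprocess
from typing import List


def build_scale_command(vm_cluster_ocid: str, ocpus: int, exacc: bool = False) -> List[str]:
    """Build the OCI CLI argument list for an ExaCS or ExaCC scaling call."""
    if exacc:
        resource, id_flag = "vm-cluster", "--vm-cluster-id"
    else:
        resource, id_flag = "cloud-vm-cluster", "--cloud-vm-cluster-id"
    return [
        "oci", "db", resource, "update",
        id_flag, vm_cluster_ocid,
        "--cpu-core-count", str(ocpus),
    ]


def scale_vm_cluster(vm_cluster_ocid: str, ocpus: int, exacc: bool = False) -> None:
    """Run the scaling command; raises CalledProcessError if the CLI fails."""
    subprocess.run(build_scale_command(vm_cluster_ocid, ocpus, exacc), check=True)
```

Building the argument list separately from running it makes it possible to log or dry-run the command before letting the script change a live system.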

Autoscaling the Easy Way

If you don’t want to deal with writing and supporting your own script, Oracle RAC Pack has developed an autoscaling script for Exadata Cloud Service (on OCI or Exadata Cloud@Customer) that you are free to use. You can download it from MOS Note 2719916.1. It takes many of the parameters discussed above and implements some additional features.

The easiest way to reduce your TCO in the cloud is to stop paying for excess capacity when it is not required. Autoscaling is a great way to match workload demands with Exadata capacity, providing you true pay-for-use pricing. The published APIs make it simple to implement script-driven automation, tuned specifically for your workload. Try it out with your favorite scripting language, or download a fully tested implementation from My Oracle Support.
