Zero Downtime Migration (ZDM) 21c is available for download! ZDM is Oracle’s premier solution for moving your on-premises Oracle Database workloads to Oracle Cloud, supporting a variety of Oracle Database versions as the source and most Oracle Cloud Database Services as targets. Zero Downtime Migration 21c enhances the existing functionality by adding the long-requested Logical Migration workflow, which provides even more zero downtime migration choices!

Wednesday, March 31, 2021

NEW: Oracle Zero Downtime Migration 21c

Monday, March 29, 2021

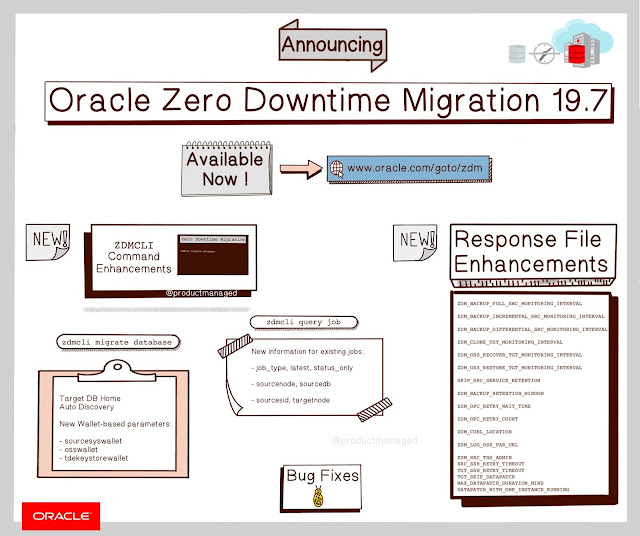

Announcing Oracle Zero Downtime Migration Release 19.7

ZDM is Oracle’s premier solution to move your on-premises Oracle Database workloads to the Oracle Cloud. ZDM supports a wide range of Oracle Database versions and Oracle Cloud Database Services as targets and ensures minimal to no production database impact during migration, following Oracle Maximum Availability Architecture (MAA) principles. Zero Downtime Migration Patch Release 19.7 enhances the existing functionality of ZDM, providing bug fixes and more control of the migration process.

Oracle Zero Downtime Migration supports Oracle Database versions 11g, 12c, 18c, 19c and newer versions and can migrate to Oracle Cloud Database Services Virtual Machines, Bare Metal, Exadata Cloud Services and Exadata Cloud at Customer. ZDM performs such Oracle Database migrations to the Oracle Cloud in eight simple steps, which can be scheduled and monitored as needed.

What's New in Oracle Zero Downtime Migration 19.7?

Zero Downtime Migration Patch Release 19.7 enhances the existing functionality of ZDM, providing bug fixes and more control of the migration process. Here is what’s new in a nutshell:

ZDMCLI Command Enhancements

ZDMCLI Response File Parameter Enhancements

ZDM Backup-related Response File Parameter Enhancements

Bug Fixes

Friday, March 26, 2021

Building a modern app with Oracle's Converged Database

Synergized, Secure, Scalable: Oracle’s Converged Database helps Development Teams Get Application-Smart

Business today runs on high-performance applications. But in order to innovate and differentiate in an always-on world, application development needs to be swift, efficient, and responsive to complex data. Oracle’s converged database delivers a unified solution loaded with the power to move business forward at speed.

When it comes to doing business in the 21st century, a company’s success depends on its applications. Not only do applications differentiate a company, their strength, speed, responsiveness, reliability, and performance can make or break the customer experience.

As a result, modern applications are more prevalent than ever. To customers, they represent brand experience. To employees, they’re the key to efficient operations and empowerment. To executives, apps drive business growth and competitiveness in the marketplace — factors measured externally by visibility as well as customer acquisition, retention, and loyalty. Internally, apps drive analytics and insight into all operations, which in turn facilitate better corporate decision-making.

In light of all this, drop a layer deeper (across any enterprise) and an important truth becomes crystal clear. Databases are the backbone of every application.

Databases not only contain rafts of crucial data, they keep it safe, secure, and available 24/7. But this data is diverse. Gone are the days of capturing simple transactions and generating monthly reports. Now, customers are their own transaction agents. They travel and transact from mobile phones, laptops, and other devices, any time of day or night. They nurture relationships to other people, places, and things. Their needs and behaviors drive the always-on world.

When it comes to capturing all this data, this trend of transactions pushing out to customers creates exponential compounding of data. But let that data remain latent or untapped, and not only will a company’s applications suffer, they’ll miss the real-time cues that are most valuable to any organization.

Many companies are making their way through this complex landscape with piecemeal solutions: technologies and architectures reached for as needed over time, some of them proprietary, some home-grown, others open-source. Regardless, the resulting patchwork can’t handle the data load. Data fragments. Security becomes inconsistent. Administration is ad hoc. The systems become brittle as data compounds, which quickly hampers a company’s agility, as well as its ability to innovate.

This exacts a high price. A literal and metaphoric cost that can be radically mitigated with Oracle’s converged database.

Let’s unpack some of the key ways Oracle’s synergized solution delivers the power to keep you smart. Application Smart. On our way there, we’ll begin with a fairly common view of the challenges many companies face, and how IT organizations typically respond — and can course-correct.

The modern application experience is continuously evolving. This change in business needs demands fluid adaptation when it comes to the back-end. Tech stack, application architecture, dev/test, release processes, support: IT’s responsiveness, and its ability to efficiently handle various workflows, becomes the fulcrum of the enterprise — the leverage-point that requires deliberate strategies and savvy choices to deliver the necessary simplification, unification, and automation of architectures, technologies, and processes.

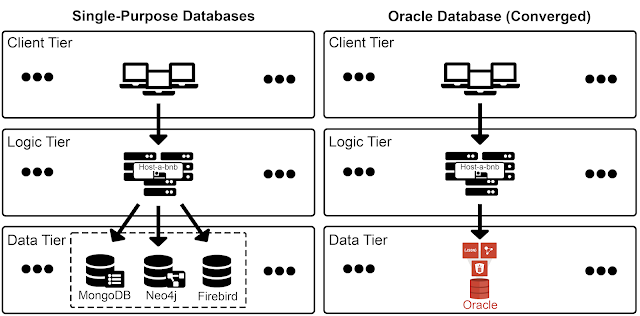

Without these deliberate choices, applications that combine analytics, geo-location, network relationships, machine learning, and transaction activity are often fueled by separate, single-purpose databases and their resulting technology stacks. That may seem viable on the surface, at first blush. But within this solution construct, the more data moves, the more it degrades an application’s performance. Development time increases. Release schedules lag. Apps become less competitive. And each one requires IT specialists trained in specific stacks.

The overall hit to efficiency can be devastating.

To demonstrate this with specifics, let’s take a quick look at the data, data types, and demands of a truly modern application.

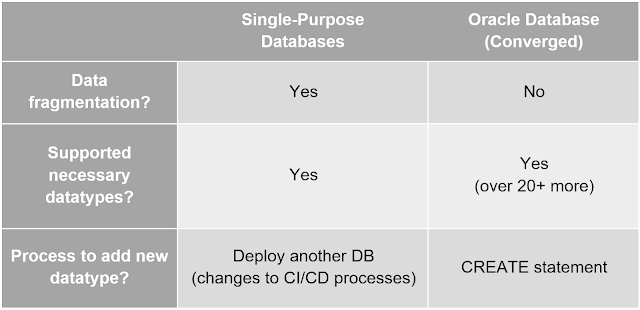

For a long time, data meant rows and columns representing relational data. As IT has advanced, data types such as key-value, spatial, graph, blockchain, document, time series, and IoT have emerged. Each of these datasets must co-exist with relational data. Seen in its totality, every modern, data-driven application has inherent data-type complexity. As a result, workloads like transactional, analytics, machine learning, and IoT require different database algorithms to meet their unique demands. This in turn has given rise to single-purpose database solutions.

But while single-purpose databases might appear to be best-of-breed in isolation, they quickly become worst-of-weakness when cobbled and patched together.

As an example, let’s take a look at the retail application of “eShop.biz”, a fictitious company with a common challenge that might come to us after having chosen independent, single-purpose databases as its architecture for online retail.

As you can see in the graphic below, all “eShop.biz” retail services (product and customer catalogs; payment, geo-location, cache, and recommendation engine services; plus payment, finance, inventory, and delivery services) each rely on a single-purpose database.

Wednesday, March 24, 2021

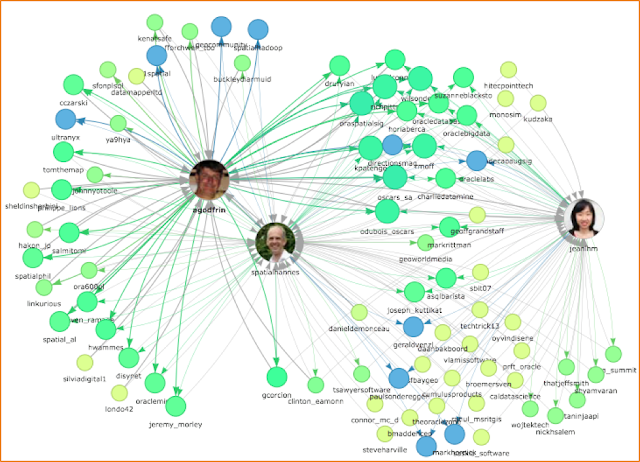

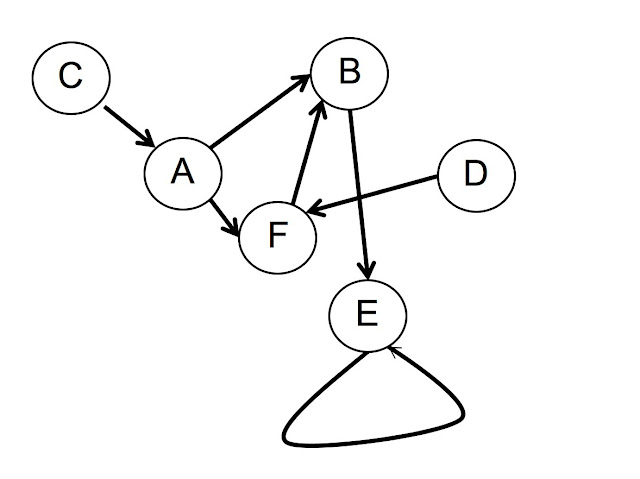

Graph Databases: What Can They Do?

How Do Graphs Work?

Why Have Graphs Become Popular?

What Do Graphs Do?

Graph Analytics in Oracle Database and Oracle Autonomous Database

How Does Graph Technology Work?

Monday, March 22, 2021

JSON_SERIALIZE in Oracle Database 19c

The JSON_SERIALIZE function converts a JSON document from any supported data type to text.

◉ The Problem

We can store JSON data in a number of different data types, including binary types. Let's create a test table to demonstrate the issue.

-- DROP TABLE json_documents PURGE;

CREATE TABLE json_documents (

id NUMBER,

data BLOB,

CONSTRAINT json_documents_is_json CHECK (data IS JSON)

);

INSERT INTO json_documents (id, data) VALUES (1, '{"id":1,"first_name":"Iron","last_name":"Man"}');

COMMIT;

If we try to display the data directly, we don't get anything useful.

SELECT data FROM json_documents;

DATA

--------------------------------------------------------------------------------

7B226964223A312C2266697273745F6E616D65223A2249726F6E222C226C6173745F6E616D65223A

SQL>
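The hexadecimal string shown above is just the raw bytes of the JSON text. As a quick sanity check, the first seven bytes can be decoded by hand. This is a minimal sketch that assumes a database character set compatible with ASCII; the column alias is illustrative.

```sql
-- Decode the first seven bytes (7B 22 69 64 22 3A 31) of the hex output.
-- Assumes an ASCII-compatible database character set.
SELECT UTL_RAW.cast_to_varchar2(HEXTORAW('7B226964223A31')) AS first_bytes
FROM   dual;
```

Under that assumption the query should return {"id":1, which matches the start of the document we inserted.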

We can manually convert a BLOB to a CLOB. For example, we can use the BLOB_TO_CLOB function created by the blob_to_clob.sql script or, for small amounts of data, the UTL_RAW package.

SELECT blob_to_clob(data) AS data FROM json_documents;

DATA

--------------------------------------------------------------------------------

{"id":1,"first_name":"Iron","last_name":"Man"}

SQL>

SELECT UTL_RAW.cast_to_varchar2(data) AS data FROM json_documents;

DATA

--------------------------------------------------------------------------------

{"id":1,"first_name":"Iron","last_name":"Man"}

SQL>

We could also use the JSON_QUERY function to return the whole document, rather than a fragment.

SELECT JSON_QUERY(data, '$') AS data FROM json_documents;

DATA

----------------------------------------------------------------------------------------------------

{"id":1,"first_name":"Iron","last_name":"Man"}

1 row selected.

SQL>

◉ JSON_SERIALIZE Basic Usage

The documentation provides the following description of the JSON_SERIALIZE function.

JSON_SERIALIZE (target_expr [ json_query_returning_clause ] [ PRETTY ]

[ ASCII ] [ TRUNCATE ] [ json_query_on_error_clause ])

The target expression is the JSON we want to convert.

In its basic form we can convert the JSON data from any supported data type to text, similar to what we did with the BLOB_TO_CLOB function.

SELECT JSON_SERIALIZE(data) AS data FROM json_documents;

DATA

--------------------------------------------------------------------------------

{"id":1,"first_name":"Iron","last_name":"Man"}

SQL>

We can use the JSON_SERIALIZE function to convert the output from other SQL/JSON calls. In this case we use the JSON_OBJECT function to produce a JSON document in binary form, then convert it to text using the JSON_SERIALIZE function.

SELECT JSON_SERIALIZE(

JSON_OBJECT(empno, ename, hiredate RETURNING BLOB)

PRETTY) AS data

FROM emp

WHERE empno = 7369;

DATA

--------------------------------------------------------------------------------

{

"empno" : 7369,

"ename" : "SMITH",

"hiredate" : "1980-12-17T00:00:00"

}

SQL>

◉ Format Output

The returning clause works like that of the other SQL/JSON functions.

The PRETTY keyword displays the output in a human-readable form, rather than minified.

SELECT JSON_SERIALIZE(a.data PRETTY) AS data

FROM json_documents a

WHERE a.data.first_name = 'Iron';

DATA

--------------------------------------------------------------------------------

{

"id" : 1,

"first_name" : "Iron",

"last_name" : "Man"

}

SQL>
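The returning clause and the PRETTY keyword can be combined. When the returning clause is omitted the function returns VARCHAR2(4000), so for documents that might be larger it may be worth asking for a CLOB. The following is a minimal sketch against the same json_documents test table, not a recommendation for every case.

```sql
-- Return the pretty-printed document as a CLOB rather than the
-- default VARCHAR2(4000). The returning clause comes before PRETTY.
SELECT JSON_SERIALIZE(a.data RETURNING CLOB PRETTY) AS data
FROM   json_documents a
WHERE  a.id = 1;
```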

The TRUNCATE keyword indicates the output should be truncated to fit the return type. In the following example the return type is VARCHAR2(10), so the output is truncated to fit.

SELECT JSON_SERIALIZE(a.data RETURNING VARCHAR2(10) TRUNCATE) AS data

FROM json_documents a

WHERE a.data.first_name = 'Iron';

DATA

---------------------------------------------------------------------------------

{"id":1,"f

SQL>

Unlike some of the other SQL/JSON functions, the TRUNCATE keyword doesn't seem necessary, as the output appears to be truncated to match the returning clause even without it.

SELECT JSON_SERIALIZE(a.data RETURNING VARCHAR2(10)) AS data

FROM json_documents a

WHERE a.data.first_name = 'Iron';

DATA

---------------------------------------------------------------------------------

{"id":1,"f

SQL>

The ASCII keyword indicates the output should convert any non-ASCII characters to JSON escape sequences.
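As a minimal sketch of the ASCII keyword, the following query serializes a document containing a non-ASCII character. The sample document is an assumption made for illustration, and the literal will only be sent to the database correctly if the client character set can represent the "ë" character.

```sql
-- Illustrative document only: it is not part of the test table above.
-- With ASCII specified, the non-ASCII character should be returned
-- as a \u escape sequence rather than as the character itself.
SELECT JSON_SERIALIZE('{"first_name":"Zoë"}' ASCII) AS data
FROM   dual;
```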

◉ Error Handling

If there are any failures during the processing of the data, the default response is to return a NULL value. The way an error is handled can be specified explicitly with the ON ERROR clause.

-- Default behaviour.

SELECT JSON_SERIALIZE('This is not JSON!' NULL ON ERROR) AS data

FROM dual;

DATA

--------------------------------------------------------------------------------

SQL>

SELECT JSON_SERIALIZE('This is not JSON!' ERROR ON ERROR) AS data

FROM dual;

*

ERROR at line 2:

ORA-40441: JSON syntax error

SQL>

◉ PL/SQL Support

There is no support for JSON_SERIALIZE in direct PL/SQL assignments.

SET SERVEROUTPUT ON

DECLARE

l_blob BLOB;

l_clob CLOB;

BEGIN

l_blob := UTL_RAW.cast_to_raw('{"id":1,"first_name":"Iron","last_name":"Man"}');

l_clob := JSON_SERIALIZE(l_blob);

DBMS_OUTPUT.put_line('After : ' || l_clob);

END;

/

l_clob := JSON_SERIALIZE(l_blob);

*

ERROR at line 6:

ORA-06550: line 6, column 13:

PLS-00201: identifier 'JSON_SERIALIZE' must be declared

ORA-06550: line 6, column 3:

PL/SQL: Statement ignored

SQL>

The simple workaround for this is to make the assignment using a query from dual.

DECLARE

l_blob BLOB;

l_clob CLOB;

BEGIN

l_blob := UTL_RAW.cast_to_raw('{"id":1,"first_name":"Iron","last_name":"Man"}');

SELECT JSON_SERIALIZE(l_blob)

INTO l_clob

FROM dual;

DBMS_OUTPUT.put_line('After : ' || l_clob);

END;

/

After : {"id":1,"first_name":"Iron","last_name":"Man"}

SQL>
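If the conversion is needed in several places, the query from dual can be wrapped in a small helper function. This is only a sketch: the function name json_blob_to_text is hypothetical, and RETURNING CLOB is added on the assumption that documents may exceed the default VARCHAR2(4000) return size.

```sql
-- Hypothetical helper that hides the "query from dual" workaround.
CREATE OR REPLACE FUNCTION json_blob_to_text (p_blob IN BLOB)
  RETURN CLOB
AS
  l_clob CLOB;
BEGIN
  -- JSON_SERIALIZE is called from SQL, not assigned directly in PL/SQL.
  SELECT JSON_SERIALIZE(p_blob RETURNING CLOB)
  INTO   l_clob
  FROM   dual;

  RETURN l_clob;
END json_blob_to_text;
/

-- Example usage against the test table.
SELECT json_blob_to_text(data) AS data FROM json_documents;
```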

◉ 21c Update

Oracle 21c introduced the JSON data type. The JSON_SERIALIZE function also supports this new data type.
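A minimal sketch of how this might look on 21c or later is shown below. The table name json_documents_21c is hypothetical, and the JSON constructor is used to make the conversion from text explicit.

```sql
-- Requires Oracle Database 21c or later. The table name is hypothetical.
CREATE TABLE json_documents_21c (
  id    NUMBER,
  data  JSON
);

INSERT INTO json_documents_21c (id, data)
VALUES (1, JSON('{"id":1,"first_name":"Iron","last_name":"Man"}'));

COMMIT;

-- Convert the binary JSON type back to pretty-printed text.
SELECT JSON_SERIALIZE(a.data PRETTY) AS data
FROM   json_documents_21c a;
```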

Friday, March 19, 2021

It’s Independence Day every day with the new Autonomous Data Warehouse Data Tools

In an earlier blog, we reviewed how Oracle Departmental Data Warehouse enables business teams to get the deep, trustworthy, data-driven insights they need to make quick decisions. We described how the governed, secure solution reduces risks and complexity while increasing both IT and analysts’ productivity - allowing IT teams to rely on a simple, reliable, and repeatable approach for all data analytics requests from business departments.

Did we stop there? No we didn’t.

We aim to keep providing more value to analysts, as well as to line of business developers, data scientists, and DBAs. To that end, we recently released a new suite of data tools included for free in Autonomous Database.

Indeed, while Oracle Departmental Data Warehouse enables business users in finance, HR and other departments to independently set up data marts in minutes and rapidly get insights from a single source of truth, they may still have needed to turn to IT for operations such as data loading and transformation. The new Autonomous Data Warehouse data tools further decrease business users’ reliance on IT teams – representing a benefit for both groups.

This new suite of built-in, self-service tools includes:

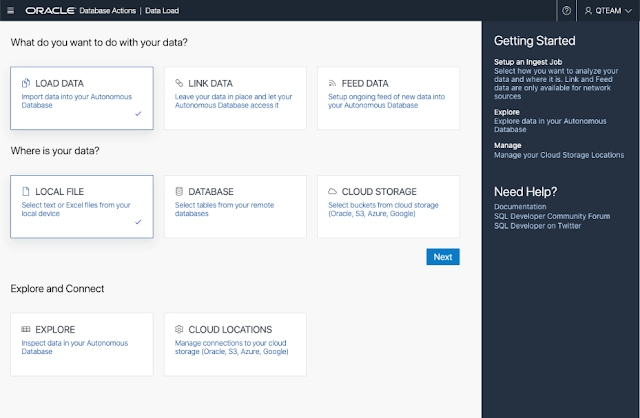

1. Data Loading

Business users can use drag-and-drop data loading to swiftly load data themselves from local files (such as spreadsheets), databases, and object storage (Oracle and non-Oracle) into Autonomous Data Warehouse. No need to call on IT.

2. Data Transformation

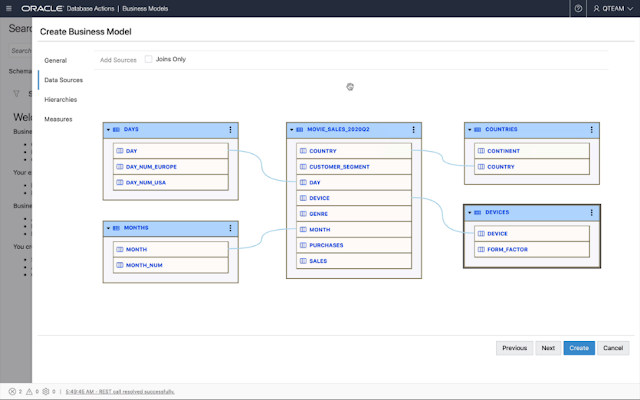

3. Business Modelling

4. Data Insights

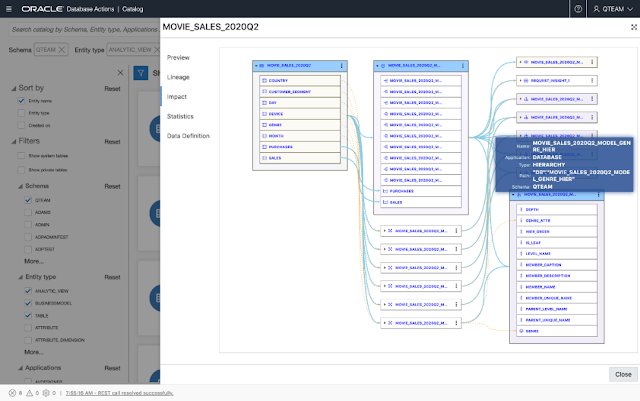

5. Catalog

Wednesday, March 17, 2021

How to Simplify Development and Optimize your Data with Oracle's Converged Database

In our digitized, data-fueled world, enterprises must create unique assets, and make the most of them, in order to remain competitive. This puts developers front and center when it comes to managing and optimizing database performance. Developers not only shape the underlying technologies throughout the stack, they now drive efficiency and best practices from a variety of hybrid roles (DevOps, Full-Stack, etc.). But developers are often forced to choose between data productivity and their own.

Oracle's converged database has rendered that dilemma obsolete.

Developers know better than anyone that the digital landscape is ever-changing. New languages, new frameworks, new tools, new protocols — the demands and opportunities can quickly become overwhelming unless there’s a clear signal amid the noise. In keeping with the KISS (“keep it simple”) principle, developers strive for modern development while not sacrificing operational efficiencies required to keep their companies competitive.

Single-purpose databases, often known as purpose-built databases, are engineered to solve targeted problems. However, when operations grow, integration of multiple single-purpose databases is required.

Enter Oracle's converged database — a platform loaded with a suite of capabilities that are simpler, easier, and far less error-prone than multiple single-purpose databases combined.

In this article, we’ll highlight a few key advantages delivered by Oracle converged database that help our customers keep it simple while staying smart. Development Smart. Especially when developing with multiple data types.

As companies pursue the efficiency and relevancy necessary for their success, they must digitize complex processes and data-integration at scale. As a result, developer teams are faced with a difficult choice: optimize for fast application-development now, or prioritize easier data-capture later. Developer productivity or data productivity. It’s a difficult either/or.

In the first case (developer productivity), developer teams might spin up single-purpose databases for specific projects (especially when it comes to greenfield development). Each database offers a low barrier to entry that often includes a convenient data model for the purpose at hand along with related APIs. Development moves quickly, as each database has its own operational characteristics that are useful in the short term. But as a project grows and additional databases or cloud services are required, complexity multiplies and data fragments. Efficiencies gained are quickly lost, as each database’s once-enticing characteristics no longer function at scale. As a result, developer teams face long-term difficulties such as lack of standardization (APIs, query languages, etc.), data fragmentation, security risks, and management gaps.