Introducing Autonomous Database Select AI - enabling you to query your data using natural language. Combining generative AI large language models (LLMs) with Oracle SQL empowers you to describe what you want - declarative intent - and let the database generate the SQL query relevant to your schema. Some LLMs might be good at generating SQL, but being able to run that SQL against your database is another matter. Select AI enables generating SQL that is specific to your database.

Since LLMs are trained on huge volumes of text data, they can understand the nuances and even intended meaning in most natural language queries. Using natural language to generate SQL reduces the time required to generate queries, simplifies query building, and helps to minimize or eliminate specialized SQL knowledge. Using natural language, deriving information from your database that involves writing queries with multiple joins, nested subqueries, and other SQL constructs becomes much easier and faster. While analytics tools make it easy to query and understand database data, the ability to interact with your SQL database using natural language prompts can help increase productivity of expert and non-expert SQL users to query their database without writing queries.

By learning effective SQL query patterns from curated training data, LLMs can produce more efficient queries - enabling them to perform better. As part of Oracle Autonomous Database, Select AI inherits all security and authentication features of the database.

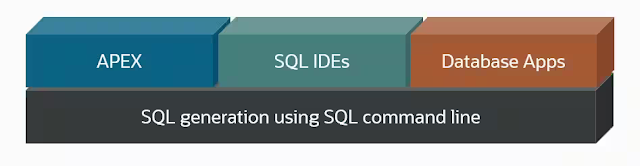

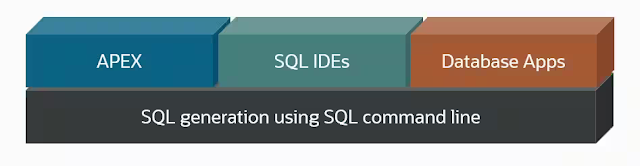

By virtue of being integrated with Oracle SQL, this capability is available through any SQL IDE, SQL Developer Web, Oracle Application Express (APEX), and Oracle Machine Learning Notebooks. Any application that invokes SQL and has an AI provider account also has access to Select AI.

Read on to explore how to take advantage of this new capability in Autonomous Database.

LLMs are not enough

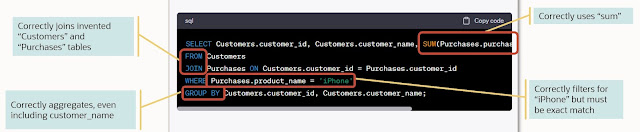

Some LLMs are good at generating SQL, often correctly inferring what the user wants and inventing table and column names in the structure of a valid SQL query. Of course, this query works only if your database has tables with columns as generated. In a sense, the LLM hallucinates the tables and columns needed to produce the SQL query. You can use such a generated query as a template and manually change table and column names as needed, but what if you didn't need to?

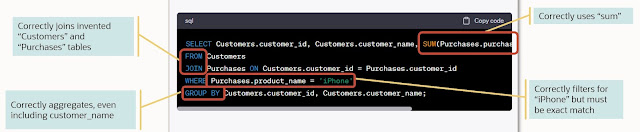

Consider the following prompt “Generate the SQL to find the total amount spent by each customer on iPhones.” Below, we used OpenAI's ChatGPT and see the use of Customers and Purchases tables, a group-by clause, the aggregation using SUM, and a filter using the product name. Such LLMs provide a great start for SQL generation, but they need context in the form of the user’s specific tables and columns. You could manually add details to your prompt with the table definition, but no doubt you’d prefer that the system identifies relevant tables and columns and automatically augment the prompt. This augmented prompt enables the LLM to produce a much more relevant query.

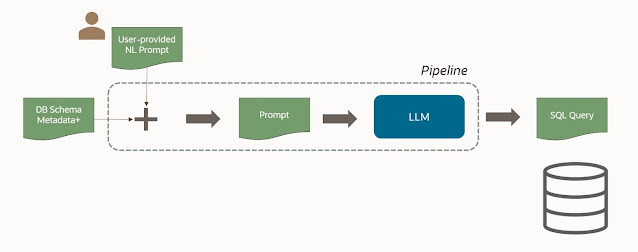

To produce a runnable query, we need to anchor this to a specific schema, and this is where Select AI comes in. By setting up a profile, you can by default use your local schema, but you can also optionally specify the schema(s) and the table(s) you want to be considered when generating queries.

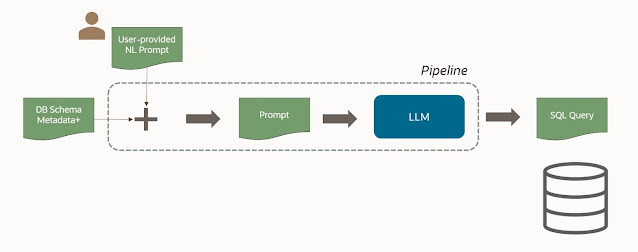

As depicted below, the original natural language query is augmented with metadata from schema(s) identified in the user’s profile. Feeding this to the LLM allows the LLM to produce a SQL query runnable against your database, and ideally provide the results you’re looking for.

We say ideally because even with an augmented prompt, LLMs aren’t perfect and so it will be important to vet the results from the query and possibly the query itself.

Examples using Select AI

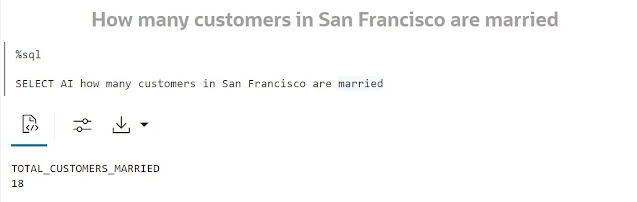

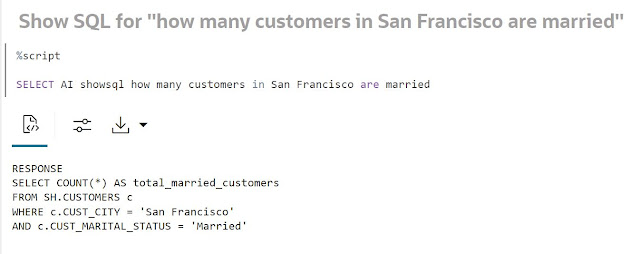

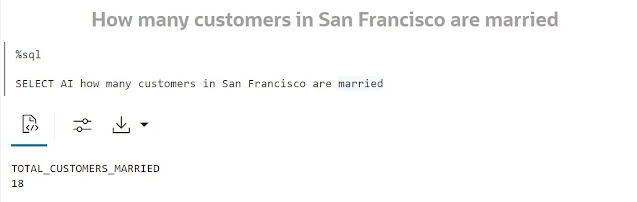

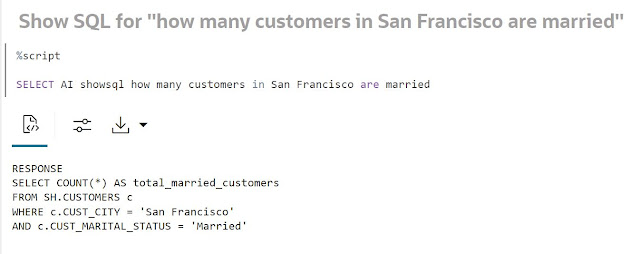

Let’s look at a few examples. We’ll start simply. How many customers in San Francisco are married? Using the keyword “AI”, we run the SQL command (shown here in OML Notebooks using the %sql interpreter) “SELECT AI how many customers in San Francisco are married”. We see the result as well as the SQL query generated by our LLM adding the keyword “showsql” after “SELECT AI”.

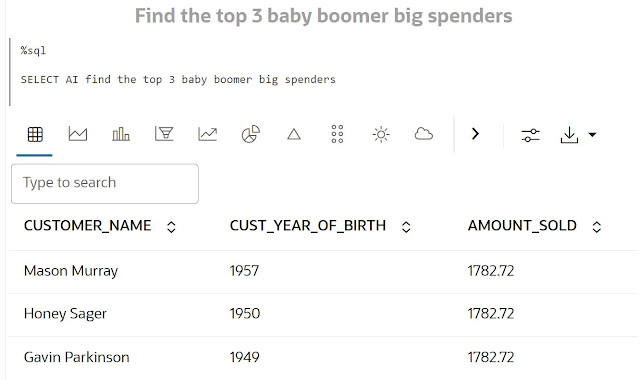

The next query highlights using the LLM’s broader knowledge. To “find the top 3 baby boomer big spenders” requires an understanding of what a “baby boomer” is, or more importantly, what date-of-birth years apply, and that finding the top 3 big spenders requires ordering by AMOUNT_SOLD. This finds those customers who buy big ticket items. The LLM draws on its broader understanding of these concepts to correctly generate an applicable query.

You can use the chat option to interact with the LLM as you would a chatbot with a single message. In the following example, a developer may need to create a table. By describing the table you want, it generates a CREATE TABLE statement you can use directly or modify to suite your needs.

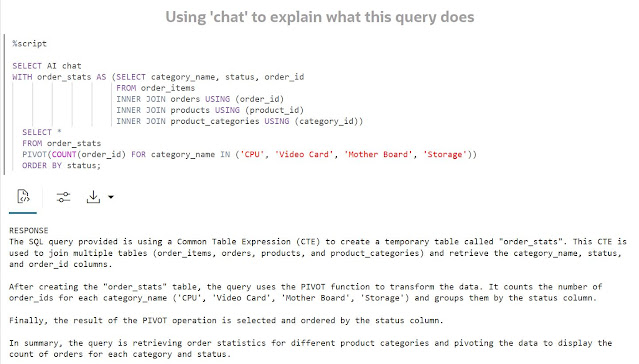

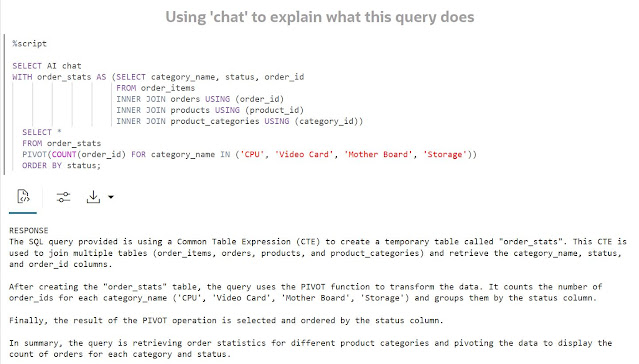

You can also use ‘chat’ to ask “what is Oracle Autonomous Database?” or understand what a query is doing, as shown below.

DBMS_CLOUD_AI Package

Enabling this feature is a new package, DBMS_CLOUD_AI, in Autonomous Database that enables the use of LLMs for generating SQL from natural language prompts. The package provides access to user specified LLMs along with knowledge of the user’s accessible database metadata. This enables producing runnable SQL queries applicable to that schema. The DBMS_CLOUD_AI package currently integrates with AI providers such as OpenAI and Cohere, with others planned to follow. To use this feature, you need an account with the AI provider and must supply your API credentials to Autonomous Database.

Getting started

To get started with Select AI, sign into your Autonomous Database instance with administrator privileges and add your user (here MY_USER) to the ACL list and grant access to the DBMS_CLOUD_AI package:

BEGIN

DBMS_NETWORK_ACL_ADMIN.APPEND_HOST_ACE(

host => 'api.openai.com',

ace => xs$ace_type(privilege_list => xs$name_list('http'),

principal_name => 'MY_USER',

principal_type => xs_acl.ptype_db)

);

END;

grant execute on DBMS_CLOUD_AI to MY_USER;

Create a database credential to your AI provider account. In the case of OpenAI, the password is the uniquely generated token for API usage.

BEGIN

DBMS_CLOUD.DROP_CREDENTIAL (

credential_name => 'OPENAI_CRED');

DBMS_CLOUD.CREATE_CREDENTIAL(

credential_name => 'OPENAI_CRED',

username => 'OPENAI',

password => '...' );

END;

Create your DBMS_CLOUD_AI profile. Here, we list the schema(s) we want to be considered (e.g., SH) and, optionally, any tables (e.g., customers, sales, etc.).

BEGIN

DBMS_CLOUD_AI.drop_profile(profile_name => 'OPENAI');

DBMS_CLOUD_AI.create_profile(

profile_name => 'OPENAI',

attributes => '{"provider": "openai",

"credential_name": "OPENAI_CRED",

"object_list": [{"owner": "SH", "name": "customers"},

{"owner": "SH", "name": "sales"},

{"owner": "SH", "name": "products"},

{"owner": "SH", "name": "countries"}]

}');

END;

Lastly, set your DBMS_CLOUD_AI profile, which must be done in each database session.

BEGIN

DBMS_CLOUD_AI.SET_PROFILE(

profile_name => 'OPENAI'

);

END;

Now, you’re ready to invoke your first Select AI prompt.

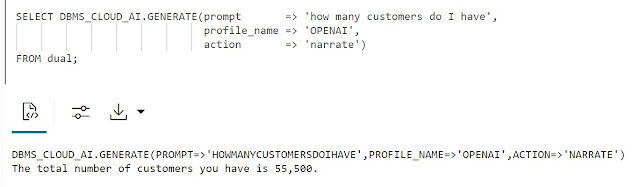

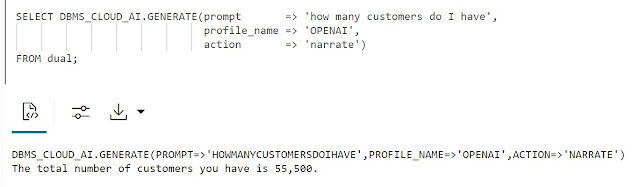

Note that if you're using a stateless environment, like the Database Actions SQL worksheet in Autonomous Database, use the DBMS_CLOUD_AI.GENERATE function in your SQL query. This allows you to specify the AI profile directly for a stateless invocation, as shown in the following example.

Benefits and limitations

The ability to generate SQL queries using natural language to get useful results sounds amazing. You get ease of use without SQL expertise. This feature is seamlessly integrated with your database and because it’s SQL, it readily integrates with your analytics tools and applications. It can help speed up the information retrieval process since you don’t have to write the SQL yourself or wait for a SQL expert to write it for you. The SQL is also specific to your database tables.

Despite how well LLMs perform – even with augmented prompts – they’re not perfect. As such, the results and possibly the corresponding queries should be reviewed before relying on the results. The way you write the prompt – as with any LLM interaction – can have a significant effect on the output.

While large language models (LLMs) are adept at generating useful and relevant content, they also can generate incorrect and false information - known as hallucinations. This includes SQL queries that do not produce the desired results. As a result of being trained on a broad set of text documentation and content, typically from the Internet, LLMs may have incorporated patterns from invalid or malicious content, which may impact the accuracy and security of your results.

As noted above, Select AI augments the user-specified prompt with database metadata to mitigate hallucinations from the LLM. The augmented prompt is then sent to the user specified LLM to produce the query. Select AI automates the process that the user could do manually.

Source: oracle.com

0 comments:

Post a Comment