Oracle Database 19c (release 19.4 and later) now includes the ability to dynamically scale compute for Pluggable Databases up and down in response to workload demands using CPU min/max ranges. Scale-up and scale-down of compute resources available to a Pluggable Database can be configured to happen automatically, instantly, and dynamically without intervention.

Why does this matter? All systems (whether bare metal or virtual machines) have some amount of un-used compute capacity at any given point in time. Database workloads are not constant and can vary significantly throughout the day. Dynamic CPU Scaling allows Pluggable Databases to automatically consume extra capacity when needed if it’s available on the system. This capability eliminates the need to over-provision at the system level, resulting in much more predictable performance while also taking advantage of un-used compute capacity. For databases running in a Cloud subscription model, Dynamic CPU Scaling makes more effective use of provisioned capacity and therefore reduces subscription costs.

Prior to this new feature, DBAs had to allocate resources for peak loads to a database to ensure scale up was possible, and to ensure needed resources weren’t taken by other applications, commonly known as “Noisy Neighbors”. The ability to use min/max ranges in Oracle Database 19.4 provides a better approach to the “Noisy Neighbor” problem because it doesn’t rely on over-provisioning, which is difficult to control and can result in severe performance problems. The “Noisy Neighbor” problem is really caused by the over-provisioning of resources as we will see below.

First, some basics…

Servers can be configured as Bare Metal or as Virtual Machines. Bare Metal servers can use all of the CPU cores available on the chips, or the number of active cores can be limited in the system BIOS. Each processor core can also have multiple hyperthreads, which are referred to as a Virtual CPU (vCPU). The current generation of Intel processors have 2 hyperthreads (2 vCPUs) for each processor core.

Virtual Machines are typically configured on top of Virtual CPUs (vCPU) and can be configured to use all of the available vCPUs or a subset of vCPUs on a server. Of course, it is also possible to over-provision Virtual Machines by giving VMs on a machine more vCPUs than the machine has available.

For example, assume a machine has 100 vCPUs (hyperthreads) available. Creating 10 VMs with 10 vCPU each would not be over-provisioning the system. However, creating 20 VMs with 10 vCPU each would exceed the number of available vCPUs on the system, and we would say this system is over-provisioned by a factor of 2X. If all 20 VMs simultaneously consumed all of the vCPU allocated to them, the system as a whole would experience severe performance problems. This condition can be viewed at the system level by looking at the processor run queue using tools such as the “sar -q” command on Linux.

On top of the Bare Metal or Virtual Machine, each instance of an Oracle database is configured to use a number of vCPUs by enabling Oracle Database Resource Manager (DBRM) and setting the CPU_COUNT parameter. If DBRM is not configured, the CPU_COUNT setting simply reflects the total vCPUs on the system. Enabling DBRM allows the CPU_COUNT setting to control the number of vCPUs available to the database. This applies at both the CDB (Container Database) and PDB (Pluggable Database) levels.

As noted earlier, we can over-provision at the system level, but it’s also possible to over-provision at the database level as well. For example, assume a Virtual Machine is configured with 10 vCPUs and we create 10 databases with 2 vCPU each (CPU_COUNT=2). That VM would therefore be over-provisioned by a factor of 2X. If all 10 databases simultaneously consumed all of the vCPU allocated to them, the system (Bare Metal or VM) as a whole would experience severe performance problems.

In short, over-provisioning is essentially “selling” the same resource multiple times to multiple users. It’s making a promise of resources in the hopes that not everyone will need those resources at the same time. The danger of over-provisioning is when all of the users (all of the databases) become active at the same time. Overrunning the available vCPUs puts work into the run queue of the processor, and the system can become unstable or even unresponsive.

The most common approach to managing CPU resources is to NOT over-provision and simply allocate CPU according to what’s available. While this certainly is quite simple to implement, it will always result in under-utilization of system resources. All databases will spend some amount of time operating at LESS than the maximum allocated CPU (vCPU), so this approach results in lower utilization and therefore higher cost to the organization.

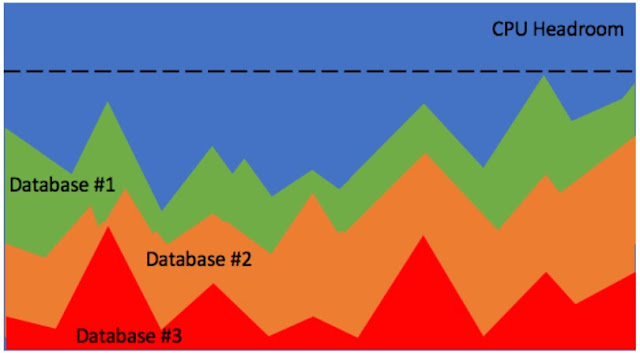

Whether CPU is allocated to Virtual Machines that each contain a single database, or CPU is allocated to individual databases residing on a single Virtual Machine, the result is the same. Each database is given an amount of excess CPU headroom, and CPU usage below that threshold will fluctuate. The result is excess un-used CPU capacity and higher costs as shown in the following graphic.

Why does this matter? All systems (whether bare metal or virtual machines) have some amount of un-used compute capacity at any given point in time. Database workloads are not constant and can vary significantly throughout the day. Dynamic CPU Scaling allows Pluggable Databases to automatically consume extra capacity when needed if it’s available on the system. This capability eliminates the need to over-provision at the system level, resulting in much more predictable performance while also taking advantage of un-used compute capacity. For databases running in a Cloud subscription model, Dynamic CPU Scaling makes more effective use of provisioned capacity and therefore reduces subscription costs.

A Better Approach to the Noisy Neighbor Problem

Prior to this new feature, DBAs had to allocate resources for peak loads to a database to ensure scale up was possible, and to ensure needed resources weren’t taken by other applications, commonly known as “Noisy Neighbors”. The ability to use min/max ranges in Oracle Database 19.4 provides a better approach to the “Noisy Neighbor” problem because it doesn’t rely on over-provisioning, which is difficult to control and can result in severe performance problems. The “Noisy Neighbor” problem is really caused by the over-provisioning of resources as we will see below.

First, some basics…

Servers can be configured as Bare Metal or as Virtual Machines. Bare Metal servers can use all of the CPU cores available on the chips, or the number of active cores can be limited in the system BIOS. Each processor core can also have multiple hyperthreads, which are referred to as a Virtual CPU (vCPU). The current generation of Intel processors have 2 hyperthreads (2 vCPUs) for each processor core.

Virtual Machines are typically configured on top of Virtual CPUs (vCPU) and can be configured to use all of the available vCPUs or a subset of vCPUs on a server. Of course, it is also possible to over-provision Virtual Machines by giving VMs on a machine more vCPUs than the machine has available.

For example, assume a machine has 100 vCPUs (hyperthreads) available. Creating 10 VMs with 10 vCPU each would not be over-provisioning the system. However, creating 20 VMs with 10 vCPU each would exceed the number of available vCPUs on the system, and we would say this system is over-provisioned by a factor of 2X. If all 20 VMs simultaneously consumed all of the vCPU allocated to them, the system as a whole would experience severe performance problems. This condition can be viewed at the system level by looking at the processor run queue using tools such as the “sar -q” command on Linux.

Note that Oracle Cloud (unlike some other Cloud vendors) does not over-provision Virtual Machine compute shapes. Note also that Oracle charges customers for usage of processor cores rather than hyperthreads. One OCPU equals one processor core in the Oracle Cloud, while some other Cloud providers charge based on vCPUs (hyperthreads) or approximately ½ core.

On top of the Bare Metal or Virtual Machine, each instance of an Oracle database is configured to use a number of vCPUs by enabling Oracle Database Resource Manager (DBRM) and setting the CPU_COUNT parameter. If DBRM is not configured, the CPU_COUNT setting simply reflects the total vCPUs on the system. Enabling DBRM allows the CPU_COUNT setting to control the number of vCPUs available to the database. This applies at both the CDB (Container Database) and PDB (Pluggable Database) levels.

As noted earlier, we can over-provision at the system level, but it’s also possible to over-provision at the database level as well. For example, assume a Virtual Machine is configured with 10 vCPUs and we create 10 databases with 2 vCPU each (CPU_COUNT=2). That VM would therefore be over-provisioned by a factor of 2X. If all 10 databases simultaneously consumed all of the vCPU allocated to them, the system (Bare Metal or VM) as a whole would experience severe performance problems.

In short, over-provisioning is essentially “selling” the same resource multiple times to multiple users. It’s making a promise of resources in the hopes that not everyone will need those resources at the same time. The danger of over-provisioning is when all of the users (all of the databases) become active at the same time. Overrunning the available vCPUs puts work into the run queue of the processor, and the system can become unstable or even unresponsive.

CPU Allocation Approach

The most common approach to managing CPU resources is to NOT over-provision and simply allocate CPU according to what’s available. While this certainly is quite simple to implement, it will always result in under-utilization of system resources. All databases will spend some amount of time operating at LESS than the maximum allocated CPU (vCPU), so this approach results in lower utilization and therefore higher cost to the organization.

Whether CPU is allocated to Virtual Machines that each contain a single database, or CPU is allocated to individual databases residing on a single Virtual Machine, the result is the same. Each database is given an amount of excess CPU headroom, and CPU usage below that threshold will fluctuate. The result is excess un-used CPU capacity and higher costs as shown in the following graphic.
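The VM-level and database-level examples above both reduce to one ratio: the total vCPUs promised divided by the vCPUs that physically exist. The following Python sketch computes that ratio for both cases. `overprovisioning_factor` is a hypothetical helper written for illustration; it is not an Oracle or Linux tool.

```python
def overprovisioning_factor(allocations, available_vcpus):
    """Ratio of vCPUs promised to vCPUs that physically exist.

    A value above 1.0 means the same vCPUs have been promised more than once.
    """
    if available_vcpus <= 0:
        raise ValueError("available_vcpus must be positive")
    return sum(allocations) / available_vcpus


# System level: 20 VMs x 10 vCPUs each on a 100-vCPU machine.
vm_factor = overprovisioning_factor([10] * 20, 100)

# Database level: 10 databases with CPU_COUNT=2 on a 10-vCPU VM.
db_factor = overprovisioning_factor([2] * 10, 10)

# Not over-provisioned: 10 VMs x 10 vCPUs each on the same 100-vCPU machine.
safe_factor = overprovisioning_factor([10] * 10, 100)

print(f"VM level: {vm_factor:.1f}X, DB level: {db_factor:.1f}X, safe: {safe_factor:.1f}X")
# VM level: 2.0X, DB level: 2.0X, safe: 1.0X
```

The ratio only describes the promise. Whether a 2X system actually suffers depends on how many of the VMs or databases are busy at the same moment, which is what the run queue reveals.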

CPU Over-Provisioning

As noted earlier, one commonly used approach to capturing the un-used CPU resources is to simply over-provision the system. Each database can be given access to more CPU resources, such that the total amount allocated exceeds the amount of CPU on the system. Whether this is done at the system (Virtual Machine) level or by over-provisioning across and within databases, the result is the same. Over-provisioning is what causes the “Noisy Neighbor” problem and can result in unstable or unresponsive systems.

Shares & Limits

Oracle offers the ability to configure “shares” for each Pluggable Database within a Container Database. Each instance of a Container Database is given an amount of vCPU to use by enabling DBRM and setting CPU_COUNT. The Pluggable Databases within that Container Database are then given “shares” of the vCPU available to the Container Database. Each Pluggable Database then receives the designated share of CPU resources, and the system is not over-subscribed.

Each Pluggable Database can also be assigned a limit on the CPU resources it can use, which prevents wide swings in database performance. Without a limit, a Pluggable Database is able to use the ENTIRE amount of unused CPU on the system, which can appear to users as a large variation in performance. Shares are expressed as relative values, whereas the utilization limit is expressed as a percentage, as shown in the example below:

| Pluggable Database | Shares | Shares % | Utilization Limit |
|---|---|---|---|
| PDB1 | 1 | 10% | 20% |
| PDB2 | 2 | 20% | 40% |
| PDB3 | 2 | 20% | 40% |
| PDB4 | 5 | 50% | 90% |
| Total | 10 | 100% | |

Share values are relative to the other Pluggable Databases in the Container Database. A PDB’s share percentage is its share value divided by the total of all shares in the Container Database. Utilization limits are expressed as percentages. Notice that the Utilization Limits total more than 100%; if the Container Database reaches its limit, the Shares values take precedence. Pluggable Databases receive their stated share of CPU and can exceed that share, up to their limit, if additional CPU is available. Shares and Limits can be difficult to manage in environments where Pluggable Databases are frequently added to and removed from Container Databases, because the share and limit values need to be recalculated each time a Pluggable Database is added or removed. Rather than using the shares & limits approach, we now recommend using the CPU min/max range feature.
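The share arithmetic from the table above, and the recalculation problem it creates, can be sketched in a few lines of Python. The helper name `share_percentages` and the added `PDB5` are hypothetical and used only for illustration.

```python
def share_percentages(shares):
    """Convert relative share values into each PDB's percentage of CDB CPU."""
    total = sum(shares.values())
    return {pdb: 100.0 * value / total for pdb, value in shares.items()}


shares = {"PDB1": 1, "PDB2": 2, "PDB3": 2, "PDB4": 5}
limits = {"PDB1": 20, "PDB2": 40, "PDB3": 40, "PDB4": 90}  # utilization limits, %

print(share_percentages(shares))
# {'PDB1': 10.0, 'PDB2': 20.0, 'PDB3': 20.0, 'PDB4': 50.0}

# Limits are not relative to each other, so they can add up to more than 100%.
print(sum(limits.values()))  # 190

# Adding a hypothetical PDB5 with 2 shares silently dilutes every existing PDB,
# which is why share values have to be revisited whenever PDBs come and go.
shares["PDB5"] = 2
print({pdb: round(pct, 1) for pdb, pct in share_percentages(shares).items()})
# {'PDB1': 8.3, 'PDB2': 16.7, 'PDB3': 16.7, 'PDB4': 41.7, 'PDB5': 16.7}
```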

Dynamic CPU Scaling in Oracle Database 19c (19.4)

Now that we have explained why Dynamic CPU Scaling is so important, let’s look at how this feature works. Using CPU min/max ranges, administrators can establish lower and upper bounds of vCPU available to each Pluggable Database. Each Pluggable Database receives a guaranteed minimum amount of vCPU but is also able to automatically scale up to a maximum level. This feature is controlled by 2 simple parameters within each Pluggable Database as follows:

◉ CPU_MIN_COUNT

◉ CPU_COUNT

CPU_MIN_COUNT is the minimum number of vCPUs the Pluggable Database Instance will receive. The total of CPU_MIN_COUNT for all Pluggable Database instances should not exceed the CPU_COUNT of the Container Database instance. When DBRM is enabled, and when CPU_MIN_COUNT has been set, the CPU_COUNT parameter defines the maximum number of vCPUs that can be used by a Pluggable Database Instance.
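The rule above, that the sum of CPU_MIN_COUNT across PDBs should not exceed the CDB instance’s CPU_COUNT, is easy to check before changing any parameters. In the database the two values are ordinary initialization parameters set inside each PDB, for example with `ALTER SYSTEM SET CPU_MIN_COUNT = 2;`. The Python sketch below only validates a proposed plan offline. `PdbCpuSettings` and `check_min_max` are hypothetical names. Two of the checks go beyond what the text states and are assumptions of this sketch: a PDB’s minimum should not exceed its maximum, and a PDB’s CPU_COUNT should not exceed the CDB’s.

```python
from dataclasses import dataclass


@dataclass
class PdbCpuSettings:
    name: str
    cpu_min_count: int  # guaranteed vCPUs (CPU_MIN_COUNT)
    cpu_count: int      # maximum vCPUs (CPU_COUNT)


def check_min_max(cdb_cpu_count, pdbs):
    """Return a list of problems with a proposed set of PDB min/max settings."""
    problems = []
    for pdb in pdbs:
        # Assumption: a minimum above the maximum makes no sense.
        if pdb.cpu_min_count > pdb.cpu_count:
            problems.append(f"{pdb.name}: CPU_MIN_COUNT exceeds CPU_COUNT")
        # Assumption: a PDB cannot use more vCPUs than its CDB instance has.
        if pdb.cpu_count > cdb_cpu_count:
            problems.append(f"{pdb.name}: CPU_COUNT exceeds the CDB CPU_COUNT")
    # Rule from the text: the guaranteed minimums must fit inside the CDB.
    total_min = sum(p.cpu_min_count for p in pdbs)
    if total_min > cdb_cpu_count:
        problems.append(
            f"sum of CPU_MIN_COUNT ({total_min}) exceeds CDB CPU_COUNT ({cdb_cpu_count})"
        )
    return problems


cdb_cpu_count = 16  # hypothetical CDB instance CPU_COUNT
plan = [
    PdbCpuSettings("PDB1", 2, 6),
    PdbCpuSettings("PDB2", 4, 12),
    PdbCpuSettings("PDB3", 4, 12),
]
print(check_min_max(cdb_cpu_count, plan))  # [] (minimums total 10 <= 16)

plan.append(PdbCpuSettings("PDB4", 8, 16))
print(check_min_max(cdb_cpu_count, plan))
# ['sum of CPU_MIN_COUNT (18) exceeds CDB CPU_COUNT (16)']
```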

By eliminating hard allocations to databases, whether through Virtual Machines or through individual database allocations within a single Container Database, all databases are able to share any excess capacity. All databases also share a single allocation of CPU headroom, while each one keeps a guaranteed minimum. In the example below, the overall capacity used is approximately half as much as shown in the previous graphic, yet all databases are able to use the capacity they require:

We recommend establishing a standardized ratio between CPU_MIN_COUNT and CPU_COUNT. For example, set CPU_COUNT equal to 3 times the value of CPU_MIN_COUNT. Each Pluggable Database’s vCPU usage would then run within a 3X range from MIN to MAX. Wide ranges may result in greater variability in performance and user satisfaction issues, while narrow ranges may leave excess (unused) compute capacity, resulting in higher costs. The exact CPU min/max range will depend on the database workloads that reside within a specific Container Database. We also recommend using symmetric clustered configurations (in an Oracle Real Application Clusters environment) with equally sized database nodes and equal CPU min/max settings on each instance. Asymmetric clusters (using database servers or Virtual Machines of different sizes) are acceptable if the degree of asymmetry is low (small variation in server or VM sizes).
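A fixed ratio turns each PDB’s minimum into a complete min/max pair. The Python sketch below applies the 3X example from the text. `plan_ranges` is a hypothetical helper. Capping the derived maximum at the CDB’s CPU_COUNT is an assumption of this sketch, based on the idea that a PDB cannot use more vCPUs than its container instance has.

```python
def plan_ranges(min_counts, cdb_cpu_count, ratio=3):
    """Map each PDB to a (CPU_MIN_COUNT, CPU_COUNT) pair using a fixed ratio.

    Assumption for this sketch: the derived maximum is capped at the CDB's
    CPU_COUNT, since a PDB cannot use more vCPUs than its container has.
    """
    if ratio < 1:
        raise ValueError("ratio must be at least 1")
    return {
        name: (cpu_min, min(cpu_min * ratio, cdb_cpu_count))
        for name, cpu_min in min_counts.items()
    }


print(plan_ranges({"PDB1": 2, "PDB2": 4, "PDB3": 8}, cdb_cpu_count=16))
# {'PDB1': (2, 6), 'PDB2': (4, 12), 'PDB3': (8, 16)}
```

Raising `ratio` widens every band at once, which trades more performance variability for less idle capacity. That is the same trade-off described above.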

Note that both CPU_MIN_COUNT and CPU_COUNT apply at the instance level for each Pluggable Database. If the Pluggable Database is part of an Oracle Real Application Clusters (RAC) configuration, the total CPU min/max range for the Pluggable Database is multiplied by the number of RAC instances. For example, a Pluggable Database with CPU_MIN_COUNT=2 belonging to a 2-node RAC cluster will have access to a minimum of 4 vCPUs in total. The Container Database level uses only CPU_COUNT (not CPU_MIN_COUNT), and this also applies to each RAC instance. As of Oracle Database 19c, CPU min/max ranges are not available at the Container Database level or for non-CDB databases. Each instance of a RAC cluster should ideally use a symmetrical configuration, with similarly sized compute nodes and the same values for these parameters at both the Pluggable Database and Container Database levels.
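Because both parameters are per instance, the cluster-wide range is simply the per-instance range multiplied by the number of RAC instances. This assumes the symmetric configuration recommended above, with the same settings on every instance. The helper name `rac_totals` and the CPU_COUNT value of 6 in the example are hypothetical.

```python
def rac_totals(cpu_min_count, cpu_count, instances):
    """Cluster-wide vCPU range for a PDB whose settings are per instance.

    Assumes a symmetric cluster: every instance uses the same values.
    """
    if instances < 1:
        raise ValueError("instances must be at least 1")
    return cpu_min_count * instances, cpu_count * instances


# The example from the text: CPU_MIN_COUNT=2 on a 2-node cluster.
print(rac_totals(cpu_min_count=2, cpu_count=6, instances=2))  # (4, 12)
```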

DBRM constantly monitors demand for CPU resources within each Pluggable Database, as well as the overall availability of CPU resources at the Container Database level. DBRM allows each Pluggable Database to automatically and immediately scale up to use more CPU resources if they are available in the Container Database, and it automatically and immediately scales CPU resources back down when demand subsides. Other Pluggable Databases residing within the same container may begin to consume their own CPU allocation, so any capacity above CPU_MIN_COUNT is not guaranteed and can be withdrawn for use by those other Pluggable Databases.
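To make the guarantees above concrete, here is a toy Python model of one CDB instance. It is not DBRM’s actual scheduling algorithm, which is internal to the database. The two-step policy and the round-robin hand-out of spare vCPUs are simplifications chosen for this sketch, and `allocate` is a hypothetical name. Each PDB first gets its demand up to CPU_MIN_COUNT, then spare vCPUs go to PDBs with unmet demand, never beyond their CPU_COUNT.

```python
def allocate(cdb_cpu_count, pdbs):
    """Toy model of min/max CPU scaling inside one CDB instance.

    pdbs maps name -> (cpu_min_count, cpu_count, demand), all in vCPUs.
    Step 1 grants each PDB its demand up to CPU_MIN_COUNT (the guarantee).
    Step 2 hands out any spare vCPUs one at a time, round-robin, to PDBs
    whose demand is still unmet, never exceeding their CPU_COUNT.
    """
    if sum(cpu_min for cpu_min, _, _ in pdbs.values()) > cdb_cpu_count:
        raise ValueError("sum of CPU_MIN_COUNT exceeds the CDB CPU_COUNT")
    grant = {name: min(demand, cpu_min) for name, (cpu_min, _, demand) in pdbs.items()}
    spare = cdb_cpu_count - sum(grant.values())
    while spare > 0:
        progressed = False
        for name, (_, cpu_max, demand) in pdbs.items():
            if spare > 0 and grant[name] < min(demand, cpu_max):
                grant[name] += 1
                spare -= 1
                progressed = True
        if not progressed:
            break  # no PDB wants more; the remaining vCPUs stay idle
    return grant


# PDB2 is quiet, so PDB1 and PDB3 scale up into the spare capacity.
print(allocate(16, {"PDB1": (2, 6, 6), "PDB2": (4, 12, 3), "PDB3": (4, 12, 10)}))
# {'PDB1': 6, 'PDB2': 3, 'PDB3': 7}

# PDB2 gets busy: PDB1 and PDB3 are scaled back, and PDB2 gets at least its minimum.
print(allocate(16, {"PDB1": (2, 6, 6), "PDB2": (4, 12, 12), "PDB3": (4, 12, 10)}))
# {'PDB1': 4, 'PDB2': 6, 'PDB3': 6}
```

The second run shows the point made in the text. The vCPUs that PDB1 and PDB3 held above their CPU_MIN_COUNT were not guaranteed, and they are withdrawn once PDB2’s demand rises.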
