The following procedure loads data from a third-party database into Oracle using SQL*Loader.

1. Export the data to a flat file using the third-party database's own export command or tool.

2. Create the table structure in the Oracle database using appropriate datatypes.

3. Write a control file describing how to interpret the flat file and which options to use for the load.

4. Run the SQL*Loader utility, specifying the control file as a command-line argument.

The following case study illustrates these steps.

CASE STUDY (Loading Data from MS-ACCESS to Oracle)

Suppose you have a table named EMP in MS Access, running under Windows, with the following structure:

EMPNO INTEGER

NAME TEXT(50)

SAL CURRENCY

JDATE DATE

The table contains about 10,000 rows, and you want to load them into an Oracle table. The Oracle database runs on Linux.

Solution

Step 1

Start MS Access and export the table to a comma-delimited flat file (popularly known as CSV) using the File/Save As menu. Name the file emp.csv. The dates in the file must be in the same format that the control file in Step 3 expects (mm/dd/yyyy).

Next, transfer this file to the Linux server using FTP:

a. Open a Command Prompt in Windows.

b. At the command prompt, type ftp followed by the IP address of the server running Oracle.

FTP then prompts for a username and password to connect to the Linux server. Supply a valid username and password for the oracle user on the Linux server.

For example:

```
C:\> ftp 200.200.100.111
Name: oracle
Password: oracle
FTP>
```

c. Use the put command to transfer the file from the Windows machine to the Linux machine:

```
FTP>put
Local file: C:\emp.csv
Remote file: /u01/oracle/emp.csv
File transferred in 0.29 Seconds
FTP>
```

d. After the file is transferred, quit FTP by typing the bye command:

```
FTP>bye
Good-Bye
```

Step 2

Now log in to the Linux machine and create a table in Oracle with the same structure as the MS Access table, using appropriate Oracle datatypes. For example:

```
$ sqlplus scott/tiger

SQL> CREATE TABLE emp (empno number(5),
                       name varchar2(50),
                       sal number(10,2),
                       jdate date);
```

Step 3

After creating the table, write a control file describing the actions SQL*Loader should perform. You can use any text editor. Here is the control file for our case study:

```
$ vi emp.ctl
```

```
1 LOAD DATA
2 INFILE '/u01/oracle/emp.csv'
3 BADFILE '/u01/oracle/emp.bad'
4 DISCARDFILE '/u01/oracle/emp.dsc'
5 INSERT INTO TABLE emp
6 FIELDS TERMINATED BY "," OPTIONALLY ENCLOSED BY '"' TRAILING NULLCOLS
7 (empno,name,sal,jdate date 'mm/dd/yyyy')
```

Notes (do not type the line numbers; they are for explanation only):

1. The LOAD DATA statement is required at the beginning of the control file.

2. The INFILE option specifies the location of the input data file.

3. Specifying BADFILE is optional. If you specify it, records rejected during the load (for example, because of a format error or a constraint violation) are written to this file.

4. Specifying DISCARDFILE is optional. If you specify it, records that do not meet a WHEN condition are written to this file.

5. You can use any of the following loading options:

i. INSERT: loads rows only if the target table is empty; if the table already contains rows, SQL*Loader stops with an error. INSERT is the default.

ii. APPEND: loads rows whether or not the target table already contains rows; existing rows are kept.

iii. REPLACE: first deletes all rows in the existing table, then loads the new rows.

iv. TRUNCATE: first truncates the table, then loads the new rows.

6. This line describes how the fields are separated in the input file. Because our fields are separated by commas, we specify "," as the field terminator. You can replace it with whatever character your file uses; popular choices are semicolon ";", colon ":" and pipe "|". OPTIONALLY ENCLOSED BY '"' allows a field to be wrapped in double quotes, so a value such as "Smith, John" can contain a comma. TRAILING NULLCOLS tells SQL*Loader to treat any columns missing at the end of a record as null; without it, a record that ends before all the listed columns have been found is rejected and written to the bad file.

7. This line lists the columns of the target table. Note how the format of the date column is specified.
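To make lines 6 and 7 concrete, here is a minimal Python sketch that approximates how one line of emp.csv is split and typed. It is not how SQL*Loader works internally: Python's csv module stands in for the FIELDS clause, and parse_record and the sample values are hypothetical.

```python
import csv
from datetime import datetime

# Column order from line 7 of emp.ctl: (empno, name, sal, jdate date 'mm/dd/yyyy')
COLUMNS = ["empno", "name", "sal", "jdate"]


def parse_record(line, trailing_nullcols=True):
    """Approximate how lines 6 and 7 of emp.ctl interpret one record."""
    # FIELDS TERMINATED BY "," OPTIONALLY ENCLOSED BY '"'
    fields = next(csv.reader([line], delimiter=",", quotechar='"'))
    missing = len(COLUMNS) - len(fields)
    if missing > 0:
        if not trailing_nullcols:
            # Without TRAILING NULLCOLS the record would go to the bad file.
            raise ValueError("record ends before all columns were found")
        fields += [""] * missing  # TRAILING NULLCOLS: missing columns are null
    # Empty fields are loaded as NULL (None here).
    row = {col: (val if val != "" else None) for col, val in zip(COLUMNS, fields)}
    if row["jdate"] is not None:
        # The SQL*Loader mask 'mm/dd/yyyy' corresponds to '%m/%d/%Y' in Python.
        row["jdate"] = datetime.strptime(row["jdate"], "%m/%d/%Y").date()
    return row


print(parse_record('7782,"Clark, A",2572.50,06/09/1981'))
print(parse_record("7839,KING"))
```

The quoted name keeps its embedded comma, and the short second record gets null sal and jdate only because trailing_nullcols is on, which is the behaviour line 6 asks for.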

Step 4

Save the control file, then run the SQL*Loader utility with the following command:

```
$ sqlldr userid=scott/tiger control=emp.ctl log=emp.log
```

When the command runs, SQL*Loader displays output describing how many rows it has loaded.

The LOG option of sqlldr specifies where the log file for this SQL*Loader session is created. The log file records everything SQL*Loader did: how many rows were loaded, how many were rejected, how long the load took, and so on. Check this file for any errors encountered while running SQL*Loader.
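If you run loads from a script, you may want to pull the row counts out of the log file automatically. The sketch below assumes the per-table summary in the log consists of a line like `Table EMP:` followed by lines such as `N Rows successfully loaded.` and `N Rows not loaded due to data errors.`; check that wording against your own emp.log before relying on it. summarize_log is a hypothetical helper.

```python
import re

# Assumed layout of the per-table summary in a SQL*Loader log file.
TABLE_LINE = re.compile(r"^Table\s+(\S+):\s*$")
LOADED_LINE = re.compile(r"^\s*(\d+)\s+Rows?\s+successfully loaded\.")
ERROR_LINE = re.compile(r"^\s*(\d+)\s+Rows?\s+not loaded due to data errors\.")


def summarize_log(text):
    """Return {table: {"loaded": n, "errors": m}} parsed from log text."""
    summary = {}
    table = None
    for line in text.splitlines():
        header = TABLE_LINE.match(line)
        if header:
            table = header.group(1)
            summary[table] = {"loaded": 0, "errors": 0}
            continue
        if table is None:
            continue  # ignore everything before the first summary block
        loaded = LOADED_LINE.match(line)
        errors = ERROR_LINE.match(line)
        if loaded:
            summary[table]["loaded"] = int(loaded.group(1))
        elif errors:
            summary[table]["errors"] = int(errors.group(1))
    return summary
```

For example, `summarize_log(open("emp.log").read())` returns one entry per table, so a script can stop or alert when the error count is not zero.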

Conventional Path Load and Direct Path Load

SQL*Loader can load data into an Oracle database using either the conventional path method or the direct path method. You choose the method with the DIRECT command-line option: DIRECT=TRUE selects direct path loading, while omitting the option or specifying DIRECT=FALSE selects conventional path loading.
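When sqlldr is started from a script, the DIRECT choice becomes just one more argument. This is a minimal Python sketch: sqlldr_args and run_sqlldr are hypothetical helpers, and run_sqlldr assumes sqlldr is on the PATH.

```python
import subprocess


def sqlldr_args(userid, control, log=None, direct=False):
    """Build the sqlldr argument list used in this article's examples."""
    args = ["sqlldr", f"userid={userid}", f"control={control}"]
    if log:
        args.append(f"log={log}")
    if direct:
        args.append("direct=true")  # direct path load
    # When direct is False the option is omitted, so the conventional path is used.
    return args


def run_sqlldr(args):
    """Run sqlldr (assumed to be on PATH) and return its exit status."""
    return subprocess.run(args).returncode
```

Passing a list to subprocess.run avoids shell quoting problems. Keep in mind that a password given in userid= on the command line may be visible to other users in process listings.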

Conventional Path

Conventional path load (the default) uses the SQL INSERT statement and a bind array buffer to load data into database tables.

When SQL*Loader performs a conventional path load, it competes equally with all other processes for buffer resources. This can slow the load significantly. Extra overhead is added as SQL statements are generated, passed to Oracle, and executed.

The Oracle database looks for partially filled blocks and attempts to fill them on each insert. Although appropriate during normal use, this can slow bulk loads dramatically.

Direct Path

In a direct path load, SQL*Loader does not use SQL INSERT statements. Instead, it formats data blocks and writes them directly to the datafiles, into fresh blocks above the table's high-water mark; it does not look for free space in blocks below the high-water mark. A direct path load is fast because:

◉ Partial blocks are not used, so no reads are needed to find them, and fewer writes are performed.

◉ SQL*Loader need not execute any SQL INSERT statements; therefore, the processing load on the Oracle database is reduced.

◉ A direct path load calls on Oracle to lock tables and indexes at the start of the load and releases them when the load is finished. A conventional path load calls Oracle once for each array of rows to process a SQL INSERT statement.

◉ A direct path load uses multiblock asynchronous I/O for writes to the database files.

◉ During a direct path load, processes perform their own write I/O, instead of using Oracle's buffer cache. This minimizes contention with other Oracle users.

Restrictions on Using Direct Path Loads

The following conditions must be satisfied for you to use the direct path load method:

◉ Tables are not clustered.

◉ Tables to be loaded do not have any active transactions pending.

In addition, the following are not supported with a direct path load:

◉ Loading a parent table together with a child table.

◉ Loading BFILE columns.

Loading data from Fixed Width files into Oracle Database

CASE STUDY (Loading Data from Fixed Length file into Oracle)

Suppose we have a fixed-length file containing employee data, as shown below, and we want to load this data into an Oracle table.

```
7782 CLARK      MANAGER   7839  2572.50          10
7839 KING       PRESIDENT       5500.00          10
7934 MILLER     CLERK     7782   920.00          10
7566 JONES      MANAGER   7839  3123.75          20
7499 ALLEN      SALESMAN  7698  1600.00   300.00 30
7654 MARTIN     SALESMAN  7698  1312.50  1400.00 30
7658 CHAN       ANALYST   7566  3450.00          20
7654 MARTIN     SALESMAN  7698  1312.50  1400.00 30
```

SOLUTION:

Steps:

1. First, open the file in a text editor and work out the position of each field. In our file, the employee number occupies positions 1 to 4, the employee name positions 6 to 15, and the job name positions 17 to 25. The other columns are located the same way: manager number 27 to 30, salary 32 to 39, commission 41 to 48, and department number 50 to 51.

2. Create a table in Oracle. The table can have any name, but its columns must match the fields in the fixed-length file. In our case, use the following command:

```
SQL> CREATE TABLE emp (empno NUMBER(5),
                       name VARCHAR2(20),
                       job VARCHAR2(10),
                       mgr NUMBER(5),
                       sal NUMBER(10,2),
                       comm NUMBER(10,2),
                       deptno NUMBER(3) );
```

3. After creating the table, write a control file using any text editor:

```
$ vi empfix.ctl
```

```
1) LOAD DATA
2) INFILE '/u01/oracle/fix.dat'
3) INTO TABLE emp
4) (empno POSITION(01:04) INTEGER EXTERNAL,
   name POSITION(06:15) CHAR,
   job POSITION(17:25) CHAR,
   mgr POSITION(27:30) INTEGER EXTERNAL,
   sal POSITION(32:39) DECIMAL EXTERNAL,
   comm POSITION(41:48) DECIMAL EXTERNAL,
5) deptno POSITION(50:51) INTEGER EXTERNAL)
```

Notes (do not type the line numbers; they are for explanation only):

1. The LOAD DATA statement is required at the beginning of the control file.

2. The name of the file containing data follows the INFILE parameter.

3. The INTO TABLE statement is required to identify the table to be loaded into.

4. Lines 4 and 5 give each column name and the location in the data file of the data to be loaded into that column. empno, name, job, and so on are names of columns in table emp. The datatypes (INTEGER EXTERNAL, CHAR, DECIMAL EXTERNAL) describe the data fields in the file, not the corresponding columns in the emp table.

5. Note that the set of column specifications is enclosed in parentheses.

4. After saving the control file, start SQL*Loader with the following command:

```
$ sqlldr userid=scott/tiger control=empfix.ctl log=empfix.log direct=true
```

Because direct=true is specified, this load uses the direct path method. SQL*Loader then displays output describing how many rows it has loaded.
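The POSITION clauses use 1-based, inclusive character positions. This minimal Python sketch slices a record the same way, to show how POSITION(06:15) maps to a string slice. parse_fixed is a hypothetical helper, and its whitespace handling (a plain strip, with a blank field becoming None) is a simplification of SQL*Loader's own rules.

```python
from decimal import Decimal

# (column, start, end, converter) from empfix.ctl; positions are 1-based and inclusive.
LAYOUT = [
    ("empno", 1, 4, int),        # INTEGER EXTERNAL
    ("name", 6, 15, str),        # CHAR
    ("job", 17, 25, str),        # CHAR
    ("mgr", 27, 30, int),        # INTEGER EXTERNAL
    ("sal", 32, 39, Decimal),    # DECIMAL EXTERNAL
    ("comm", 41, 48, Decimal),   # DECIMAL EXTERNAL
    ("deptno", 50, 51, int),     # INTEGER EXTERNAL
]


def parse_fixed(line):
    """Slice one record the way the POSITION(start:end) clauses address it."""
    row = {}
    for column, start, end, convert in LAYOUT:
        # POSITION(06:15) covers characters 6..15, i.e. the Python slice [5:15].
        text = line[start - 1:end].strip()  # simplified whitespace handling
        row[column] = convert(text) if text else None  # blank field -> None (NULL)
    return row


print(parse_fixed("7499 ALLEN      SALESMAN  7698  1600.00   300.00 30"))
```

Running the same function on the KING record shows why the blank mgr and comm positions matter: they come back as None rather than shifting the remaining fields.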

Loading Data into Multiple Tables using WHEN condition

You can load data into multiple tables in the same SQL*Loader session. You can also use a WHEN condition to load only the rows that meet a particular condition (only equal "=" and not-equal "<>" or "!=" comparisons are allowed).

For example, suppose we have the following fixed-length file:

```
7782 CLARK      MANAGER   7839  2572.50          10
7839 KING       PRESIDENT       5500.00          10
7934 MILLER     CLERK     7782   920.00          10
7566 JONES      MANAGER   7839  3123.75          20
7499 ALLEN      SALESMAN  7698  1600.00   300.00 30
7654 MARTIN     SALESMAN  7698  1312.50  1400.00 30
7658 CHAN       ANALYST   7566  3450.00          20
7654 MARTIN     SALESMAN  7698  1312.50  1400.00 30
```

We want to load the employees whose deptno is 10 into the emp1 table and all other employees into the emp2 table. First create the tables emp1 and emp2 with appropriate columns and datatypes, then write the following control file:

```
$ vi emp_multi.ctl
```

```
Load Data
infile '/u01/oracle/empfix.dat'
append into table scott.emp1
WHEN (deptno='10')
(empno POSITION(01:04) INTEGER EXTERNAL,
 name POSITION(06:15) CHAR,
 job POSITION(17:25) CHAR,
 mgr POSITION(27:30) INTEGER EXTERNAL,
 sal POSITION(32:39) DECIMAL EXTERNAL,
 comm POSITION(41:48) DECIMAL EXTERNAL,
 deptno POSITION(50:51) INTEGER EXTERNAL)
INTO TABLE scott.emp2
WHEN (deptno<>'10')
(empno POSITION(01:04) INTEGER EXTERNAL,
 name POSITION(06:15) CHAR,
 job POSITION(17:25) CHAR,
 mgr POSITION(27:30) INTEGER EXTERNAL,
 sal POSITION(32:39) DECIMAL EXTERNAL,
 comm POSITION(41:48) DECIMAL EXTERNAL,
 deptno POSITION(50:51) INTEGER EXTERNAL)
```

After saving emp_multi.ctl, run sqlldr:

```
$ sqlldr userid=scott/tiger control=emp_multi.ctl
```
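Each INTO TABLE ... WHEN clause is tested against every record on its own, so a record can go to more than one table, and a record that matches no clause is discarded (written to a discard file if one is specified). This minimal Python sketch mimics that routing for emp_multi.ctl; route, deptno and CLAUSES are hypothetical names, and the comparison is done on the raw two-character field.

```python
def deptno(record):
    """Field deptno is POSITION(50:51): characters 50..51, 1-based."""
    return record[49:51]


# One (table, condition) pair per INTO TABLE ... WHEN clause in emp_multi.ctl.
CLAUSES = [
    ("emp1", lambda record: deptno(record) == "10"),  # WHEN (deptno='10')
    ("emp2", lambda record: deptno(record) != "10"),  # WHEN (deptno<>'10')
]


def route(records, clauses=CLAUSES):
    """Test every record against every clause; unmatched records are discarded."""
    loaded = {table: [] for table, _ in clauses}
    discarded = []
    for record in records:
        matched = False
        for table, condition in clauses:
            if condition(record):  # each clause is evaluated independently
                loaded[table].append(record)
                matched = True
        if not matched:
            discarded.append(record)  # would go to the discard file
    return loaded, discarded
```

With the two complementary conditions above nothing is discarded; drop the second clause and every record outside department 10 ends up in the discarded list instead.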

Migrate Data From MySQL to Oracle

How to migrate data from MySQL to Oracle using the SQL*Loader tool

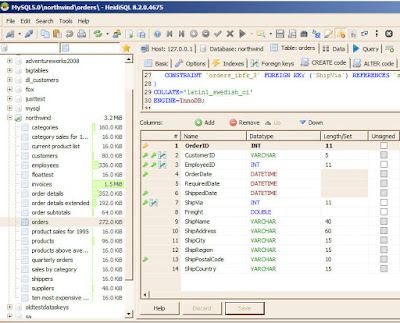

For example, suppose we have a table named "Orders" in MySQL, running on Windows, with the following structure:

The table has about 1,000 rows, which we need to load into a table in an Oracle database running on Linux.

Here are the details of both machines:

| | Source | Target |
|---|---|---|
| Database | MySQL | Oracle |
| Table name | Orders | Orders (doesn't exist yet) |
| O/S | Windows | Linux |
| IP address | 192.168.50.1 | 192.168.50.132 |

To migrate the MySQL table, do the following:

Step 1

First, export the rows from the MySQL table to a CSV or other delimited file. You can use HeidiSQL or any similar tool.

To export the rows to a CSV file using HeidiSQL:

Open HeidiSQL on the source PC.

Log in to the MySQL server with your username and password.

In the left panel, select the MySQL database in which the "Orders" table resides.

Expand the database node and select the Orders table in the left panel.

Then, in the right panel, click the "Data" tab to view the data in the "Orders" table, as shown in the picture below.

Right-click any column in the data grid and select the "Export Grid Rows" option.

In the Export Grid Rows dialog, enter "test.csv" as the CSV file name, select the "Complete Rows" option, clear the "Include Column Names" checkbox, and click OK.
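If a GUI tool isn't available, a similar file can be produced from a script. This minimal Python sketch is not part of the original procedure: it assumes you already have an iterable of row tuples, for example a cursor from a MySQL DB-API driver (connection code not shown), and export_rows is a hypothetical helper. Like the HeidiSQL export above, it writes no column-name header.

```python
import csv
import io


def export_rows(rows, out):
    """Write rows (e.g. from a DB-API cursor) as CSV without a header line."""
    # Quote only when needed, which fits OPTIONALLY ENCLOSED BY '"';
    # use LF line endings because the file will be loaded on Linux.
    writer = csv.writer(out, quoting=csv.QUOTE_MINIMAL, lineterminator="\n")
    count = 0
    for row in rows:
        writer.writerow(row)  # csv writes None as an empty field
        count += 1
    return count


buffer = io.StringIO()
export_rows([(1, "Smith, J", None), (2, "Lee", 9.5)], buffer)
print(buffer.getvalue())
```

A value containing a comma is wrapped in double quotes and a NULL becomes an empty field, which a control file using FIELDS TERMINATED BY "," OPTIONALLY ENCLOSED BY '"' TRAILING NULLCOLS can read back.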

How to Migrate Data from MS SQL Server to Oracle using the SQL*Loader Utility

Here is a step-by-step example of copying data from an MS SQL Server table to Oracle using Oracle's SQL*Loader utility.

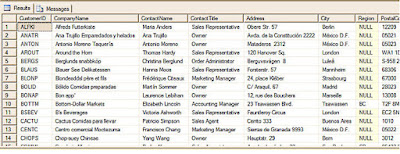

Suppose we have a table named "Customers" in the Northwind sample database in MS SQL Server. SQL Server runs on Windows, and the Oracle database runs on Linux.

Here is the structure of the Customers table in MS SQL Server:

| Column Name | Data Type |
|---|---|
| CustomerID | varchar(5) |
| CompanyName | varchar(40) |
| ContactName | varchar(30) |
| ContactTitle | varchar(30) |
| Address | varchar(60) |
| City | varchar(15) |
| Region | varchar(15) |
| PostalCode | varchar(10) |
| Country | varchar(15) |
| Phone | varchar(24) |
| Fax | varchar(24) |

The table has 91 rows, all of which we need to migrate to a table in Oracle.

In broad terms, we first export the data from this table to a tab-delimited text file and copy that file to the Linux machine running Oracle. Next, we create a table in the Oracle database with the same structure as in MS SQL Server, using appropriate datatypes and widths. Finally, we write a SQL*Loader control file and run the SQL*Loader command.
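The Oracle-side pieces of that plan can be derived mechanically from the column list above. This Python sketch works under stated assumptions: every varchar(n) column becomes VARCHAR2(n), the data file path /u01/oracle/customers.txt and the helper names oracle_ddl and control_file are hypothetical, and X'09' (a tab written in hexadecimal) is used as the field terminator to match the tab-delimited export.

```python
# Column names and varchar widths from the Customers table above.
CUSTOMERS = [
    ("CustomerID", 5), ("CompanyName", 40), ("ContactName", 30),
    ("ContactTitle", 30), ("Address", 60), ("City", 15), ("Region", 15),
    ("PostalCode", 10), ("Country", 15), ("Phone", 24), ("Fax", 24),
]


def oracle_ddl(table, columns):
    """CREATE TABLE statement mapping each varchar(n) to VARCHAR2(n)."""
    body = ",\n".join(f"  {name} VARCHAR2({width})" for name, width in columns)
    return f"CREATE TABLE {table} (\n{body}\n);"


def control_file(table, datafile, columns):
    """SQL*Loader control file for a tab-delimited data file."""
    names = ", ".join(name for name, _ in columns)
    return (
        "LOAD DATA\n"
        f"INFILE '{datafile}'\n"
        f"INTO TABLE {table}\n"
        "FIELDS TERMINATED BY X'09' TRAILING NULLCOLS\n"
        f"({names})\n"
    )


print(oracle_ddl("customers", CUSTOMERS))
print(control_file("customers", "/u01/oracle/customers.txt", CUSTOMERS))
```

Generating both from one column list keeps the table definition and the control file in step, which matters because SQL*Loader maps fields to the listed columns purely by order.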

So let's begin.

Step 1.

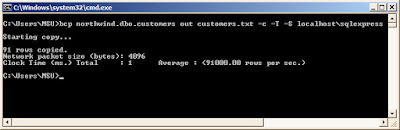

On the machine running MS SQL Server, create a tab-delimited file. You can use SQL Server's bcp utility or a DTS package; in our case we use bcp.

MS SQL Server's Bulk Copy Program (bcp) imports and exports large volumes of data to and from an MS SQL Server instance. It can export individual tables as well as the results of custom queries.

To export the data with bcp, open a command prompt: click the Windows Start button, type cmd in the Run box, and press Enter.

At the command prompt, type the bcp command with the following arguments:

```
bcp northwind.dbo.customers out customers.txt -c -T -S localhost\sqlexpress
```

The arguments used are:

◈ out: export data from the table to the named file (customers.txt)

◈ -c: export in character (ASCII) format, with a tab as the field delimiter and a carriage return/line feed as the line terminator

◈ -T: use a trusted connection, i.e. Windows authentication

◈ -S: the server to connect to; in our case, the local SQL Express instance
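To see how the -c layout maps onto rows and fields, here is a minimal Python sketch that splits text in that format. read_bcp_char_rows is a hypothetical helper; treating empty fields as None mirrors how SQL*Loader loads empty delimited fields as NULL. Note the CR/LF: if the file reaches Linux with Windows line endings intact, SQL*Loader may keep the stray carriage return at the end of the last field, so check how your transfer handles line endings.

```python
def read_bcp_char_rows(text, ncols):
    """Split bcp -c style text: tab between fields, CR/LF after each row."""
    if text.endswith("\r\n"):
        text = text[:-2]  # drop the terminator after the last row
    if not text:
        return []
    rows = []
    for line in text.split("\r\n"):
        fields = line.split("\t")
        # Pad short rows, similar in spirit to TRAILING NULLCOLS.
        fields += [""] * (ncols - len(fields))
        # Treat empty fields as NULL (None).
        rows.append([field or None for field in fields])
    return rows
```

For example, `read_bcp_char_rows(open("customers.txt", newline="").read(), 11)` would return one 11-item list per customer, with None wherever a value such as Region or Fax is empty.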

When you run the command, bcp produces output like that shown in the picture below.

As you can see from the screenshot, bcp has copied all the data into the tab-delimited file customers.txt.

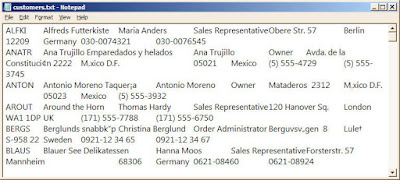

You can view the contents of the file in Notepad. Here is a screenshot of the newly created tab-delimited file.

Next step: transfer the file to the Linux machine running Oracle.
