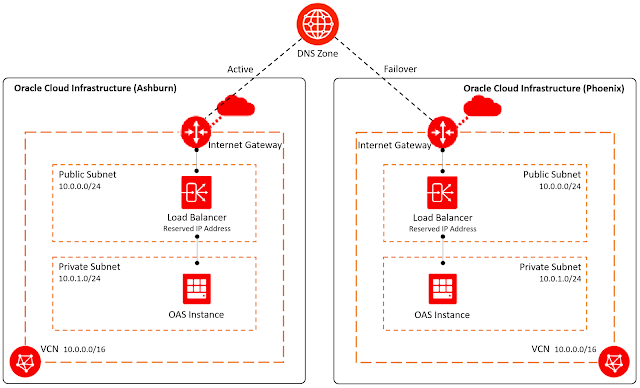

1. Create Primary Oracle Analytics Server Instance in one Region e.g. Ashburn

2. Create Disaster Recovery Oracle Analytics Server Instance in another Region e.g. Phoenix

3. Configure both Oracle Analytics Server Instances to use the same external connections, such as the SMTP Server, Data Sources, and the Database Tables that are involved in the Security Configuration (BISQLGroupProvider, Act As Configuration, Data Level Security, etc.).

4. Configure both Oracle Analytics Server Instances to share the same Security Rules so that they connect to the same on-premises or Cloud Data Sources.

5. Both Oracle Analytics Server Instances run independently of each other, which means they do not share the RCU Database Schemas to sync the connections, Catalog Objects (Answers Reports/DV Projects), etc.

6. The same set of Users and Groups can be accessed within the Oracle Analytics Cloud Service Console, Users and Roles section, as both Services run on the same Oracle Identity Cloud Service (IDCS) for Identity Management.

7. Snapshot Create and Restore is the method available to synchronize the content between the two Oracle Analytics Server Instances.

8. Snapshot creation and restore can be automated using WLST scripting, or using REST APIs in future releases.

9. Creating a Snapshot on the Primary Oracle Analytics Server Instance and restoring it on the Disaster Recovery Oracle Analytics Server Instance does not sync Data File content or BI Publisher JDBC Connections; these may need to be moved manually across Instances.

10. At this moment there is no automatic Snapshot Create and Snapshot Restore mechanism. This task must be performed manually and periodically.

11. Due to the periodic manual tasks involved in restoring the Content from Primary Oracle Analytics Server Instance to Disaster Recovery Oracle Analytics Server Instance, Live Content Sync is not possible between the Instances. We might need to live with delayed content sync.

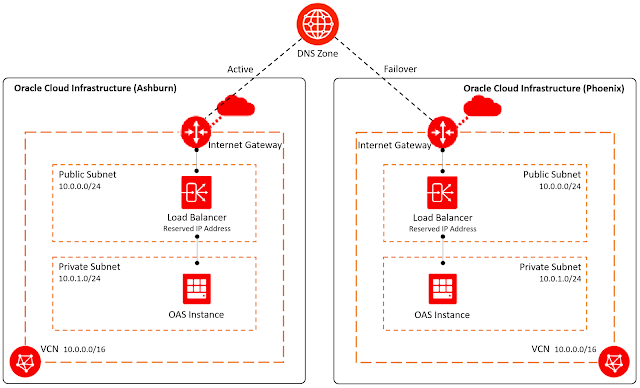

12. Create a Load Balancer in each Region and decide on a single DNS name, e.g. oas.oracleceal.com, for both Load Balancers.

13. Get the SSL/TLS Certificate for the DNS Name and install the same certificate on the Load Balancer in each Region.

14. Use Oracle Cloud Infrastructure Traffic Management Steering Policy (Failover) and configure Ashburn Load Balancer as the Primary and Phoenix Load Balancer as the Disaster Recovery Load Balancer.

15. The Oracle Cloud Infrastructure Traffic Management Steering Policy (Failover) maps the Primary Load Balancer IP Address to the DNS Name using an “A” Record in Oracle Cloud Infrastructure Zones.

16. When the Primary Load Balancer or Oracle Analytics Server is not reachable based on the Health Check Policy defined, the Oracle Cloud Infrastructure Traffic Management Steering Policy (Failover) removes the Primary Load Balancer IP Address from the “A” Record and maps the Disaster Recovery Load Balancer IP Address to the DNS Name in the “A” Record of the Oracle Cloud Infrastructure Zones.

17. With this configuration we get Disaster Recovery while the end user always uses the same URL.

18. When the Primary Load Balancer or Oracle Analytics Server becomes reachable again based on the Health Check Policy defined, the Oracle Cloud Infrastructure Traffic Management Steering Policy (Failover) falls back to the Primary Load Balancer, i.e. it removes the Secondary Load Balancer IP Address from the “A” Record and maps the Primary Load Balancer IP Address to the DNS Name in the “A” Record of the Oracle Cloud Infrastructure Zones.

19. Before the Oracle Cloud Infrastructure Traffic Management Steering Policy (Failover) falls back to the Primary, we need to take the latest snapshot of the Secondary Oracle Analytics Server and restore it on the Primary Oracle Analytics Server.

Oracle Analytics Server Disaster Recovery Architecture and Configuration across Different Oracle Cloud Infrastructure (OCI) Regions

Oracle Analytics Server Instance configured with Load Balancer in the Front End.

In this Blog, we have created Oracle Analytics Server Instance in a Private Subnet and Load Balancer in a Public Subnet of the VCN.

Demonstrating Disaster Recovery Architecture using Oracle Cloud Infrastructure Traffic Management Steering Policy (Failover)

You can also create Oracle Analytics Server Instance and Load Balancer in the same Public Subnet of the VCN.

Oracle Cloud Infrastructure (OCI) Traffic Management Steering Policy

For Disaster Recovery we use Failover Policy Type

◉ Failover: Use Oracle Cloud Infrastructure Health Checks to determine the health of answers. If the primary answer is determined to be unhealthy, DNS traffic is automatically steered to the secondary answer.
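The selection rule described above can be pictured with a small Python sketch. This is only an illustration of the idea, not how Oracle Cloud Infrastructure implements it: the `AnswerPool` class, the `select_answer` function and the numeric priorities are hypothetical names introduced here, and the behavior when every pool is unhealthy (returning `None`) is an assumption of the sketch that may differ from the real service.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class AnswerPool:
    """Hypothetical model of one answer pool in a Failover policy."""
    name: str       # e.g. "Ashburn"
    address: str    # Load Balancer public IP served in the "A" record
    priority: int   # lower number = preferred pool
    healthy: bool   # latest health check result


def select_answer(pools: Sequence[AnswerPool]) -> Optional[AnswerPool]:
    """Return the healthy pool with the best priority.

    Returns None when no pool is healthy (an assumption of this sketch).
    """
    healthy = [pool for pool in pools if pool.healthy]
    if not healthy:
        return None
    return min(healthy, key=lambda pool: pool.priority)
```

With Ashburn at priority 1 and Phoenix at priority 2, `select_answer` returns Ashburn while its health check passes and Phoenix once Ashburn is marked unhealthy.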

Subscribe for Secondary Oracle Cloud Infrastructure (OCI) Region (Phoenix)

We have two regions, Ashburn and Phoenix, subscribed for the Oracle Cloud Infrastructure Tenancy.

On the Oracle Cloud Infrastructure (OCI) Console of Home Region (Ashburn)

- Create Compartment

- Create a Virtual Cloud Network (VCN)

- Access Control

- Route Rules

- Create Oracle Analytics Server Instance in the Private Subnet of the VCN

- Generate SSL Certificate for Load Balancer for the desired DNS Name

- Create a Load Balancer with Reserved Public IP Address on the Public Subnet of the VCN

- Configure the Load Balancer to the backend Oracle Analytics Server

- Get a Domain from Domain Providers like GoDaddy based on the DNS Name

- Create Public Zone

- Create Oracle Cloud Infrastructure Traffic Management Steering Policy

On the Oracle Cloud Infrastructure Console Secondary Region (Phoenix)

- Use Existing Compartment created in Home Region Ashburn

- Create a Virtual Cloud Network (VCN)

- Access Control

- Route Rules

- Create Oracle Analytics Server Instance in the Private Subnet of the VCN

- Use the already generated same SSL Certificate for Load Balancer

- Create a Load Balancer with Reserved Public IP Address on the Public Subnet of the VCN

- Configure the Load Balancer to the backend Oracle Analytics Server

- Use Existing Public Zone created in Home Region, no steps required here

- Use Existing Oracle Cloud Infrastructure Traffic Management Steering Policy, no steps required here

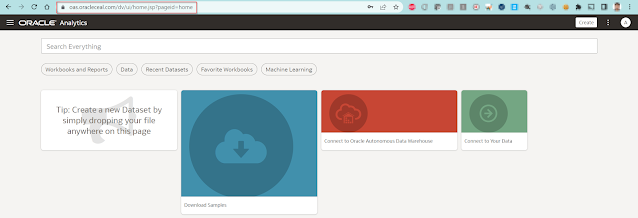

Let us check that we have an Oracle Analytics Server fronted by a Load Balancer, and that the Load Balancer is configured with the same SSL Certificate and the same Hostname as the DNS Name, e.g. oas.oracleceal.com, in both Oracle Cloud Infrastructure Regions, i.e. Ashburn and Phoenix.

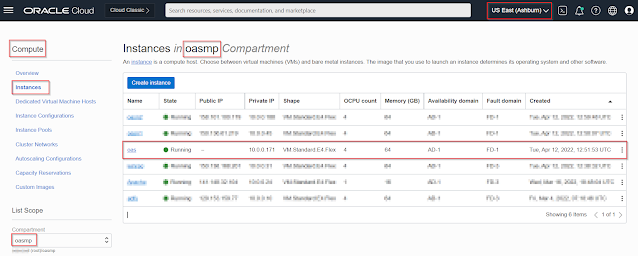

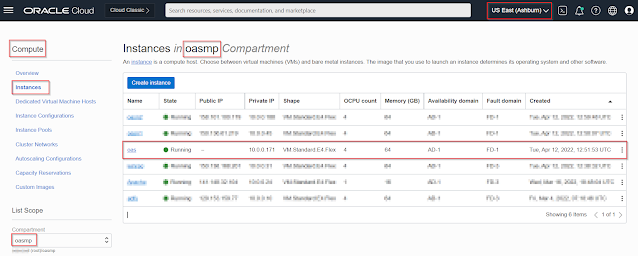

Oracle Analytics Server Compute Instance

Ashburn

Phoenix


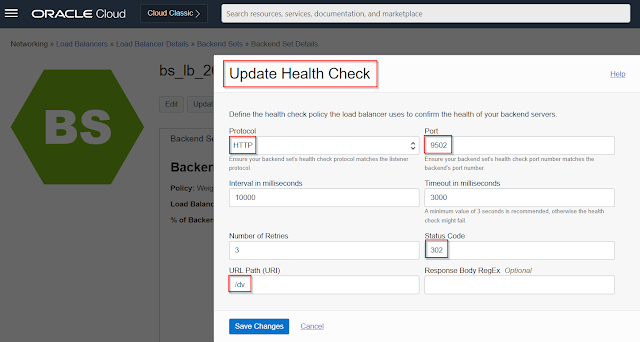

Load Balancer Backend Set Health Check

How to find the Status Code

From any Bastion Server or Windows Server (on the Public Subnet) that can access the Oracle Analytics Server (on the Private Subnet), run the command below:

curl -k -vvv http://<Oracle Analytics Server Private IP Address>:<Port No>/dv

e.g. curl -k -vvv http://10.0.1.253:9502/dv
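If curl is not available on the Bastion Server, the same status code can be read with a few lines of Python. This is a minimal sketch using only the standard library; the function name `fetch_status` is introduced here for illustration, and it assumes plain HTTP on the private address as in the curl example. It does not follow redirects, so it reports the code that the first response returns.

```python
import http.client


def fetch_status(host: str, port: int, path: str = "/dv", timeout: float = 10.0) -> int:
    """Send one plain-HTTP GET and return the status code of the first response."""
    conn = http.client.HTTPConnection(host, port, timeout=timeout)
    try:
        conn.request("GET", path)
        # http.client never follows redirects, so a 3xx is returned as-is.
        return conn.getresponse().status
    finally:
        conn.close()
```

For the example above this would be called as `fetch_status("10.0.1.253", 9502)`.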

Load Balancer URLs for Oracle Analytics Server (Primary and Disaster Recovery)

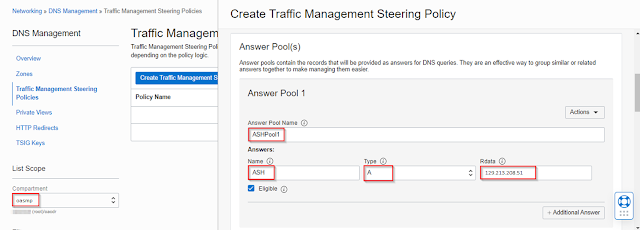

Ashburn: https://129.213.208.51/dv

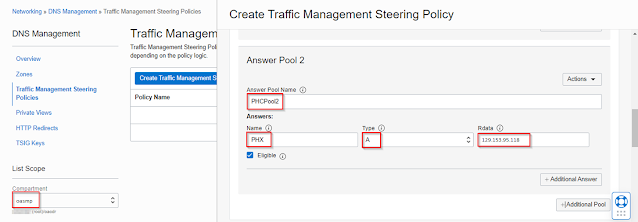

Phoenix: https://129.153.95.118/dv

Oracle Cloud Infrastructure Traffic Management Steering Policy (Failover)

To work with Oracle Cloud Infrastructure Traffic Management Steering Policies, you need a delegated Zone in Oracle Cloud Infrastructure DNS. Oracle is not a registrar, so you need to obtain a domain if you don’t already have one.

Get a Domain, e.g. oracleceal.com, from a Domain Provider like GoDaddy.

GoDaddy uses its own NameServers.

You can create an “A Record” in GoDaddy DNS Management that maps the Load Balancer Reserved IP address to the required Sub Domain, e.g. oas.oracleceal.com, but here we need to delegate the Domain to an Oracle Cloud Infrastructure Zone.

To delegate the Domain, create a DNS Zone in Oracle Cloud Infrastructure for that Domain and use the Oracle Cloud Infrastructure NameServers at the Domain Provider, e.g. GoDaddy.

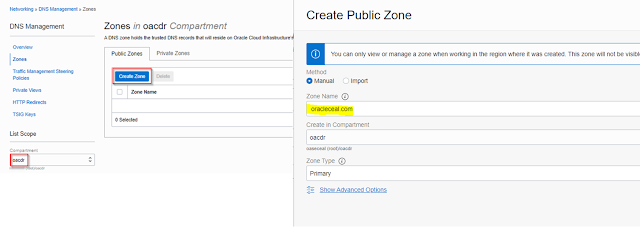

Create Public Zone on the Oracle Cloud Infrastructure (OCI) Home Region (e.g. Ashburn)

In the Oracle Cloud Infrastructure Console, navigate to Networking > DNS Management > Zones > Create Public Zone

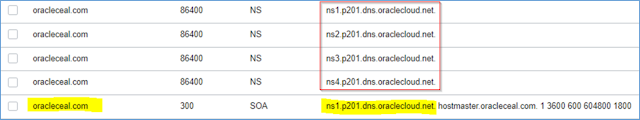

Obtain the Public Zone Name Server hostnames

Add these Oracle Cloud Infrastructure NameServers at your Domain Provider, e.g. GoDaddy.

Log in to the Domain Provider portal and change the Name Servers to those of the DNS Zone created in Oracle Cloud Infrastructure.

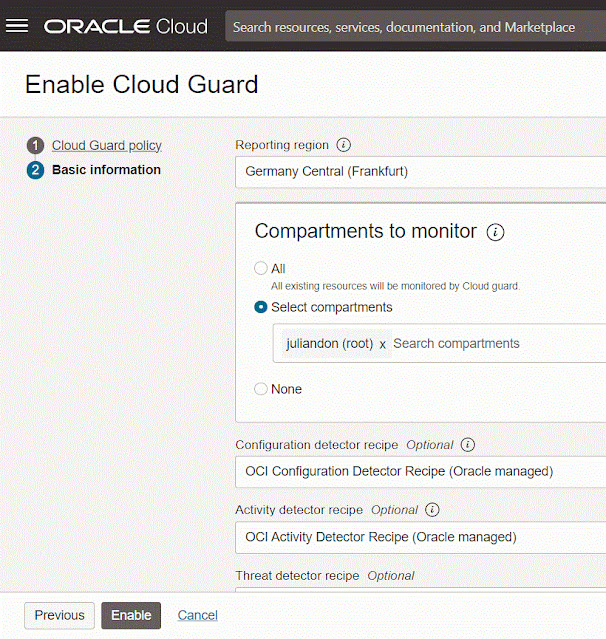

Create Oracle Cloud Infrastructure Traffic Management Steering Policy (Failover)

Log in to the Oracle Cloud Infrastructure Console as an Administrator and select the Home Region, e.g. Ashburn

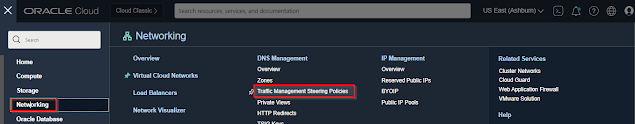

Navigate to Networking > DNS Management and select Traffic Management Steering Policies

Select the Compartment as “oasmp”.

Click on Create Traffic Management Steering Policy

Select the Policy Type as Failover

Create Answer Pools (Pool 1 - Ashburn, Pool 2 - Phoenix)

Create Pool Priority (Which Pool should be the Primary Instance and which one to be the Disaster Recovery Instance based on Health Check Fail of the Primary Instance)

Click on Show Advanced Options

Select the Compartment where Oracle Cloud Infrastructure Public Zone is created. e.g. oacdr

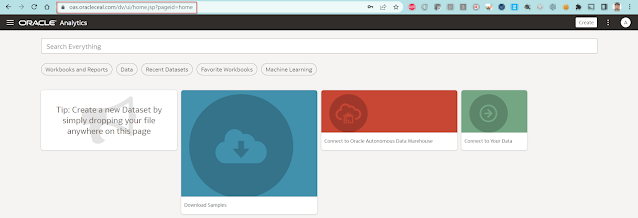

Test the access to Primary Oracle Analytics Server Instance.

https://oas.oracleceal.com/dv

Test the Oracle Cloud Infrastructure Traffic Management Steering Failover from Primary to Disaster Recovery Load Balancer, by stopping services at Primary Oracle Analytics Server Instance.

https://oas.oracleceal.com/dv

LIMITATION: The content is replicated using Snapshots and not through common Database RCU Schemas. After a failover from the Primary to the Secondary (DR) Oracle Analytics Server Instance, we therefore suggest taking a snapshot of the DR Instance and restoring it to the Primary Oracle Analytics Server Instance before allowing Users to access the Primary Instance through the same URL.
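One way to make this rule explicit in a runbook is a small guard that is checked before the Primary is brought back. The sketch below is only an illustration: the function name and both timestamps are hypothetical, and how you record "last change on DR" and "time the restored snapshot was taken" is left to your own process.

```python
from datetime import datetime
from typing import Optional


def primary_ready_for_failback(
    last_dr_change: datetime,
    restored_snapshot_taken: Optional[datetime],
) -> bool:
    """Return True only if the Primary was restored from a DR snapshot
    taken at or after the last content change made on the DR Instance."""
    if restored_snapshot_taken is None:
        # Nothing has been restored on the Primary since the failover.
        return False
    return restored_snapshot_taken >= last_dr_change
```

If the guard returns False, content created on the DR Instance during the outage would be missing on the Primary after fallback.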

Migrate Metadata and Content between Primary and Disaster Recovery Oracle Analytics Server Instances

Migrating Content using Snapshots

A snapshot captures the state of your environment at a point in time

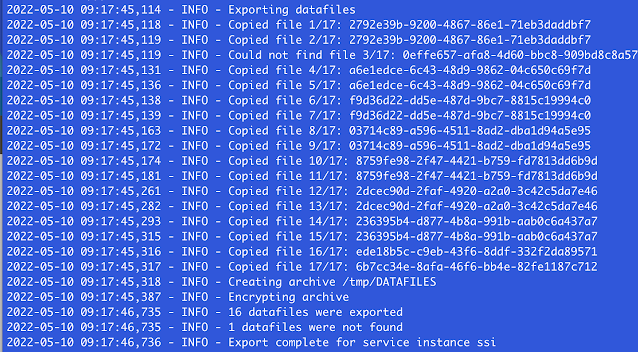

Exporting Snapshot in Primary Oracle Analytics Server Instance using script:

Run the exportarchive command to create a BAR file:

$DOMAIN_HOME/bitools/bin/exportarchive.sh <service instance key> <export directory> encryptionpassword=<password>

Example: ./exportarchive.sh ssi /tmp encryptionpassword=Admin123

Result:

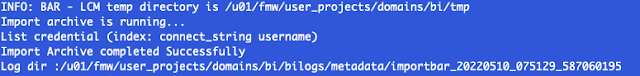

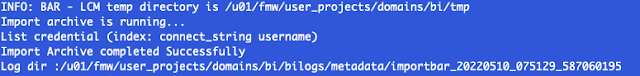

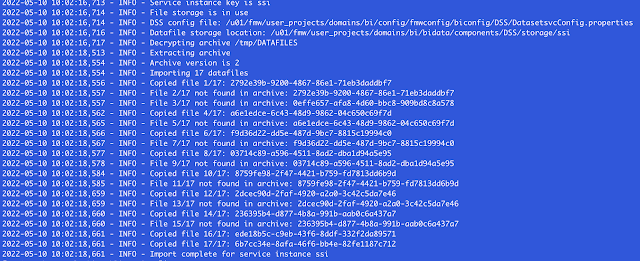

Importing Snapshot in Disaster Recovery Oracle Analytics Server Instance using script:

Copy the snapshot created in Primary Oracle Analytics Server Instance to Disaster Recovery Oracle Analytics Server Instance.

Run the importarchive command to import the BAR file:

$DOMAIN_HOME/bitools/bin/importarchive.sh <service instance key> <location of BAR file> encryptionpassword=<password>

Example: ./importarchive.sh ssi /tmp/ssi.bar encryptionpassword=Admin123

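Since the export and import have to be repeated periodically, it can help to build the two command lines from one place. The following is a minimal sketch, not an official tool: the function names are introduced here, and the assumption that the export writes `<export directory>/<service instance key>.bar` is inferred only from the two examples above (`/tmp` and `/tmp/ssi.bar`).

```python
import posixpath
from typing import List


def export_command(domain_home: str, instance_key: str,
                   export_dir: str, password: str) -> List[str]:
    """Argument list for exportarchive.sh on the Primary Instance."""
    script = posixpath.join(domain_home, "bitools", "bin", "exportarchive.sh")
    return [script, instance_key, export_dir, "encryptionpassword=" + password]


def import_command(domain_home: str, instance_key: str,
                   bar_file: str, password: str) -> List[str]:
    """Argument list for importarchive.sh on the Disaster Recovery Instance."""
    script = posixpath.join(domain_home, "bitools", "bin", "importarchive.sh")
    return [script, instance_key, bar_file, "encryptionpassword=" + password]


def expected_bar_file(export_dir: str, instance_key: str) -> str:
    """Assumed BAR file location, based on the examples in this blog."""
    return posixpath.join(export_dir, instance_key + ".bar")
```

Each list can be passed to `subprocess.run(cmd, check=True)` on the respective server. Copying the BAR file between the Regions is not covered by this sketch.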

Snapshot Exclusions:

There are a few items that aren't included in a snapshot:

- System settings - Any properties that you configured on the System Settings page.

- Snapshot list - The list of snapshots that you see on the Snapshot page.

- BI Publisher JDBC connections.

- Data that's hosted on external data sources.

Migrating File-based Data

Snapshots don’t include the file-based data which are used for creating data sets in Oracle Analytics Server.

To migrate file-based data, you must export your data files to an archive file in the Primary Oracle Analytics Server Instance and import the archive file into the Disaster Recovery Oracle Analytics Server Instance.

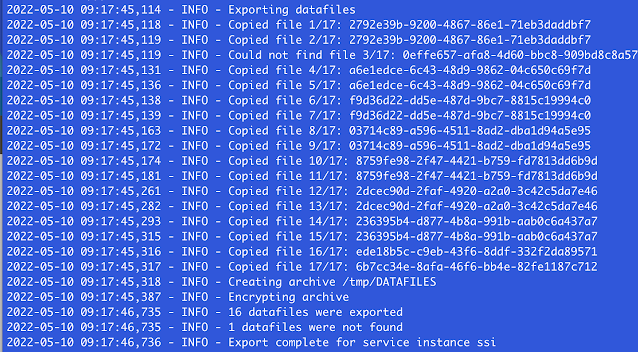

Export Data files from Primary Oracle Analytics Server Instance

- Navigate to ORACLE_HOME/bi/modules/oracle.bi.publicscripts

- Copy migrate_datafiles.py to migrate_datafiles_export.py

- Update migrate_datafiles_export.py as follows

domain_home = topology.get_domain_home()

oracle_home = topology.get_oracle_home()

wlst_path = topology.get_wlst_path()

internal_scripts_path = os.path.join(topology.get_publicscripts_path(), 'internal')

To

domain_home = '[PATH_DOMAIN_HOME]'

oracle_home = '[PATH_ORACLE_HOME]'

wlst_path = '[PATH_WLST.SH]'

internal_scripts_path = '[PATH_INTERNAL_SCRIPTS]'

Example:

domain_home = "/u01/fmw/user_projects/domains/bi"

oracle_home = "/u01/fmw/bi"

wlst_path = "/u01/fmw/oracle_common/common/bin/wlst.sh"

internal_scripts_path = "/u01/fmw/bi/modules/oracle.bi.publicscripts/internal"
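In the example, all four values sit under one Fusion Middleware home (`/u01/fmw`). If your installation follows the same layout, the values can be derived from that single directory instead of being typed four times. This is a sketch under that assumption only: the function name `derive_paths` is hypothetical, and the directory layout is taken from the example values above, so verify each path on your own server.

```python
import posixpath
from typing import Dict


def derive_paths(fmw_home: str) -> Dict[str, str]:
    """Derive the four script variables from one Fusion Middleware home.

    The layout mirrors the example values in this blog and may differ
    on other installations.
    """
    oracle_home = posixpath.join(fmw_home, "bi")
    return {
        "domain_home": posixpath.join(fmw_home, "user_projects", "domains", "bi"),
        "oracle_home": oracle_home,
        "wlst_path": posixpath.join(fmw_home, "oracle_common", "common", "bin", "wlst.sh"),
        "internal_scripts_path": posixpath.join(
            oracle_home, "modules", "oracle.bi.publicscripts", "internal"),
    }


def as_assignments(paths: Dict[str, str]) -> str:
    """Render the values as the assignment lines to paste into the script."""
    return "\n".join('{} = "{}"'.format(name, value) for name, value in paths.items())
```

Calling `print(as_assignments(derive_paths("/u01/fmw")))` prints the four assignment lines shown in the example.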

Export the data files to an archive file in the Primary Oracle Analytics Server Instance using the updated script and the following command:

python migrate_datafiles_export.py /tmp/DATAFILES export --logdir=/tmp

Result:

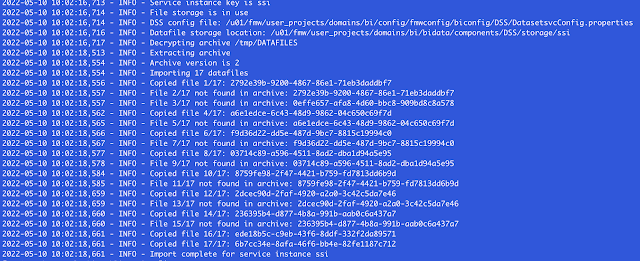

Import Data files to Disaster Recovery Oracle Analytics Server Instance

- Copy the DATAFILES archive file from the source environment to the target environment.

- Navigate to ORACLE_HOME/bi/modules/oracle.bi.publicscripts

- Copy migrate_datafiles.py to migrate_datafiles_import.py

- Update migrate_datafiles_import.py as follows

domain_home = topology.get_domain_home()

oracle_home = topology.get_oracle_home()

wlst_path = topology.get_wlst_path()

internal_scripts_path = os.path.join(topology.get_publicscripts_path(), 'internal')

To

domain_home = '[PATH_DOMAIN_HOME]'

oracle_home = '[PATH_ORACLE_HOME]'

wlst_path = '[PATH_WLST.SH]'

internal_scripts_path = '[PATH_INTERNAL_SCRIPTS]'

Example:

domain_home = "/u01/fmw/user_projects/domains/bi"

oracle_home = "/u01/fmw/bi"

wlst_path = "/u01/fmw/oracle_common/common/bin/wlst.sh"

internal_scripts_path = "/u01/fmw/bi/modules/oracle.bi.publicscripts/internal"

Import the data files from the archive file into the Disaster Recovery Oracle Analytics Server Instance using the updated script and the following command:

python migrate_datafiles_import.py /tmp/DATAFILES import --logdir=/tmp

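The export and import commands differ only in the script copy and the mode, so a small helper can build both and reject a mistyped mode. This is a sketch: `datafiles_command` is a name introduced here, and the script name is a parameter because the steps above create separate edited copies for export and import.

```python
from typing import List

VALID_MODES = ("export", "import")


def datafiles_command(script: str, archive: str, mode: str, logdir: str) -> List[str]:
    """Argument list in the same order as the commands shown in this blog:
    python <script> <archive> <mode> --logdir=<logdir>
    """
    if mode not in VALID_MODES:
        raise ValueError("mode must be 'export' or 'import', got %r" % (mode,))
    return ["python", script, archive, mode, "--logdir=" + logdir]
```

For example, `datafiles_command("migrate_datafiles_export.py", "/tmp/DATAFILES", "export", "/tmp")` yields the export command, and the result can be passed to `subprocess.run(cmd, check=True)` from the `oracle.bi.publicscripts` directory.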

Migrating BI Publisher JDBC Connections:

Snapshots don’t include the BI Publisher JDBC Connections, so copy the datasources.xml file from the Primary Oracle Analytics Server Instance to the Disaster Recovery Oracle Analytics Server Instance.

Path: /fmw/user_projects/domains/bi/config/fmwconfig/biconfig/bipublisher/Admin/DataSource/datasources.xml

Note: Once the JDBC Connections are migrated, re-enter the passwords in the Disaster Recovery Oracle Analytics Server Instance, then submit and apply.
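When the file is replaced on the Disaster Recovery Instance, it is safer to keep the previous copy. The sketch below assumes the Primary's datasources.xml has already been transferred to the DR server; the function name `install_datasources` and the `.bak` suffix are choices made here for illustration, not part of any Oracle tooling.

```python
import shutil
from pathlib import Path
from typing import Optional, Union


def install_datasources(source_file: Union[str, Path],
                        target_file: Union[str, Path]) -> Optional[Path]:
    """Copy source_file over target_file, backing up an existing target first.

    Returns the backup path, or None if there was no existing target.
    """
    source = Path(source_file)
    target = Path(target_file)
    backup = None
    if target.exists():
        # Keep the DR Instance's current file next to it as datasources.xml.bak
        backup = target.with_name(target.name + ".bak")
        shutil.copy2(target, backup)
    target.parent.mkdir(parents=True, exist_ok=True)
    shutil.copy2(source, target)
    return backup
```

The passwords still have to be entered on the DR Instance afterwards, as the note above says.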

Source: oracle.com